smiller29

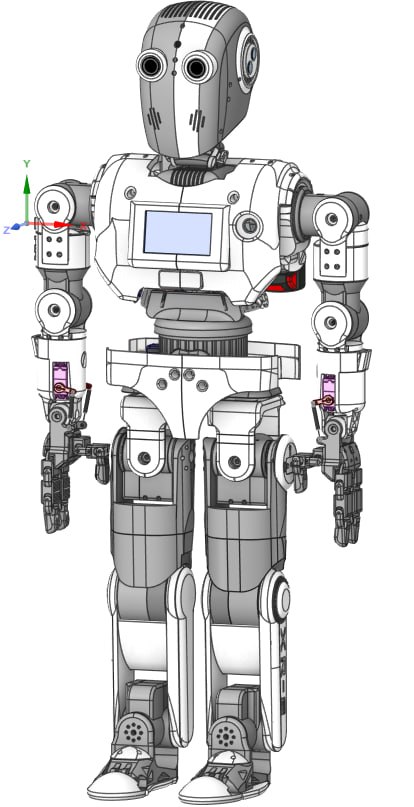

We Are Getting Ready To Release Our Next Generation Open Source Robot The XR-2

The XR-2 robot platform, available at Robots Builder, is designed with user-friendliness in mind, emphasizing accessibility and ease of assembly. Developed to make robotics more approachable, the XR-2 has been meticulously engineered for easy 3D printing and assembly using standard components like servos and bearings. This platform is a significantly updated version of the XR-1, which was released last year. We've addressed several weaknesses from the XR-1 and added many new features to enhance the XR-2.

The XR-2 supports two single-board computers (SBCs). It uses a PC mounted behind the display in the chest to support Synthiam ARC, allowing for seamless robot programming and control. Additionally, it has a designated space for mounting a Jetson Orin Nano just above the PC below the neck, which enhances its onboard AI server capabilities. On the back, there is a custom servo breakout board featuring two Mega Minis and USB ports. The power supply consists of two 20V power tool batteries. The robot boasts 49 degrees of freedom and supports two cameras. There is also space to accommodate up to 10 small Arduinos or sensors in each leg, allowing for extensive customization. Almost every joint in the robot uses bearings to relieve structural stress from the servos. However, it is important to note that the XR-2 is a relatively heavy robot.

Our commitment to simplifying the building process ensures that enthusiasts and robot hobbyists of all skill levels can engage in constructing their own robots. We've invested time in perfecting the design, ensuring that users can quickly transition to the exciting aspects of teaching and programming their robots. The XR-2 is highly customizable, featuring a variety of hands and heads to choose from, allowing users to personalize their creations. To foster a collaborative and open community, we're proud to announce that everything about the XR-2 is open source, inviting users to contribute, modify, and share their innovations with the global robotics community. Get ready to embark on a journey of creativity and exploration with the XR-2!

For more information, check out the following link in a few weeks as we will be updating the website with the new XR-2 files: Robots Builder

DJ, wow thank you for putting all that together!!!

In the following video you will see where they set up an LLM chatbot using Whisper STT and Piper TTS. You will also see it is performing reasonably fast.

If there is a way to also get vision linked into this, that would be a big plus. In my case, I would like to offload most of the AI work to the Nano and use ARC to leverage it and do everything else.

What I would like to be able to do is leverage these services by sending calls to the Jetson's speech-to-text and text-to-speech services. Then I could use these calls in ARC for logic development. I am not looking to use this as a server outside the robot. I want this to be on board.
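To make the flow concrete, here is a minimal sketch of one spoken exchange, assuming the Jetson services are wrapped as three plain functions. The names `voice_turn`, `speech_to_text`, `generate_reply`, and `text_to_speech` are hypothetical placeholders, not ARC or Jetson APIs; each would wrap whatever call the on-board service actually exposes.

```python
from typing import Callable


def voice_turn(
    audio: bytes,
    speech_to_text: Callable[[bytes], str],
    generate_reply: Callable[[str], str],
    text_to_speech: Callable[[str], bytes],
) -> tuple[str, str, bytes]:
    """Run one spoken exchange and return (heard text, reply text, reply audio)."""
    heard = speech_to_text(audio).strip()
    if not heard:
        # Nothing was recognized, so skip the LLM and TTS calls entirely.
        return "", "", b""
    reply = generate_reply(heard)
    return heard, reply, text_to_speech(reply)


# Stand-in stages so the flow can be tried without a Jetson attached.
heard, reply, speech = voice_turn(
    b"fake-audio",
    speech_to_text=lambda audio: " hello robot ",
    generate_reply=lambda text: f"You said: {text}",
    text_to_speech=lambda text: text.encode("utf-8"),
)
print(heard, "->", reply)
```

Keeping the three stages as separate callables means the recognized text and the reply text are both available to the calling script for its own logic, which is the part I care about on the ARC side.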

There are many more examples of this type of thing using other LLMs. I just want to get conversational speech out and be able to provide input using speech. I hope this makes sense to you and others reading this.

The details I provided should get the ball rolling. Inference on an image is going to be challenging on the Nano, but it might be possible to some extent.

I'd suggest getting your LLM running and exposing it through an OpenAI-compatible API. That way, any AI product that speaks the OpenAI protocol will be able to talk to your Nano server. The OpenAI protocol is the most common, so it'll be what you want to expose on the server.

You can test the LLM by pointing the endpoint configuration of the OpenAI ChatGPT robot skill to your server. However, be sure to disable images in the robot skill because the Nano will not run inference on images right away. I recommend one step at a time: get the LLM working as a "chatbot," and then you can start focusing on more functions.
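Before pointing the robot skill at the server, it can help to confirm the endpoint answers a plain text request. This is a rough sketch using only the Python standard library. It assumes the server exposes the OpenAI-style `/v1/chat/completions` route; the address in `BASE_URL` and the model name `local-model` are placeholders that depend on how your Nano server is set up.

```python
import json
import urllib.request

# Hypothetical address of the Jetson on the robot's internal network.
BASE_URL = "http://192.168.1.50:8000/v1"


def build_chat_request(base_url, model, user_text):
    """Build a text-only POST for the OpenAI-style chat completions route."""
    payload = {
        "model": model,
        "messages": [
            {"role": "system", "content": "You are a helpful robot assistant."},
            {"role": "user", "content": user_text},
        ],
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(base_url, model, user_text, timeout=60):
    """Send the request and return the assistant's reply text."""
    request = build_chat_request(base_url, model, user_text)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    # OpenAI-style responses carry the reply in choices[0].message.content.
    return body["choices"][0]["message"]["content"]
```

With the server running, `ask(BASE_URL, "local-model", "Hello, who are you?")` should return the reply as a string. The request carries text only, which matches leaving images disabled for now.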

Hugging Face has a ridiculous number of models, but the problem is that many of them are tied to specific configurations and environments.

DJ, wow it looks like you have been playing with the Jetson Orin Nano based on your above post. Thank you so much for your feedback.

Is the Jetson Nano on board the robot? Unless you are using it for specific ML or AI tasks controlling sensors and actuators that need a fast response, I would honestly not have it in the robot. It doesn't support Windows that I am aware of, so it can't run ARC. If you do need onboard compute for local devices, you are probably better off with an x86 machine. If you are going to offload AI to an ARM board with a GPU, the Jetson Nano is not ideal for LLMs because it doesn't have a lot of VRAM, so you may as well send that work to the cloud or to a high-end GPU like an RTX 4090 or 5090 with sufficient VRAM for inference. There are a few small 4-bit GGUF models (Phi, Gemma, Llama) that will run on the 4 GB / 8 GB Jetson Nano, but unless you are going to fine-tune them for a specific task, they really won't provide a lot of value.
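As a back-of-the-envelope check on the memory point, weight size is roughly parameter count times bits per weight divided by 8. The sketch below is only that estimate: it ignores the KV cache and runtime overhead, real 4-bit GGUF files use slightly more than 4 bits per weight, and the `reserve_gb` headroom is an assumed figure rather than a measured one. Keep in mind that Jetson boards share one memory pool between CPU and GPU, so the OS eats into the same budget.

```python
def weight_memory_gb(params_billions: float, bits_per_weight: float) -> float:
    """Approximate memory for the model weights alone, in GB (10^9 bytes)."""
    return params_billions * bits_per_weight / 8


def fits(params_billions, bits_per_weight, memory_gb, reserve_gb=2.0):
    """True if the weights fit after reserving memory for everything else.

    reserve_gb is an assumed allowance for the OS, KV cache, and runtime.
    """
    return weight_memory_gb(params_billions, bits_per_weight) <= memory_gb - reserve_gb


# A 7-billion-parameter model at 4 bits per weight needs about 3.5 GB for weights.
print(weight_memory_gb(7, 4))
print(fits(7, 4, memory_gb=8))  # 8 GB board
print(fits(7, 4, memory_gb=4))  # 4 GB board
```

Under these assumptions a 7B model at 4 bits squeezes onto an 8 GB board but not a 4 GB one, which is why only the smaller models are realistic here.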

If you read the main post, you will see it has a Windows 11 PC running ARC and a host of Arduinos running EZB firmware connected by USB. It also has the option to install a second SBC, a Jetson Orin Nano, to support onboard AI services if you choose to add one.

I agree the Jetson Orin Nano is not as powerful as the cloud AI servers, but at the same time it is a very capable SBC that can add a lot of value to your robot build.

Personally, I would love to use the Jetson as a personal AI learning assistant in my robot and also be able to use the STT and TTS inputs and outputs from ARC scripts. If I can do more, that would be an added plus.

Hi smiller, have you tested the walking capabilities with a prototype? How does the robot keep balance?

@Dark Harvest, the robot has the ability to walk, but making it walk is part of your journey. Many sensors can be added to help with this. We also make a controller board that supports feedback if you decide to modify your servos and develop programming that uses the feedback.

We want to find a way to use the AI to help with that, but once again, that is part of the journey.

With this being an open source project, we hope builders will share with others when it comes to using ARC and the Jetson. We want to hear what skills and services they are using. This is meant to be a learning tool in this hobby space.

I just wanted to post some more pictures of the XR-2 test build.

He is 4 feet tall.