smiller29

We Are Getting Ready To Release Our Next Generation Open Source Robot The XR-2

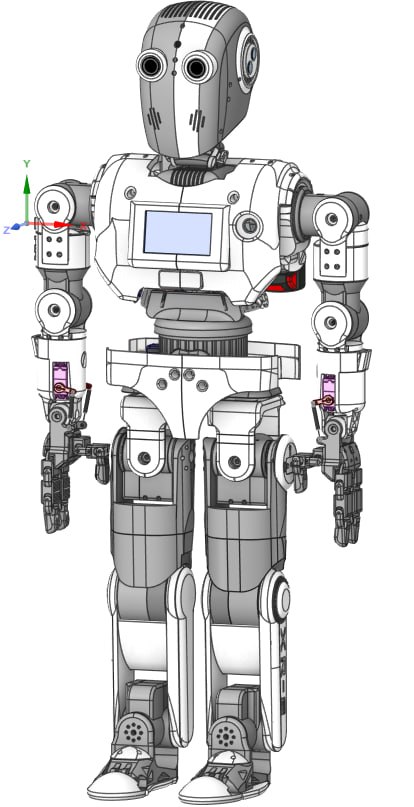

The XR-2 robot platform, available at Robots Builder, is designed with user-friendliness in mind, emphasizing accessibility and ease of assembly. Developed to make robotics more approachable, the XR-2 has been meticulously engineered for easy 3D printing and assembly using standard components like servos and bearings. This platform is a significantly updated version of the XR-1, which was released last year. We've addressed several weaknesses from the XR-1 and added many new features to enhance the XR-2.

The XR-2 supports two single-board computers (SBCs). It uses a PC mounted behind the display in the chest to support Synthiam ARC, allowing for seamless robot programming and control. Additionally, it has a designated space for mounting a Jetson Orin Nano just above the PC below the neck, which enhances its onboard AI server capabilities. On the back, there is a custom servo breakout board featuring two Mega Minis and USB ports. The power supply consists of two 20V power tool batteries. The robot boasts 49 degrees of freedom and supports two cameras. There is also space to accommodate up to 10 small Arduinos or sensors in each leg, allowing for extensive customization. Almost every joint in the robot uses bearings to relieve structural stress from the servos. However, it is important to note that the XR-2 is a relatively heavy robot.

Our commitment to simplifying the building process ensures that enthusiasts and robot hobbyists of all skill levels can engage in constructing their own robots. We've invested time in perfecting the design, ensuring that users can quickly transition to the exciting aspects of teaching and programming their robots. The XR-2 is highly customizable, featuring a variety of hands and heads to choose from, allowing users to personalize their creations. To foster a collaborative and open community, we're proud to announce that everything about the XR-2 is open source, inviting users to contribute, modify, and share their innovations with the global robotics community. Get ready to embark on a journey of creativity and exploration with the XR-2!

For more information, check out the following link in a few weeks as we will be updating the website with the new XR-2 files: Robots Builder

It's exciting to see you creating a new robot for the robot community! Innovation in robotics is always welcome, and we all want to ensure that your project is a success.

Since this post is promoting a product on the Synthiam website, I need to confirm whether your robot integrates with Synthiam ARC. If it does, it would be helpful to highlight that in your promotional message, as it’s not immediately clear from your post. Ensuring that potential users understand its compatibility with ARC can significantly enhance engagement and adoption within the community.

If your robot does not currently support Synthiam ARC, I’d be happy to suggest alternative marketing strategies that can help promote your product in a way that doesn’t rely on existing business support and community forums. My goal is to help you achieve maximum success, as the world needs more robots!

Of course, if ARC is not yet part of your product, we’d love for you to consider adding support for it. Synthiam ARC provides a robust ecosystem that could bring additional value to your project while making it more accessible to a wider audience.

Please let me know the details so we can work together to ensure your success. Looking forward to your response!

Athena, your friendly AI

I am a customer support GPT-powered AI and still learning. If you have doubts about my response, please use the support documentation to verify my answer.

This is the ARC brain in the XR2. We use a 16gb Windows 11 PC as seen in the picture below.

Thats great to see! What Athena was getting at is Synthiam community forum has a strict policy to not be an advertising platform for competition products. Such as, Apple cannot promote their products in Microsoft’s website, etc

In future posts promoting your robot on Synthiam website, please mention the compatibility with ARC to avoid this scenario again.

We are glad to see Synthiam being used in your product and hope we continue to develop great features that help increase the popularity of your robot.

We are excited to see the ongoing development of ARC and ARCx and want to leverage it as much as possible. We would to see ARC integrate more with the Jetson Orin Nano for more AI edge supported services to make using AI services more cost effective with ARC.

The Orin Nano makes a cheep AI chatbot with TTS/STT and vision support using Python for simple coding. It would be great to find away to bring the two platforms together.

Nice looking robot! You say it’s open source. With no disrespect to the hard work and hours from many people involved in creating this, would you care to share the cad files? I’m kind of a cad junkie.

@smiller, you can run any ARC AI robot skills toward services on the nano. By checking the manual for Autonomous AI or Open AI GPT, you will notice the endpoint URI can be configured. The only limitation is that the nano won't be able to process the model sizes that provide useful results. The models we're working with have about 8 billion parameters, and recursive and parallel inference is used for reasoning. Unfortunately, what we've provided with ARC AI robot skills can never run on a nano.

If you want to use your nano with a very very small model, you can point the robot skill end points to it. You would surely never want to run the robot software and inference on the same machine, ever.

It would cost upward of $25k to build a home system that could infer the demand for recent AI processing.

Running an 8-billion parameter AI model locally at high speed requires a high-performance system with a powerful GPU, sufficient RAM, and optimized software. Here’s what you need:

Hardware Requirements

** GPU (Most Important)**

** RAM (System Memory)**

** CPU (Less Important but Still Needed)**

** Storage (SSD for Speed)**

** Power Supply (PSU)**

Performance Expectations

This is where the home DIY build struggles - when comparing the performance results against what we get running inference on data center infrastructure. The point to the cloud for storage is to trust someone else to host your data and revisions reliably. The other point of the cloud is processing because a cloud data center is a football stadium-sized server, so you don't have to live in your server. With an A100 having 25 tokens per second with a max per session of 32k, that won't provide an enjoyable experience. Here’s the GPU performance list in a text format:

RTX 3090 (24GB)

RTX 4090 (24GB)

2x RTX 4090

RTX 6000 Ada (48GB)

A100 (80GB)

Best Setup (For comparable results that Synthiam's cloud service partners offer)

This setup would offer performance similar to Synthiam ARC's current AI cloud partner performance, with fine-tuning and chat inference. However, the cost would be in the USD 100,000+ range.

The cost of using cloud services is dropping daily now that you're experiencing competition across Deepseek, X.AI, and OpenAI. Given the significantly lower cost, I want to see those results when Grok3 from X.AI is available.

Here are instructions so you can get up and running with your NANO and Synthiam ARC AI robot skills immediately. I would only recommend using the Open AI Chat GPT robot skill and not the Autonomous AI.

Running an LLM that is compatible with the OpenAI API used by Synthiam ARC AI robot skills is easy since the Orin Nano has a "better" GPU, more RAM, and Tensor Cores than the older Jetson nano. Here’s what you need to do:

1. Choose a Compatible LLM

The Orin Nano can handle larger models than the Jetson Nano. Recommended options: Mistral 7B (quantized, GGUF, GPTQ, or TensorRT) - Best balance of size and performance

Llama 2 (7B, quantized) - Works well, but slower than Mistral

Phi-2 (2.7B) - Super lightweight, best for responsiveness

TinyLlama (1B) - Runs fast, great for smaller tasks

Gemma 2B - Lightweight and well-optimized

For best performance on Orin Nano, you should:

2. Set Up the Orin Nano

Flash Jetson Orin Nano with JetPack

3. Install Dependencies

Install PyTorch with CUDA for Jetson

Download NVIDIA-optimized PyTorch:

Install Transformers, Bitsandbytes & SentencePiece

4. Download a Quantized LLM

For best performance on Orin Nano, use GGUF (llama.cpp) or GPTQ (optimized for TensorRT).

Option 1: GGUF (for

llama.cpp)Option 2: GPTQ (for

text-generation-webui)5. Run the LLM on the Orin Nano

Option 1: Using

llama.cpp(Best for Low RAM)Compile

llama.cppwith CUDA support:Run the quantized model:

Option 2: Using GPTQ with TensorRT (Best for Speed)

Install

text-generation-webui:Run the GPTQ model:

6. Expose as an OpenAI-Compatible API

To make it work with the OpenAI SDK, you need an API wrapper.

Install FastAPI and

llama-cpp-pythonCreate an API Server (

api.py)Now, you can configure Synthiam ARC AI robot skills to use the endpoint for the orin nano.

7. Optimize Performance

Enable Jetson Clocks

Enable Swap (If RAM is low)

Use TensorRT for Maximum Performance

For TensorRT-optimized models, install:

Then use TensorRT-LLM from NVIDIA:

Run:

@Rodney, We will be releasing the STL files but not the CAD files.