Using OpenAI GPT-4 To Control A Robot Arm

We've been working on a few things using OpenAI's API, such as navigating any robot around any environment and avoiding obstacles with only a camera (no lidar or other sensors). We also ran a few other tests to move a robot arm, and I wanted to share what you can expect from Synthiam ARC in 2024. We're publishing this as a Synthiam ARC robot skill, but in the meantime, we're trying various language syntaxes, and we've made decent progress with them. I will try a few other optimizations tomorrow, but as you can see, it works pretty well!

Controlling a Robot Arm with OpenAI GPT-4

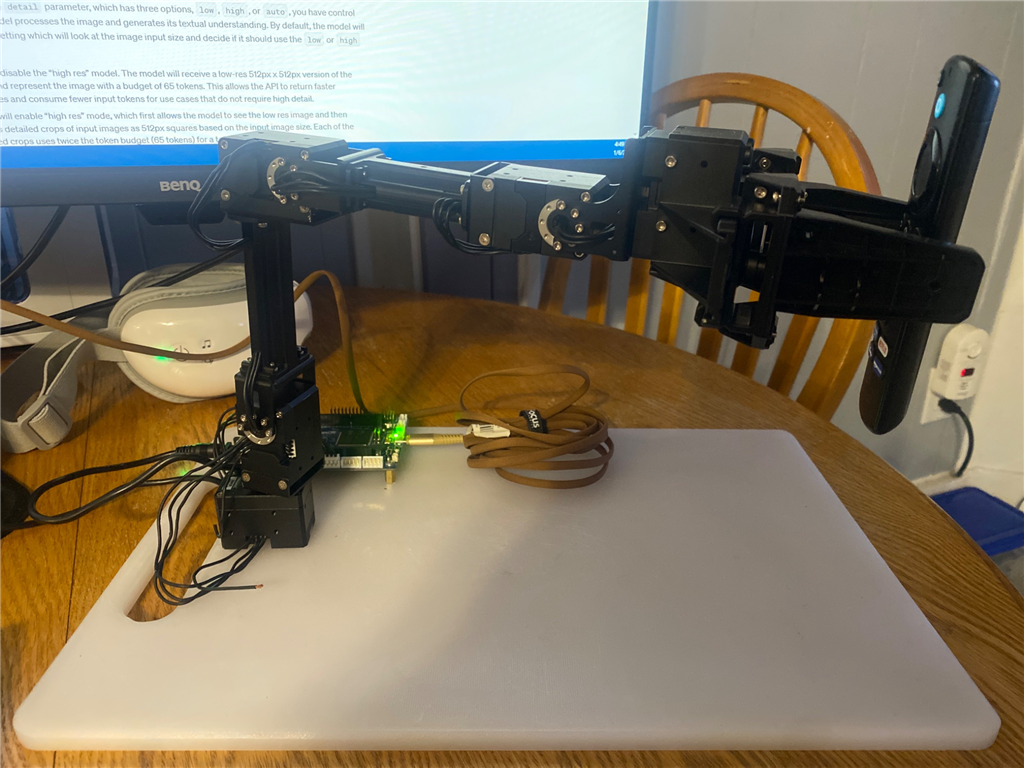

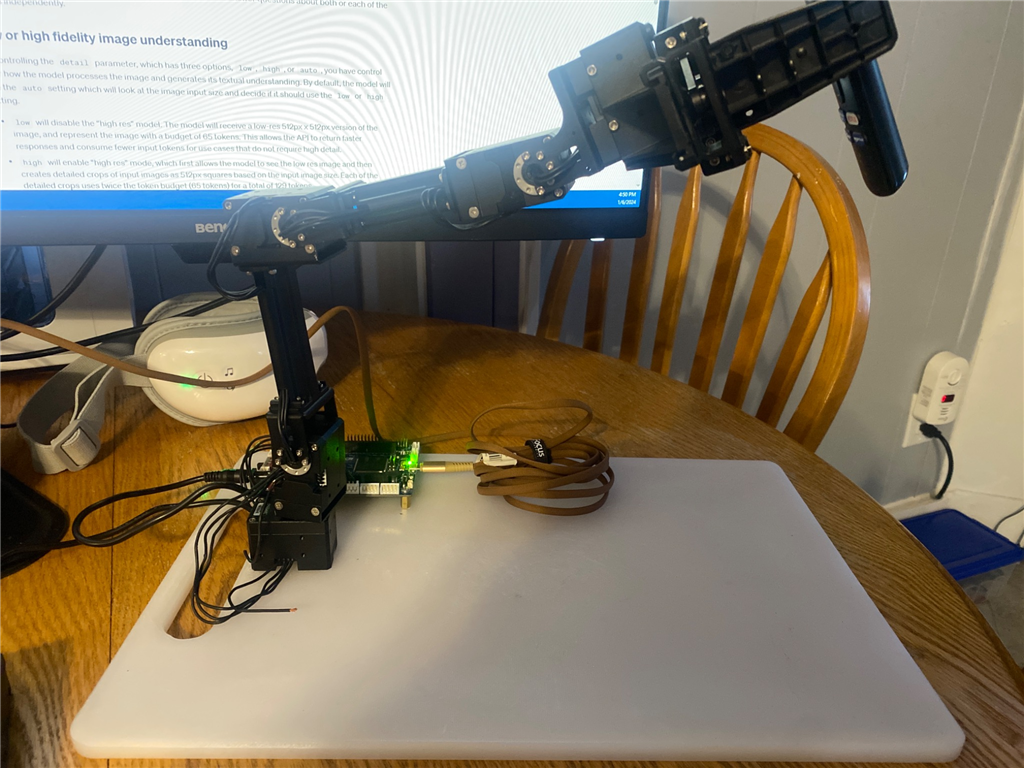

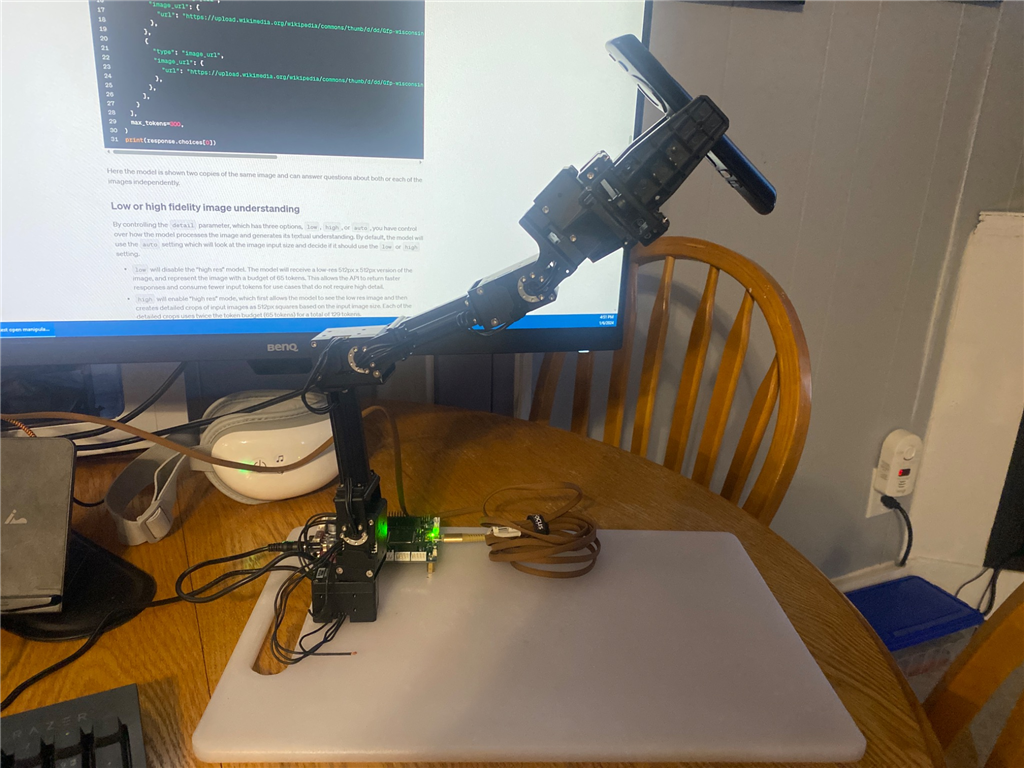

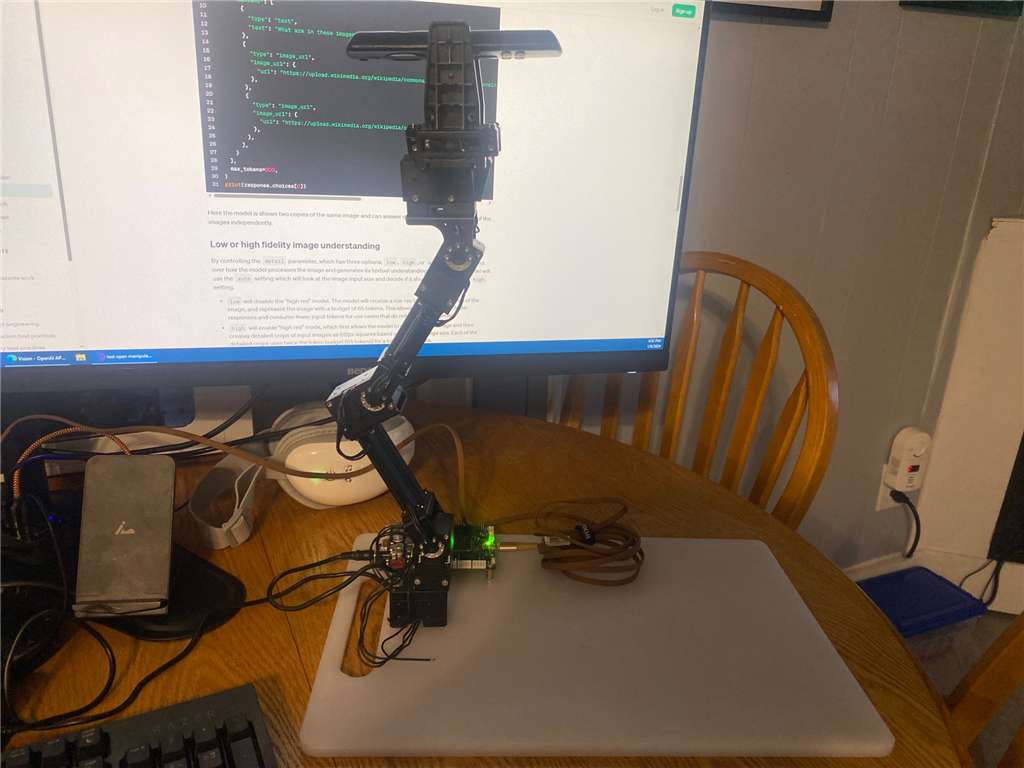

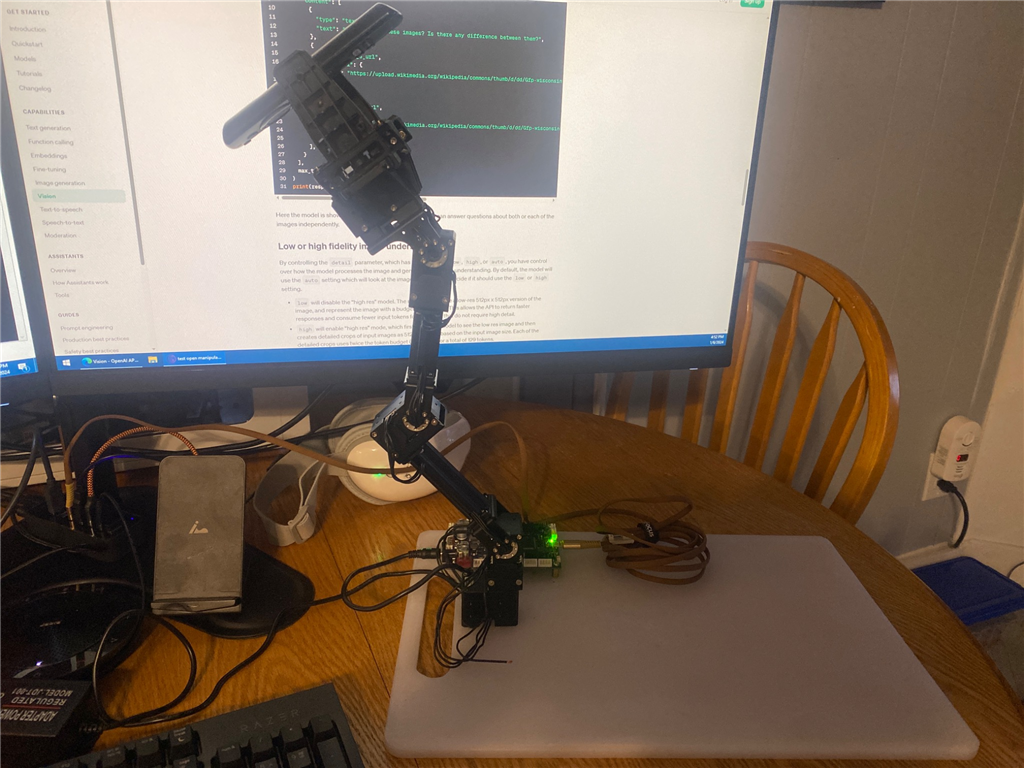

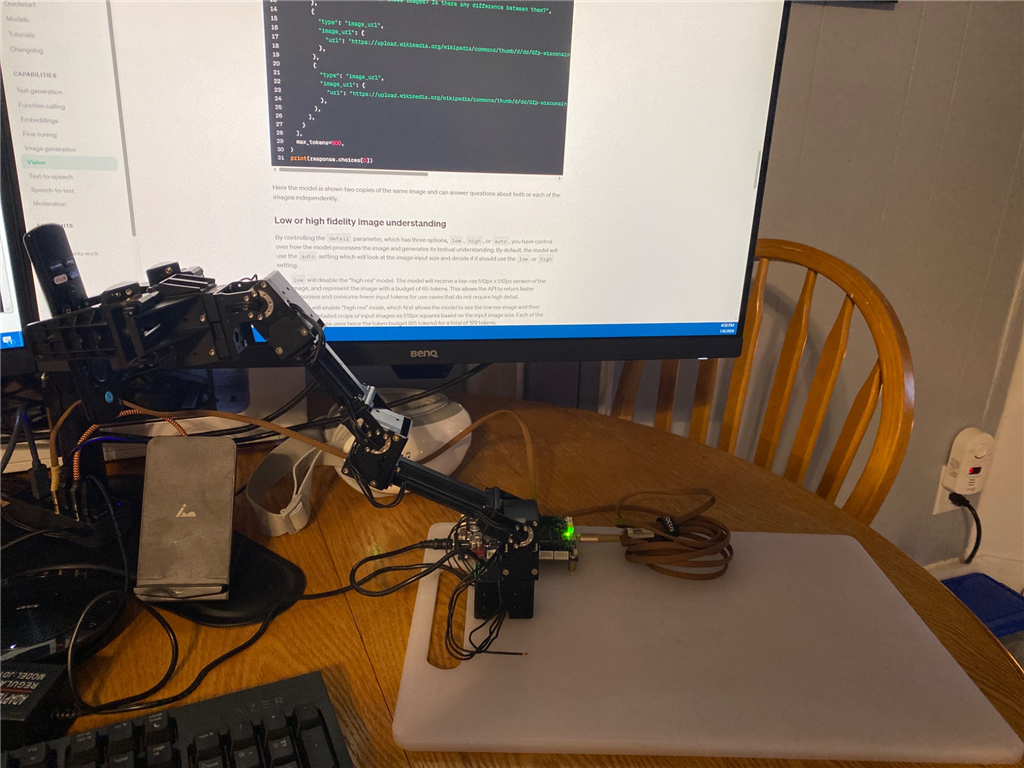

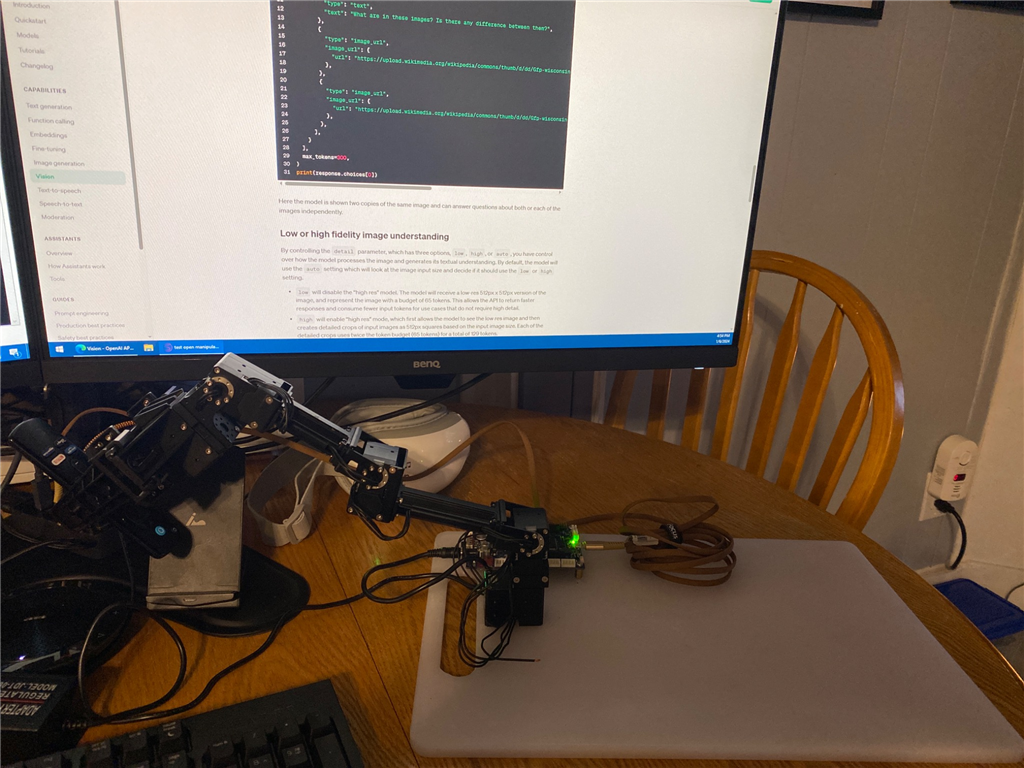

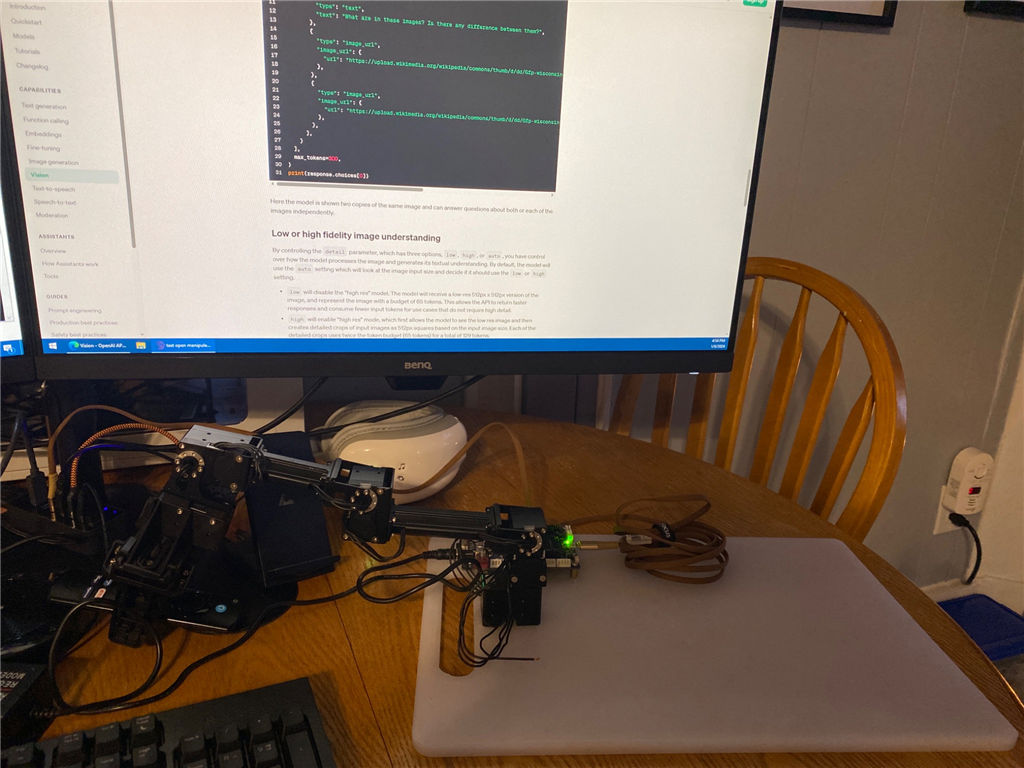

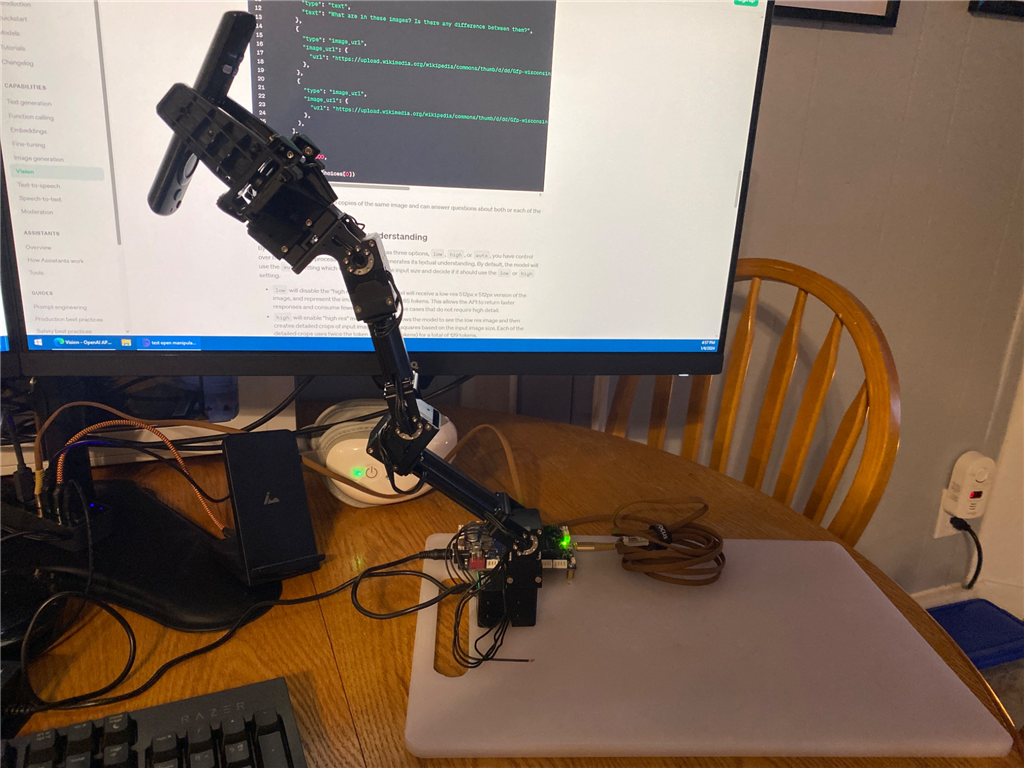

Here's how it works. With a camera mounted facing the robot arm and environment, you configure each servo and position. That information is formatted into instructions that GPT-4 understands. Initializing provides the angles of each servo, the direction the servos move, the range of the servos, and an image of the arm in the initialization position. Once you provide a goal, the GPT will incrementally move the robot, take a photo, and repeat. This process continues until the robot has reached the desired goal or cannot reach it. The GPT will control the servos and alter the positions based on the updated images. Choosing a camera location that properly captures the area is important so the GPT can understand the effect of increments.
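The loop described above can be sketched roughly as follows. This is a minimal illustration, not the robot skill's actual code: `ServoConfig`, `build_prompt`, `run_goal`, and the three callables (`capture_image`, `ask_model`, `move_servos`) are all hypothetical names, and the prompt wording is an assumption. The caller supplies the camera, the model request, and the servo driver.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass
class ServoConfig:
    """Hypothetical description of one servo, as supplied at initialization."""
    port: str        # port label such as "v11" (format borrowed from the log)
    angle: int       # current position in degrees
    min_angle: int   # lower end of the allowed range
    max_angle: int   # upper end of the allowed range
    direction: str   # what increasing the angle does, in plain words


def build_prompt(servos: List[ServoConfig], goal: str) -> str:
    """Format servo state and the goal as text instructions for the model."""
    lines = ["You control a robot arm with these servos:"]
    for s in servos:
        lines.append(
            f"{s.port}: angle={s.angle}, range={s.min_angle}-{s.max_angle}, "
            f"increasing moves {s.direction}"
        )
    lines.append(f"Goal: {goal}")
    return "\n".join(lines)


def run_goal(
    goal: str,
    servos: List[ServoConfig],
    capture_image: Callable[[], bytes],
    ask_model: Callable[[str, bytes], Optional[Dict[str, int]]],
    move_servos: Callable[[Dict[str, int]], None],
    max_steps: int = 20,
) -> bool:
    """Repeat photo -> model -> move until the model stops proposing moves.

    Returns True if the model ended the loop, False if max_steps ran out.
    """
    by_port = {s.port: s for s in servos}
    for _ in range(max_steps):
        image = capture_image()
        proposed = ask_model(build_prompt(servos, goal), image)
        if proposed is None:  # goal reached, or the model reports it cannot reach it
            return True
        safe: Dict[str, int] = {}
        for port, angle in proposed.items():
            if port not in by_port:
                continue  # ignore servos that were never configured
            s = by_port[port]
            safe[port] = max(s.min_angle, min(s.max_angle, angle))  # keep within range
            s.angle = safe[port]
        move_servos(safe)
    return False
```

Clamping each proposed angle to the configured range is a design choice in this sketch, so that a bad suggestion from the model cannot drive a servo past its limits.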

I'm using a Robotis Open Manipulator/X robot arm in this test. The GPT controls 4 of the 6 servos. I am manually opening and closing the 2 remaining servos, which drive the gripper, because the camera angle does not show the gripper.

What you see in the log below are the individual images that the GPT processes and the servo positions it suggests. The log also includes commentary from the GPT on when it has achieved the goal or if it runs into issues.

The goals that I have inputted into the GPT are bolded in the log below.

The Power of Visual Feedback

Our test subject, the Robotis Open Manipulator/X arm, is an exemplary model of this technology in action. We've configured it to control four of its six servos based on visual input and detailed instructions formatted for GPT-4's understanding. This system allows the robot to "see" and adapt, much like a human would. It's not just about following commands; it's about interacting intelligently with the environment.

Here's what's particularly exciting:

- Real-Time Adaptation: As the camera captures new images, GPT-4 updates its strategy, ensuring the robot's actions are precise and responsive.

- Complex Decision-Making: The AI's dialogue commentary offers a peek into its thought process, showcasing the sophisticated algorithms at work.

- Manual Intervention: For elements like the gripper, manual control adds a personal touch to the robot's movements, blending human intuition with AI efficiency.

Activity Log

This is the activity log and the associated images for each frame with Synthiam ARC and the GPT Kinematics robot skill. A goal is given by the "user", and the GPT responds with new servo positions. A new image of the robot arm position is captured and submitted to the GPT. As you can see by this log, the process is repeated until the goal is achieved.
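The GPT replies in the log are either servo positions in the form `v11: 175, v12: 185, ...`, free-text commentary, or both. As a rough sketch of how such a reply could be split into positions and commentary, assuming the reply text follows exactly the format shown in the log (the `parse_reply` helper is hypothetical and not part of the robot skill):

```python
import re
from typing import Dict, Tuple

# Matches one "v11: 175" style pair; the port naming is taken from the log.
POSITION_RE = re.compile(r"\b(v\d+)\s*:\s*(\d+)")


def parse_reply(reply: str) -> Tuple[Dict[str, int], str]:
    """Split a model reply into servo positions and leftover commentary."""
    positions: Dict[str, int] = {}
    commentary = []
    for line in reply.splitlines():
        pairs = POSITION_RE.findall(line)
        # A position line contains nothing except pairs, commas, and spaces.
        if pairs and POSITION_RE.sub("", line).strip(" ,") == "":
            positions.update({port: int(angle) for port, angle in pairs})
        elif line.strip():
            commentary.append(line.strip())
    return positions, " ".join(commentary)
```

A reply with no positions, such as the one announcing that the end effector has reached the remote control, comes back with an empty dictionary, which a caller could treat as the signal to stop moving and wait for the next goal.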

Image

User The goal is to move the end effector to the remote control.

GPT v11: 175, v12: 185, v13: 185, v14: 185

Image

GPT v11: 170, v12: 190, v13: 190, v14: 190

Image

GPT v11: 165, v12: 195, v13: 195, v14: 195

Image

GPT The end effector appears to have reached the remote control. Would you like to attempt a different movement or have any other requests for the robot arm?

User Let’s pick up the remote and place it opposite from where it was. The remote is in the gripper now.

GPT v11: 160, v12: 180, v13: 180, v14: 180

Image

GPT v11: 165, v12: 170, v13: 170, v14: 170

Image

GPT v11: 170, v12: 160, v13: 160, v14: 160

Image

GPT v11: 175, v12: 150, v13: 150, v14: 150

Image

GPT v11: 180, v12: 140, v13: 140, v14: 140

Image

GPT v11: 180, v12: 130, v13: 130, v14: 130

Image

GPT v11: 180, v12: 120, v13: 120, v14: 120

Image

GPT v11: 180, v12: 110, v13: 110, v14: 110

Image

GPT v11: 180, v12: 100, v13: 100, v14: 100

Image

GPT It appears that the end effector has reached close to the table surface. To complete the placement of the remote control, you might need to open the gripper. Would you like to proceed with that or adjust the arm's position further?

User Let’s place the remote back to where we picked it up.

GPT v11: 180, v12: 130, v13: 130, v14: 130

Image

GPT v11: 180, v12: 170, v13: 170, v14: 170

Image

GPT v11: 175, v12: 180, v13: 180, v14: 180

Image

GPT v11: 170, v12: 190, v13: 190, v14: 190

Image

GPT v11: 165, v12: 200, v13: 200, v14: 200

Image

GPT The arm is positioned over the table, but the remote needs to be lowered to the surface. Please adjust the angles for precise placement.

v11: 165, v12: 180, v13: 180, v14: 180

Image

GPT The end effector is positioned above the original location of the remote.

v11: 170, v12: 190, v13: 190, v14: 190

Open the gripper and set it open if a separate servo or command controls it.

Very cool. I look forward to watching the progress.

Hey @athena can I use this feature to tell my robot to get a beer from the fridge and bring it to me?

Yes, you can use Synthiam ARC's capabilities to program a robot to retrieve a beer from the fridge and bring it to you. However, this task involves several complex steps that require a combination of different robot skills, hardware components, and programming logic. Here's a high-level overview of how you might approach this task:

Robot Hardware: Ensure your robot has the necessary hardware, such as a mobile base for navigation, an arm with a gripper for picking up objects, and sensors for obstacle avoidance.

Movement and Navigation: Use a Movement Panel robot skill to control the robot's locomotion. You may need to integrate mapping and navigation skills to help the robot find its way to the fridge and back to you while avoiding obstacles.

Manipulator Control: Use the Inverse Kinematic Arm robot skill to control the robot's arm and gripper. This skill will allow you to calculate the necessary joint angles to reach into the fridge and grasp the beer can or bottle.

Vision and Object Recognition: If you want the robot to visually identify the beer, you can use the Camera Device robot skill with object recognition capabilities to locate and identify the beer can or bottle in the fridge.

Task Sequencing: Create a script or use Blockly to sequence the tasks the robot must perform, such as navigating to the fridge, opening the door, identifying and picking up the beer, closing the door, and returning to you.

Human-Robot Interaction: If you want to initiate the beer retrieval with a voice command, integrate the Speech Recognition robot skill to listen for your command and start the task.

Testing and Safety: Thoroughly test the robot's performance in a controlled environment to ensure it can safely and reliably complete the task. Consider adding safety features to prevent accidents.

Keep in mind that this is a non-trivial project that requires careful planning, programming, and testing. Additionally, you'll need to ensure that the robot's hardware is capable of performing the physical tasks involved, such as opening the fridge door and handling a beer can or bottle without dropping or crushing it.

If you're ready to take on this challenge, Synthiam ARC provides the tools and flexibility to create custom robot behaviors and integrate various robot skills to accomplish complex tasks like this one.

I am a customer support GPT-powered AI and still learning. If you have doubts about my response, please use the support documentation to verify my answer.

@Nink, Athena is unaware that this robot skill exists because it is not yet public. So, she'll have difficulty comprehending the question given that she doesn't have context about this Synthiam ARC robot skill.

WOW!

I guess all these robots that people are building with arms can finally take advantage of them.

The idea of navigating with just a camera is what interests me the most.

You certainly have come a long way from the first ARC!

lol

Roll On!

Interesting. I love that you're working on this.