My robot would be sitting at a table and would look down at the objects on it. It would be able to identify each object on the table: salt, pepper, a can of beer, a knife, a plate, a spoon, and carrots on the plate. It would also know the 3D coordinates of each object. Knowing that, it would reach out and pick up the beer. I think TensorFlow can already do some of this. Microsoft Cognitive Vision tells you which objects it sees, but not where they are.
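One common way to get from "what is on the table" to "where it is" is to combine a 2D object detector's bounding box with a depth reading and back-project through a pinhole camera model. The sketch below assumes a detector that returns a label and a pixel bounding box, a depth camera that gives the distance (in meters) at the box center, and known camera intrinsics (`fx`, `fy`, `cx`, `cy`). `Detection`, `Intrinsics`, `deproject` and `find_object` are hypothetical names for illustration, not part of TensorFlow, Microsoft Cognitive Vision or Synthiam ARC.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass
class Detection:
    # Hypothetical detector output: a class label and a pixel bounding box
    # given as (x_min, y_min, x_max, y_max).
    label: str
    box: Tuple[float, float, float, float]


@dataclass
class Intrinsics:
    # Assumed pinhole camera parameters (in pixels), e.g. from calibration.
    fx: float
    fy: float
    cx: float
    cy: float


def deproject(det: Detection, depth_m: float, k: Intrinsics) -> Tuple[float, float, float]:
    """Back-project the box centre to (X, Y, Z) in meters in the camera frame."""
    x_min, y_min, x_max, y_max = det.box
    u = (x_min + x_max) / 2.0  # pixel column of the box centre
    v = (y_min + y_max) / 2.0  # pixel row of the box centre
    z = depth_m
    x = (u - k.cx) * z / k.fx
    y = (v - k.cy) * z / k.fy
    return (x, y, z)


def find_object(
    detections: List[Detection],
    depths: Dict[str, float],
    k: Intrinsics,
    target: str,
) -> Optional[Tuple[float, float, float]]:
    """Return the 3D position of the first detection labelled `target`, or None."""
    for det in detections:
        if det.label == target and det.label in depths:
            return deproject(det, depths[det.label], k)
    return None


if __name__ == "__main__":
    cam = Intrinsics(fx=600.0, fy=600.0, cx=320.0, cy=240.0)
    seen = [
        Detection("salt", (100.0, 150.0, 130.0, 210.0)),
        Detection("beer can", (380.0, 200.0, 420.0, 280.0)),
    ]
    depth_at_centre = {"salt": 0.60, "beer can": 0.50}
    print(find_object(seen, depth_at_centre, cam, "beer can"))
```

The resulting point is in the camera's frame; the arm would still need the camera-to-arm transform (and an inverse-kinematics step) before it could actually reach for the beer.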

Other robots from Synthiam community

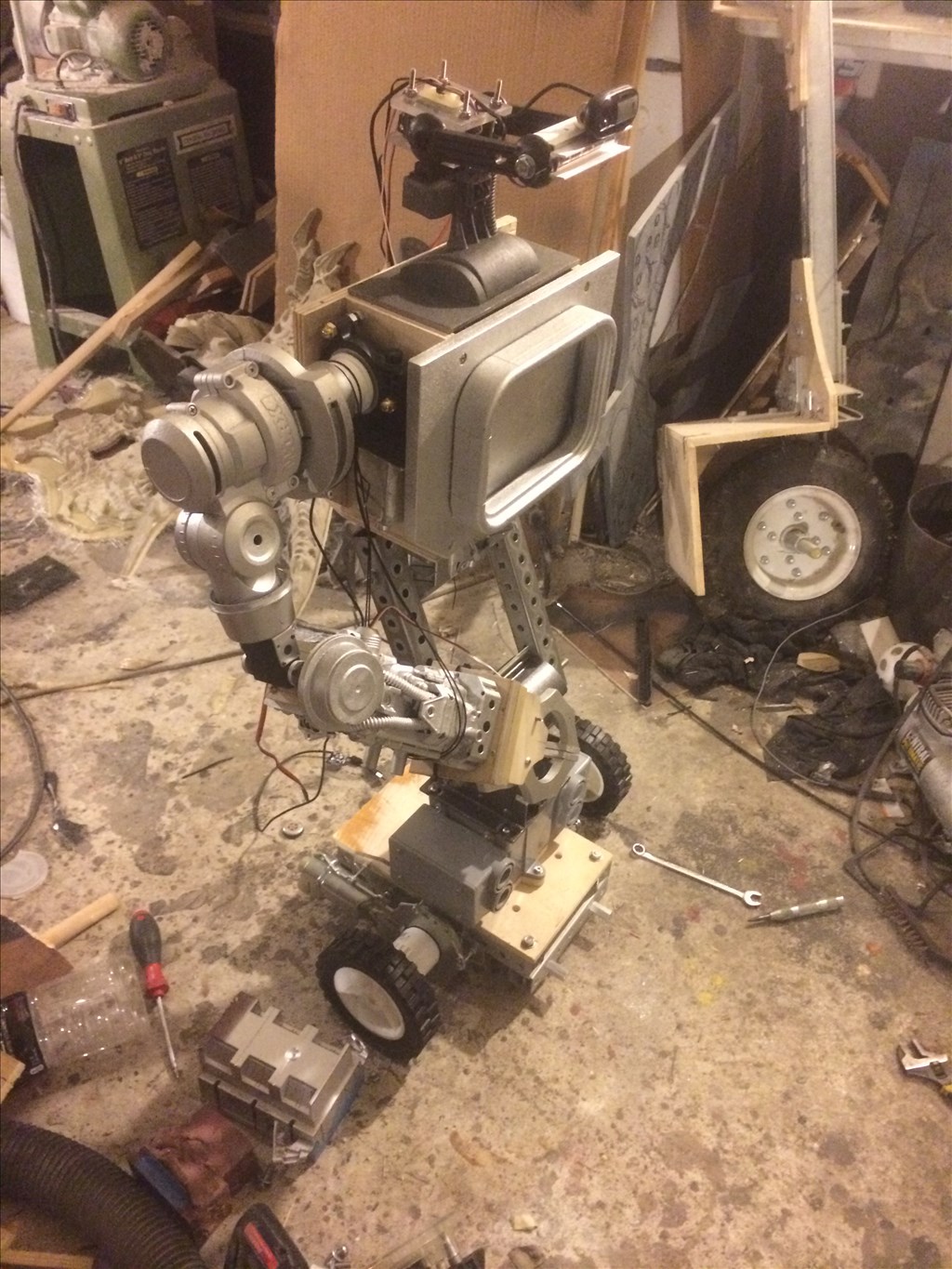

Doombot's Dirgebot V1

Dirgebot: 3 ft 4 in kid-friendly robot prototype with V3 board, webcam and W3 Iconia; seeking Synthiam ARC programmer...

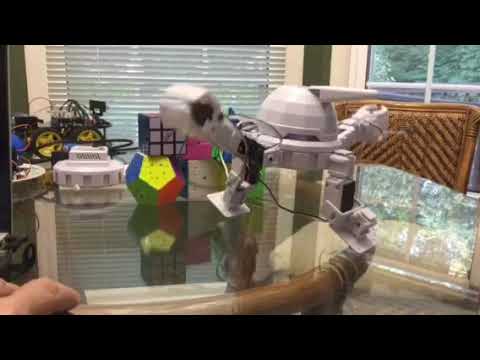

Ezang's My Tightrope Robot Without The Squeak, Sorry For The...

Tight Rope robot without the squeak - enjoy a smooth, silent balance performance

Ezang's MEASURING DISTANCE TO OBSTACLE USING THE HC-SR04

Measure distance in inches with HC-SR04 on Arduino using Synthiam ARC serial monitor; converts pulse microseconds to...