
Hey everyone. It’s the time of year I start hosting live hacks. I’d like to know your thoughts on subjects to cover. I have a list below and will add to it with your feedback. What are your thoughts?

Hack subjects:

- SSC-32

- servo pcb control hbridge

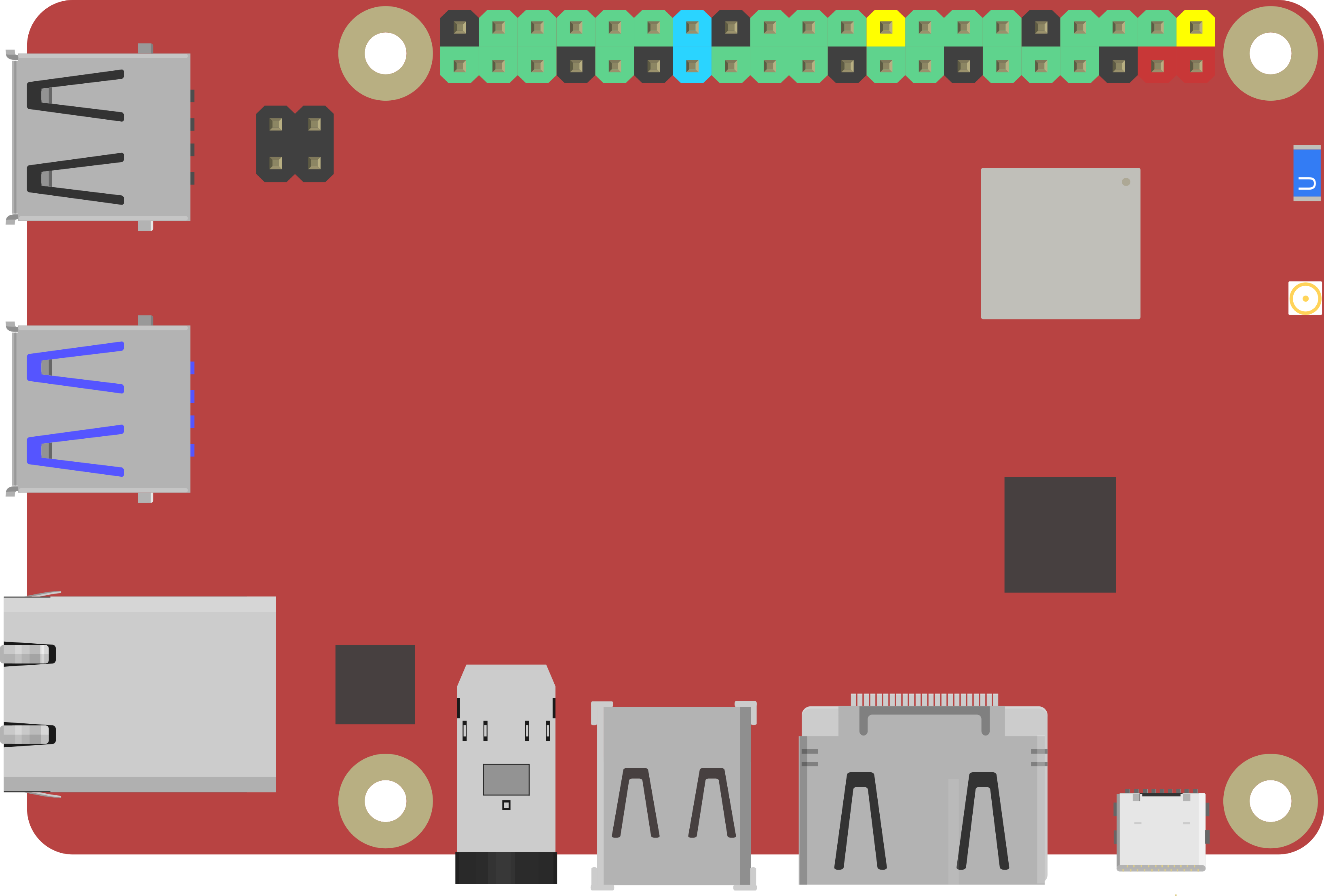

- rock pi x

- Servo Camera server with unity

- navigation/slam discussion (what sensor options are available, what do people want out of navigation, etc)

- exosphere telepresence option

- making a robot skill

- making a movement panel

- tensor flow & yolo object detection

- IPS with Glyph

- intel realsense tracking (T265)

- neat-o botvac lidar hack

- databot review


The Roombas are heavy and have good-traction wheels and encoders. So you experts are saying that even if I buy one of the cheaper 360° LiDAR systems, it won't really be able to make simple maps that can be stored on a PC like a LattePanda? I'm sure they come with code samples for Arduino or Python, but those are slower for me to learn than Synthiam ARC samples. Are they only used for obstacle avoidance? I sure hope DJ does get something that maps nicely; otherwise I'll just keep using sonar.
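To make the "simple map" question concrete, here is a minimal sketch of how one 360° scan could be dropped into a grid that a PC can save. It assumes the robot's pose (x, y, heading) is already known, for example from the wheel encoders, and that the LiDAR reports (angle, distance) pairs; the function name and parameters are hypothetical, not an ARC or vendor API. It only marks the cells where returns land (no free-space ray tracing and no pose correction), so it is not SLAM; it just shows the basic geometry.

```python
import math

def scan_to_grid(scan, pose, size=20, cell_m=0.1):
    """Mark the cells hit by LiDAR returns in a size x size grid (hypothetical helper).

    scan: list of (angle_deg, distance_m) pairs, angles relative to the robot's heading.
    pose: (x_m, y_m, heading_deg) of the robot; the grid origin is the map's corner.
    Returns a list of rows: 1 = obstacle seen, 0 = nothing recorded (no ray tracing here).
    """
    grid = [[0] * size for _ in range(size)]
    x0, y0, heading = pose
    for angle_deg, dist in scan:
        if dist <= 0:
            continue  # many sensors report 0 for "no return"
        a = math.radians(heading + angle_deg)
        hit_x = x0 + dist * math.cos(a)
        hit_y = y0 + dist * math.sin(a)
        col = math.floor(hit_x / cell_m)  # floor, so negative coordinates fall off the grid
        row = math.floor(hit_y / cell_m)
        if 0 <= row < size and 0 <= col < size:
            grid[row][col] = 1
    return grid
```

Repeating this for each scan while the robot drives builds up a rough map, but any encoder drift smears the walls; fixing that drift is exactly what a SLAM algorithm adds.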

@DJ: I was thinking about the IPS idea and came across a cheap way of doing it called "ArUco marker navigation". As I understand it, it can be used with single or multiple markers, and the markers give x, y, z coordinates. Take a look here: https://www.youtube.com/watch?v=JuyzlFzVbbE But I am still confident that the RealSense is the best option for navigation. Let me know what you think, guys.

Yeah, the glyph markers can be used right now. The camera robot skill actually provides the rotation (in degrees) of the detected glyph, so it would be very easy for you to make that happen. You'd never need to "guess" the direction of the robot because it would always be known. Some simple JavaScript would make that work easily.
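As a rough illustration of that math (written in Python here rather than ARC JavaScript, and not using any ARC API), this sketch converts one glyph detection from a fixed camera looking straight down at the floor into room coordinates and a heading. The scale `cm_per_px`, the image-origin convention, and the assumption that the reported rotation is counter-clockwise from the image's "right" are all hypothetical; check them against what the camera skill actually reports.

```python
def glyph_to_room(px, py, rotation_deg, image_height, cm_per_px):
    """Convert a glyph seen by a fixed, downward-facing camera into room coordinates.

    px, py: glyph centre in image pixels (origin top-left, y grows downward).
    rotation_deg: glyph rotation, assumed counter-clockwise from the image's +x axis.
    cm_per_px: assumed constant floor scale (camera mounted square to the floor).
    Returns (x_cm, y_cm, heading_deg), with the room origin at the image's bottom-left.
    """
    x_cm = px * cm_per_px
    y_cm = (image_height - py) * cm_per_px  # flip so y grows "up" the room
    heading_deg = rotation_deg % 360        # normalise to [0, 360)
    return x_cm, y_cm, heading_deg
```

Because the heading comes straight from the glyph's rotation, the robot's direction is measured every frame instead of being estimated from wheel odometry.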

You'd be limited in range with the camera and a glyph, however, mainly because the farther away you get, the larger the glyph needs to be. In a small room at home, it wouldn't have to be huge. But it would be interesting for testing nonetheless.
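The range limit follows from the pinhole camera model: a glyph of physical size S at distance D appears roughly f·S/D pixels wide, where f is the focal length in pixels. So the glyph must grow linearly with distance to stay above whatever minimum pixel size the detector needs. The sketch below assumes a horizontal field of view and a minimum detectable width `min_glyph_px`; both are placeholders, since the glyph detector's real threshold isn't stated here.

```python
import math

def focal_length_px(image_width_px, hfov_deg):
    """Pinhole focal length in pixels from image width and horizontal field of view."""
    return (image_width_px / 2) / math.tan(math.radians(hfov_deg) / 2)

def min_glyph_size_cm(distance_cm, image_width_px, hfov_deg, min_glyph_px):
    """Smallest glyph (cm) that still spans min_glyph_px pixels at distance_cm.

    min_glyph_px is an assumed detection threshold, not a documented value.
    """
    f = focal_length_px(image_width_px, hfov_deg)
    return distance_cm * min_glyph_px / f
```

For example, with a 640 px wide image and a 90° field of view (f ≈ 320 px), a glyph that must span 40 px needs to be about 40 cm wide at 3.2 m, and twice that at 6.4 m.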

Oh, I should also add that glyph IPS is how two of Synthiam's Exosphere robots work. The robots have glyphs on top of them so the system knows where they are and when a task has been accomplished. It then uses that glyph to guide the robot to a random starting area for the next user.
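The exact Exosphere logic isn't described here, so the following is only a hypothetical sketch of the idea: pick a random target inside a start area, then use the glyph-derived pose (x, y, heading) to compute how far to turn and drive. The function names, the turn-left-is-positive convention, and the rectangular start area are all assumptions.

```python
import math
import random

def drive_command(pose, target):
    """Return (turn_deg, distance) to go from pose=(x, y, heading_deg) to target=(x, y).

    turn_deg is normalised to [-180, 180); positive means turn counter-clockwise (left).
    """
    x, y, heading = pose
    tx, ty = target
    dx, dy = tx - x, ty - y
    bearing = math.degrees(math.atan2(dy, dx))    # direction to the target
    turn = (bearing - heading + 180) % 360 - 180  # shortest turn
    return turn, math.hypot(dx, dy)

def random_start(area, rng=random):
    """Pick a random (x, y) inside area = (x_min, y_min, x_max, y_max)."""
    x_min, y_min, x_max, y_max = area
    return rng.uniform(x_min, x_max), rng.uniform(y_min, y_max)
```

In practice you would re-read the glyph pose after each short move and recompute the command, since a single turn-then-drive step accumulates error.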

The ArUco markers give all 3 axes, and the camera must be calibrated. This way the algorithm always knows the distance and location relative to the center of the camera. https://www.youtube.com/watch?v=BeI7DZxPadw
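With a calibrated camera, marker pose estimation (for example OpenCV's `solvePnP`) returns a rotation vector `rvec` and translation `tvec` describing the marker in the camera's frame. To know where the camera (and so the robot) is relative to the marker, invert that pose: the camera position in marker coordinates is −Rᵀt, and the straight-line distance is |t|. This dependency-free sketch implements the Rodrigues conversion by hand; it should match what `cv2.Rodrigues` computes, but the helper names here are illustrative only.

```python
import math

def rodrigues(rvec):
    """Rotation vector (axis * angle, radians) -> 3x3 rotation matrix as nested lists."""
    theta = math.sqrt(sum(c * c for c in rvec))
    if theta < 1e-12:
        return [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    kx, ky, kz = (c / theta for c in rvec)
    K = [[0.0, -kz, ky], [kz, 0.0, -kx], [-ky, kx, 0.0]]  # cross-product matrix of the axis
    K2 = [[sum(K[i][m] * K[m][j] for m in range(3)) for j in range(3)] for i in range(3)]
    s, c = math.sin(theta), 1.0 - math.cos(theta)
    # R = I + sin(theta) * K + (1 - cos(theta)) * K^2
    return [[(1.0 if i == j else 0.0) + s * K[i][j] + c * K2[i][j] for j in range(3)]
            for i in range(3)]

def camera_in_marker_frame(rvec, tvec):
    """Invert the marker pose to get the camera position in marker coordinates.

    Returns (position, distance) where position = -R^T * t and distance = |t|.
    """
    R = rodrigues(rvec)
    position = [-sum(R[j][i] * tvec[j] for j in range(3)) for i in range(3)]
    distance = math.sqrt(sum(t * t for t in tvec))
    return position, distance
```

If the marker's own position and orientation in the room are known, one more transform takes this camera position into room coordinates, which is what turns a single marker into an indoor positioning fix.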

Are you asking for ArUco marker detection rather than using the existing glyphs for this purpose?

There is a little startup in Calgary called TakeMeTuit that does location tracking. I met with the CEO and his team; they track location using speakers and the microphone in a mobile phone. They can get accuracy within a few centimetres in 3D, which is much better than Bluetooth or indoor magnetic field detection. I have been meaning to talk to them about using their tech to track robot locations, but if someone wants me to set up a meeting, I can.
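TakeMeTuit's actual method isn't described here, so this is only a textbook sketch of how speaker-based positioning can work in principle: convert each speaker-to-microphone time of flight into a distance, then trilaterate from four speakers at known positions. It assumes synchronised clocks and a fixed speed of sound; real systems often use time-difference-of-arrival instead, because a phone's clock isn't synchronised with the speakers.

```python
SPEED_OF_SOUND = 343.0  # m/s in air at about 20 °C (assumed constant)

def _det3(M):
    """Determinant of a 3x3 matrix."""
    return (M[0][0] * (M[1][1] * M[2][2] - M[1][2] * M[2][1])
            - M[0][1] * (M[1][0] * M[2][2] - M[1][2] * M[2][0])
            + M[0][2] * (M[1][0] * M[2][1] - M[1][1] * M[2][0]))

def _solve3(A, b):
    """Solve a 3x3 linear system A x = b with Cramer's rule."""
    d = _det3(A)
    if abs(d) < 1e-12:
        raise ValueError("speakers are coplanar or degenerate")
    x = []
    for col in range(3):
        M = [row[:] for row in A]
        for r in range(3):
            M[r][col] = b[r]  # replace one column with b
        x.append(_det3(M) / d)
    return x

def trilaterate(speakers, times_s):
    """Locate a microphone from time of flight to 4 speakers at known (x, y, z) metres.

    Subtracting the first sphere equation |p - s_0|^2 = d_0^2 from the others gives
    the linear system 2 (s_i - s_0) . p = d_0^2 - d_i^2 + |s_i|^2 - |s_0|^2.
    """
    dists = [t * SPEED_OF_SOUND for t in times_s]
    s0, d0 = speakers[0], dists[0]
    A, b = [], []
    for si, di in zip(speakers[1:4], dists[1:4]):
        A.append([2 * (si[k] - s0[k]) for k in range(3)])
        b.append(d0 ** 2 - di ** 2 + sum(c * c for c in si) - sum(c * c for c in s0))
    return _solve3(A, b)
```

At 343 m/s, a 1 cm error corresponds to only about 29 µs of timing error, which shows why centimetre-level acoustic tracking depends on very precise timing.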