vincent.j

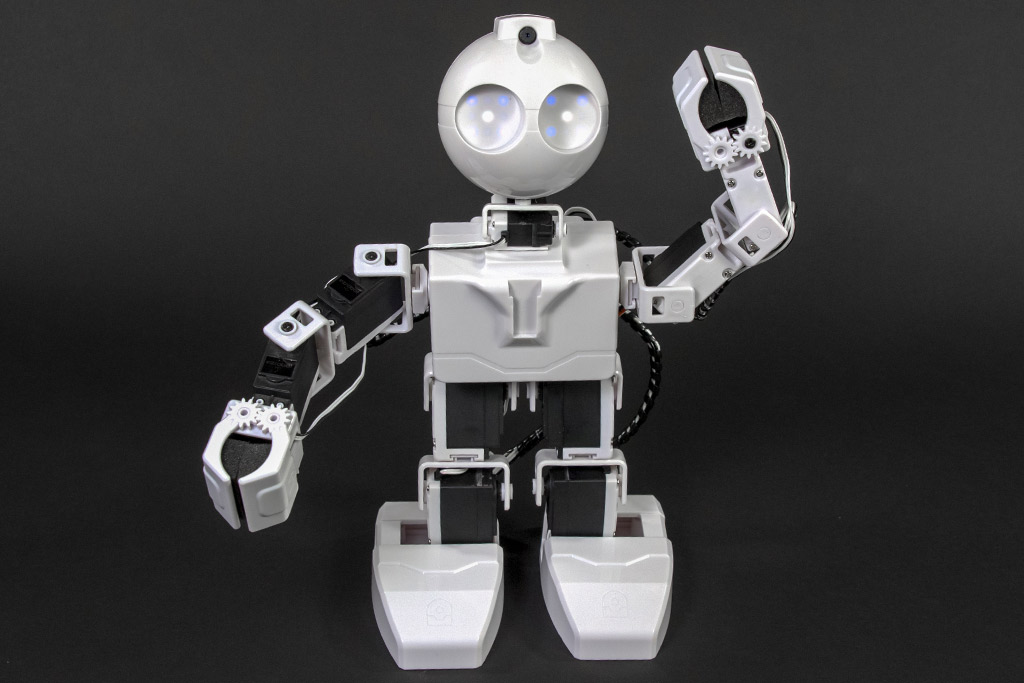

Would anyone have suggestions on the best way to go about interacting with the JD robot via flashcards and/or objects? The aim is for the user to give some sort of meaningful visual response that JD can interpret and process.

QR codes are nice, but they need to be quite large, particularly if the robot is further away. The only way I can think of to keep the codes small at the same distance is to use a higher resolution camera, but I'm unaware of any such cameras that are also compatible with the EZ-B v4. Glyphs also work, but I think there are only 4 of them. If someone knows of a way to add more, that'd be great. Color detection can be noisy, especially when background colors interfere. Object recognition is quite tedious and inconsistent, as the object often needs to be angled and oriented a certain way to be detected. Providing ARC with a set of training images would be great, but I don't know of any way to do this or if it's even possible.
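To put rough numbers on the QR size versus distance trade-off, here is a minimal sketch assuming a simple pinhole camera model. The field of view, the resolution, and the 2 pixels per module threshold are all assumptions for illustration, not measured values for the EZ-B v4 camera or limits documented by ARC.

```python
import math


def focal_length_px(image_width_px, hfov_deg):
    """Focal length in pixels for a pinhole camera with the given horizontal FOV."""
    return (image_width_px / 2) / math.tan(math.radians(hfov_deg) / 2)


def pixels_per_module(code_width_m, modules, distance_m, image_width_px, hfov_deg):
    """Approximate width in pixels of one QR module seen head-on at a distance."""
    module_m = code_width_m / modules
    return focal_length_px(image_width_px, hfov_deg) * module_m / distance_m


def min_code_width_m(modules, distance_m, image_width_px, hfov_deg,
                     min_px_per_module=2.0):
    """Smallest printed code width that still gives each module enough pixels.

    min_px_per_module is an assumed decoding threshold, not a documented one.
    """
    f_px = focal_length_px(image_width_px, hfov_deg)
    return min_px_per_module * modules * distance_m / f_px


if __name__ == "__main__":
    # Hypothetical setup: 21x21 module code, 1 m away, 90 degree horizontal FOV.
    for width_px in (640, 1280):
        size = min_code_width_m(21, 1.0, width_px, 90)
        print(f"{width_px} px wide image: code needs to be about {size:.3f} m wide")
```

In this model the required code width scales linearly with distance and inversely with horizontal resolution, so doubling the resolution roughly halves the card size at the same distance.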

Each has its pros and cons, but I'm keen to hear what your thoughts are. Are there methods I haven't thought of, or better ways to go about detecting responses?


Use the object training. If you're having challenges with angles and such for recognition, the machine learning training isn't being performed correctly. Machine learning of all types requires human training to teach the machine. The cards you use will have to be taught at a variety of angles. This means slightly angling the object as it's being trained.

You will find that, like anything, practice will overcome the challenges you face.

Lastly, you have already discovered "programming with pictures" because I see the control selected in your question. That's a proof of concept which demonstrates what you're asking about. However, the challenge with that control is that the direction images are too similar to tell apart once a card is rotated. A more reliable implementation of that control would use different cue cards for each motion, with a higher degree of visual difference between them.
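To show why rotated direction cards get confused, here is a toy sketch. It is not how ARC's object tracking works; the 5x5 card patterns and function names are hypothetical and only illustrate the idea. It compares two cards at every 90 degree rotation and reports the smallest number of differing cells.

```python
def parse(rows):
    """Turn rows of '#' and '.' into a grid of 1s and 0s."""
    return [[1 if ch == "#" else 0 for ch in row] for row in rows]


def rotate90(grid):
    """Rotate a square grid 90 degrees clockwise."""
    return [list(row) for row in zip(*grid[::-1])]


def hamming(a, b):
    """Count the cells where two same-sized grids differ."""
    return sum(x != y for ra, rb in zip(a, b) for x, y in zip(ra, rb))


def min_rotated_distance(a, b):
    """Smallest difference between a and b over the four 90 degree rotations of b."""
    best = hamming(a, b)
    rotated = b
    for _ in range(3):
        rotated = rotate90(rotated)
        best = min(best, hamming(a, rotated))
    return best


# Hypothetical cue cards: two arrows and one unrelated shape.
ARROW_UP = parse(["..#..", ".###.", "#.#.#", "..#..", "..#.."])
ARROW_RIGHT = parse(["..#..", "...#.", "#####", "...#.", "..#.."])
RING = parse(["#####", "#...#", "#...#", "#...#", "#####"])

if __name__ == "__main__":
    # A distance of 0 means the cards are identical once one of them is rotated.
    print("up vs right:", min_rotated_distance(ARROW_UP, ARROW_RIGHT))
    print("up vs ring: ", min_rotated_distance(ARROW_UP, RING))
```

The two arrows are the same picture at different rotations, so a detector trained to tolerate tilted cards has nothing left to tell them apart. Cards whose shapes differ at every rotation avoid that problem.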

Here's the link to programming with pictures: https://synthiam.com/Software/Manual/Programming-with-Pictures-16024

I'll publish the source code tonight when my plane lands, and anyone can modify the code to use better images.

PS: thank you for selecting skill controls relevant to your question. It really helps keep things organized.

Thanks for this. I guess I'll have to train myself to train the robot.

Using cue cards like the programming with pictures plugin does is basically what I'm going for. I'll make a note to use visually different cards for better results.

Thank you for the link and yes please, the source code would be awesome.

You're welcome, I'm glad it helped.

Has the source code been uploaded yet? The following link doesn't seem to be working - https://github.com/synthiam/Behavior_Control_Programming_with_Pictures