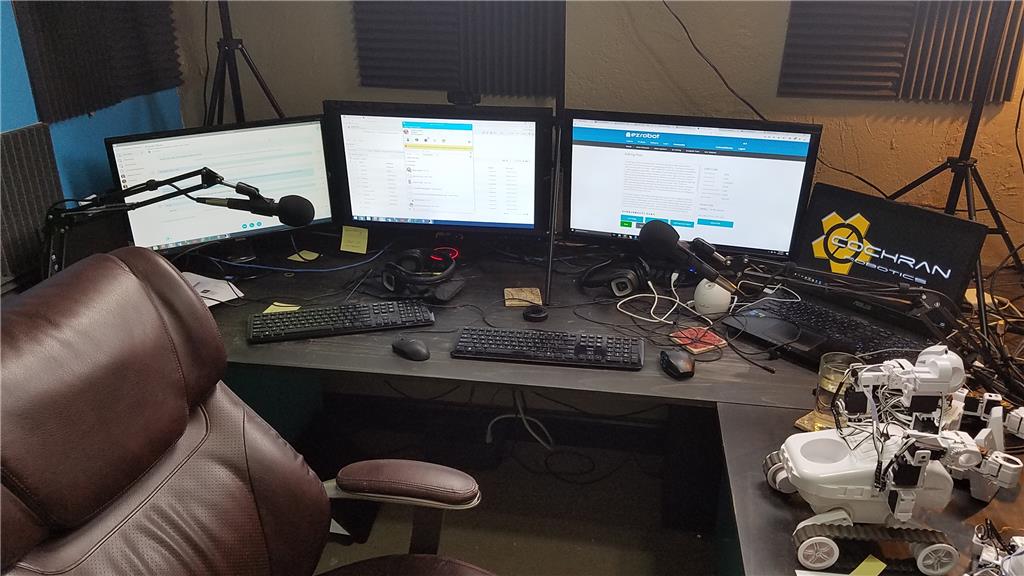

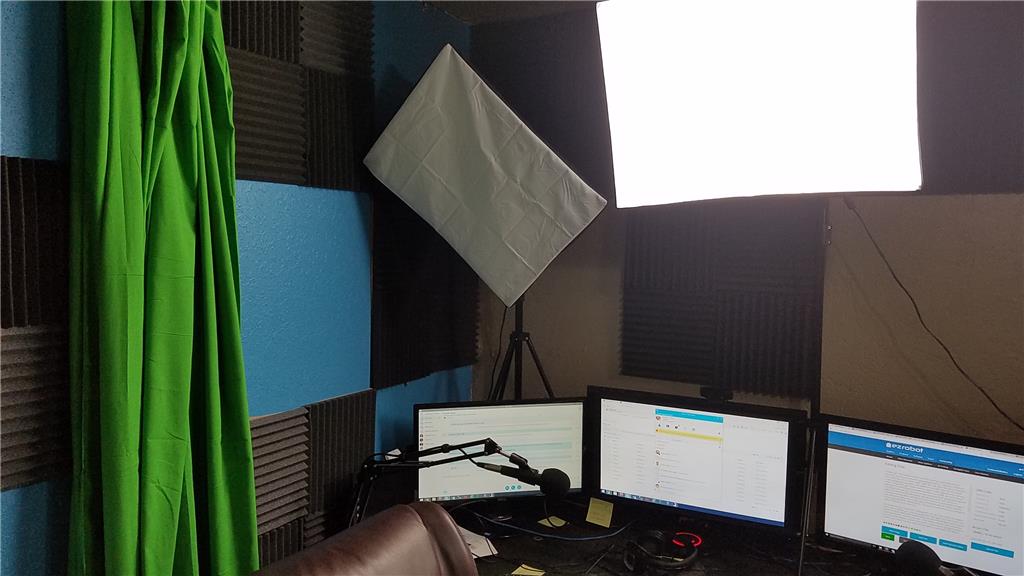

A while ago I mentioned that I would be starting a video podcast that functions as technical classes for homeschool students. I also mentioned that I would go all in if I did it. Well, I went all in. My office has been converted into a video podcaster's paradise. Last weekend I got the mixer, compressor, gate, audio interface, green screen, dynamic mics, cameras, lighting and sound dispersion panels installed in my studio. My hosts (Tanner and Stori) both have a JD now and are learning about them by going through the LMS curriculum that was developed. We will start some "test" shows on Thursday evenings, which will help the hosts get comfortable in front of the camera and with the robots and the technology behind the video podcast. The podcasts will be live, so you will get to see all of the fun if you want to.

On another project, I have been working with a group in the middle of nowhere, Texas, that is setting up a ranch for people who have aged out of the Texas foster care system. Next week I will be setting up their firewall, NAS, domain server, some workstations, the network and such. They will be using the class mentioned above to teach their students about robotics on the EZ-Robot platform. I want to do a show from the ranch, but the logistics could make that difficult.

I will post the links to the video podcast streams later. We will be streaming on YouTube and Twitch, and the RTMP feed will also be available to view directly. We also have a Mumble room and an IRC channel that I will publish; these let you participate in the discussion and in the show. Along with that, 662-4ROBOT1 is a direct phone number into the show, and Skype is also available. If you want to check out our early tests, those links will be posted then too. The official start is on May 6th, 2017, when we will be broadcasting from our booth at a homeschool show in Oklahoma City.

I also want to do a show on Saturdays on more general topics, all relating to technology. Nicholas (my son) will be teaching an Intro to Programming class. Next semester we will move up to intermediate programming and robotics classes.

SM@RT Club is the Patreon support page for the classes. I still have some work to do on it, but it is there at least.

I guess I still need to clean up some cables...

@David... There are absolutely no issues with volume in this or any of your other videos... Not to state the obvious, but PCs and mobile devices still come with volume adjustment last time I checked... I just don't understand... If you can't hear something, turn up the volume?...

Hey, I am just watching the newest episode right now and must agree, the volume is all good... plus it is a great show, Doombot is awesome and the Latte Panda review is revealing!

I had trouble with some previous shows, and yes, I had them running on my phone with the volume all the way up... but it seems to be fixed now, so it's all good to go!

Hi David, this is your off-site Production Critic... LOL... Sound seems good, video is good, production is good... Keep doing what you are doing! Don't change anything!

Thank you guys for the feedback. After the conference I went to a few weeks back, I set up everything and spent some more time on getting the volume louder. This is tricky because there are volume settings in many places: in applications, on YouTube, on the device you are watching on, on the amp that feeds the audio interface, on the audio interface itself, and in the app I use to stream and record. There is also a compressor, which affects volume, and the mixer, which has its own compressor and about 4 different volume settings (2 for each device plugged in and 2 for the overall volume). Setting any of these too high can blow out the audio and make it really poor quality, so good sound is a delicate balance of all of these settings. Hopefully the last show was louder and still good quality. It seems to have been.
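To show why the balance matters, here is a minimal Python sketch of "gain staging" under simplifying assumptions: each volume control is modeled as a gain in dB followed by a hard clip at full scale (real compressors, mixers and apps behave less abruptly). The stage names and dB values are hypothetical, not the actual studio settings. Both chains add up to the same +9 dB overall, but only the one that keeps every stage below full scale stays clean.

```python
import math


def db_to_linear(db: float) -> float:
    """Convert a gain in decibels to a linear amplitude multiplier."""
    return 10 ** (db / 20)


def run_chain(samples, stage_gains_db, full_scale=1.0):
    """Apply each gain stage in order, hard-clipping at full scale after every
    stage (a simplified model of a stage running out of headroom).

    Returns the processed samples and how many times a sample was clipped.
    """
    out = list(samples)
    clipped = 0
    for gain_db in stage_gains_db:
        gain = db_to_linear(gain_db)
        stage_out = []
        for s in out:
            v = s * gain
            if abs(v) > full_scale:
                clipped += 1
                v = math.copysign(full_scale, v)
            stage_out.append(v)
        out = stage_out
    return out, clipped


# One cycle of a test tone peaking at -12 dBFS, 48 samples long.
peak = db_to_linear(-12)
tone = [peak * math.sin(2 * math.pi * n / 48) for n in range(48)]

# Hypothetical stage gains in dB, e.g. interface -> streaming app -> master.
# Both chains add up to the same +9 dB overall.
balanced = [3, 3, 3]        # peaks grow -12 -> -9 -> -6 -> -3 dBFS
early_hot = [18, -6, -3]    # first stage pushes peaks to +6 dBFS and clips

balanced_out, clips_balanced = run_chain(tone, balanced)
hot_out, clips_early_hot = run_chain(tone, early_hot)

print("balanced clips:", clips_balanced)
print("early_hot clips:", clips_early_hot)
print("peaks:", max(balanced_out), max(hot_out))
```

In this model the early-hot chain ends up with a lower final peak than the balanced one and with its tops already flattened, which is why turning a later control down cannot repair a stage that was set too high.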

On the background, I tried to pick something that wasn't too busy. When I look at something technical, I start to evaluate it to see how it works or whether it would work, which takes my focus off of where it is meant to be. I chose this background because it isn't technical and is pleasant and calming to me. It doesn't have a lot going on, and I don't think it distracts too much. It also works well with the green screen. If you look around my head and body, you don't notice that it is a green screen. This is because it isn't a flat color or a short-distance image; it has depth, and because of this, people don't even think of it as a green screen. This is a technique I picked up from a podcast that ran for 10 years and had a lot of success. That host also made it clear that it was a green screen and switched it up sometimes. On a couple of shows he used an image that looked like he was in a shack used to sell crack, and the audience appreciated his humor. A lot of what I do is based on this show and its success. Sadly, the last episode of this show has aired and it is no longer being produced. They do have many other shows, some with a green screen and some without, but their success started and grew from this one show. Like them, I will switch it up from time to time. I plan to switch it up on key episodes to celebrate those shows, like show number 10 or 50 or 100 and so on.

@Mickey666maus I want to get back to API.AI to see what can be done with the plugin that DJ wrote. In essence, all the plugin would be doing is taking text and sending it to API.AI (or it could take audio and send it to API.AI to convert to text, but I doubt it). From there, JSON is returned and parsed, placing the results into variables. API.AI lets you do a lot with the text that is sent to it, and you can do a lot with the variables that come back. The plugin would just be the part that takes what is sent to and received from API.AI and makes it usable on both ends. We spent a lot of time in API.AI building things based on the text passed to it, and then deciding what to do based on what it returned. I plan on digging back into this in the future, but I really don't have a timeline right now. Getting the other two shows up and going will probably consume most of my remaining free time until August or so. I will gladly share what we have in API.AI at that point. It is a powerful tool, but you have to understand it to use it effectively.
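As a rough illustration of the "JSON is returned and parsed into variables" step, here is a small Python sketch. It assumes a response shaped like API.AI's v1 query result (`result.resolvedQuery`, `result.action`, `result.parameters`, `result.fulfillment.speech`); the sample response, the action name and the `Api...` variable names are hypothetical and not taken from DJ's plugin, so check the field names against the current API documentation before relying on them.

```python
import json

# Hypothetical sample response, modeled on the API.AI v1 query result shape.
SAMPLE_RESPONSE = """
{
  "result": {
    "resolvedQuery": "What is the forecast for Tuesday?",
    "action": "weather.forecast",
    "parameters": {"date": "2017-05-02", "geo-city": ""},
    "fulfillment": {"speech": "Which city do you want the forecast for?"}
  }
}
"""


def response_to_variables(raw: str) -> dict:
    """Flatten the fields of interest into simple name -> string pairs,
    the way a plugin might expose them as robot script variables."""
    result = json.loads(raw).get("result", {})
    variables = {
        "ApiQuery": result.get("resolvedQuery", ""),
        "ApiAction": result.get("action", ""),
        "ApiSpeech": result.get("fulfillment", {}).get("speech", ""),
    }
    for name, value in result.get("parameters", {}).items():
        # Parameter names such as "geo-city" become "ApiParam_geo_city".
        variables["ApiParam_" + name.replace("-", "_")] = str(value)
    return variables


for name, value in response_to_variables(SAMPLE_RESPONSE).items():
    print(f"${name} = {value!r}")
```

An empty parameter value (here the city) is a useful signal that the agent still needs more information from the user.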

We had ours acting as the initial source of information for things like "What is the forecast for Tuesday?" When people asked a "What is" type question, we would send the request to Wolfram Alpha simply because we wanted the most thorough answer returned. This causes issues with questions that people understand but machines don't. For example, on our trip to Canada last year, DJ pointed out that asking "What is the weather?" caused our engine to give him the definition of weather instead of the forecast. Because it is a valid question and the user wouldn't get the response they expected, it would have to be handled differently. Doing that would have required us to write something that lets the user tell us when a question didn't return the right kind of answer, so that we could then program for it.
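One way to avoid the "definition of weather" problem is to check for specific intents before falling back to the generic "What is" route, and to log questions the user flags as answered wrongly. The Python sketch below shows that ordering; the keyword list, route names and `flag_wrong_answer` helper are all hypothetical, and simple keyword matching is only a stand-in for real intent matching.

```python
import re

# Hypothetical keyword lists for intents that must be checked before the
# generic "What is ..." fallback; a real agent would match intents instead.
INTENT_KEYWORDS = {
    "weather": {"weather", "forecast", "temperature", "rain"},
}

# Questions users flagged as answered the wrong way, kept for later review.
flagged = []


def route(question: str) -> str:
    """Pick a backend: known intents first, then Wolfram Alpha for
    "What is" questions, then API.AI for everything else."""
    text = question.lower().strip()
    words = set(re.findall(r"[a-z']+", text))
    for intent, keywords in INTENT_KEYWORDS.items():
        if words & keywords:
            return intent
    if text.startswith(("what is", "what's")):
        return "wolfram"
    return "api.ai"


def flag_wrong_answer(question: str, expected: str) -> None:
    """Record that a question was routed wrongly so it can be programmed for."""
    flagged.append((question, route(question), expected))


for q in ["What is the weather?", "What is photosynthesis?", "Tell me a joke"]:
    print(q, "->", route(q))
flag_wrong_answer("What is the dew point?", "weather")
```

The flagged list is the "tell us it didn't answer right" feedback: reviewing it shows which keywords or intents are missing.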

Anyway, I am a little behind on API.AI right now but will hopefully be caught up by the end of the summer. Keep up the good work!

Thanks David

@CochranRobotics I totally agree...api.ai is such a powerful engine, it's great to have such a thing to use in our robots! Also the plugin is super simple to install and works like a charm!

You could also take the route of having api.ai return the entity variable results and build the logic in ARC...there are a lot of ways to get stuff done!
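Building the logic on the robot side can be as simple as a dispatch table keyed on the action name that comes back, with the returned entity values passed to each handler. This Python sketch only shows the shape of that logic (in ARC it would be written in ARC's own scripting); the action names and handlers are hypothetical placeholders.

```python
from typing import Callable, Dict

Params = Dict[str, str]


def forecast(params: Params) -> str:
    # Empty strings (unfilled parameters) fall back to defaults.
    city = params.get("geo-city") or "here"
    day = params.get("date") or "today"
    return f"Looking up the forecast for {city} on {day}."


def wave(params: Params) -> str:
    # Placeholder for starting a robot action such as an arm wave.
    return "Waving!"


# Hypothetical action names; they would match whatever actions the agent defines.
HANDLERS: Dict[str, Callable[[Params], str]] = {
    "weather.forecast": forecast,
    "robot.wave": wave,
}


def handle(action: str, params: Params) -> str:
    """Run the handler registered for an action, or a polite fallback."""
    handler = HANDLERS.get(action)
    if handler is None:
        return "Sorry, I don't know how to do that yet."
    return handler(params)


print(handle("weather.forecast", {"geo-city": "Dallas", "date": "Tuesday"}))
print(handle("robot.dance", {}))
```

Adding a new behavior then means adding one handler and one table entry, without touching the parsing code.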

I guess they also changed a bunch of things... One way to solve logic that needs certain parameters to work is to mark those parameters as required, so there will be a prompt for each required parameter! E.g., in the weather example the required parameters would be at least the context plus a geo location! If the geo location is missing, it would then ask the user to define the location first!
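In API.AI the required parameters and their prompts are configured in the agent itself, but the behavior is easy to picture in code. This Python sketch mirrors it client-side: each missing required parameter triggers its prompt, in order, before the action runs. The parameter names, prompts and retry limit are hypothetical, and the scripted answers stand in for a real user.

```python
from typing import Callable, Dict

# Hypothetical required parameters per action, each with the prompt to ask
# when it is missing (mirroring "required" parameters with prompts).
REQUIRED_PARAMS: Dict[str, Dict[str, str]] = {
    "weather.forecast": {
        "date": "For which day?",
        "geo-city": "For which city?",
    },
}


def fill_required(action: str, params: Dict[str, str],
                  ask: Callable[[str], str], max_tries: int = 3) -> Dict[str, str]:
    """Prompt for each missing required parameter, in order, before acting."""
    filled = dict(params)
    for name, prompt in REQUIRED_PARAMS.get(action, {}).items():
        tries = 0
        while not filled.get(name):
            if tries == max_tries:
                raise ValueError(f"no value for required parameter {name!r}")
            filled[name] = ask(prompt).strip()
            tries += 1
    return filled


# Scripted answers stand in for the user so the example runs unattended.
answers = iter(["Oklahoma City"])
asked = []


def scripted_ask(prompt: str) -> str:
    asked.append(prompt)
    return next(answers)


result = fill_required("weather.forecast", {"date": "2017-05-06"}, scripted_ask)
print(asked)
print(result)
```

Because the date was already supplied, only the city prompt is asked; the retry limit keeps an unanswered prompt from looping forever.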

Thank you for your advice, and keep up the show, it's such a great thing... I must say I really enjoy seeing the faces of the people I have been following on this forum for a while!

This is such a great community! Thanks @all

Edit: I read this again and realized you were already way past this, and had taken the cases that could not be handled that way one step further by addressing Wolfram Alpha...

Any previews of what's being discussed on this week's show?

I think I will start with a "Why do I do this show" segment. Then I will go into articles about Microsoft working on porting Windows 10 to ARM processors, possibly by the end of the year. Then I will go over my InMoov build so far and finish off with "How to use the Omron HVC-P within ARC".

There is a request from another InMoov builder for the last topic. I know that you too have this and there has been some other discussion on the forum on this topic.

Also, I think I will give my final thoughts on the Latte Panda before I mount it in my InMoov (and get yours shipped).

Maybe throw out some subjects your viewers can give feedback on for future shows or discussions?