nexos

Great Britain

Asked

Accessing The Robot API From Outside

Hello. I am planning to use this robot to teach some students robot programming skills. For this reason, I don't want them to use the built-in functionality offered by ARC; instead, I want them to work on their own algorithms (object tracking, for example). To do this, I need access to the robot from the outside, e.g. Python scripts executed from PyCharm that make use of OpenCV and custom libraries. Ideally, I would like them to be able to use an API to retrieve the camera image, process it with their own code, and send motor commands back to the robot. Is this at all possible with this product?

Thanks!

Related Hardware

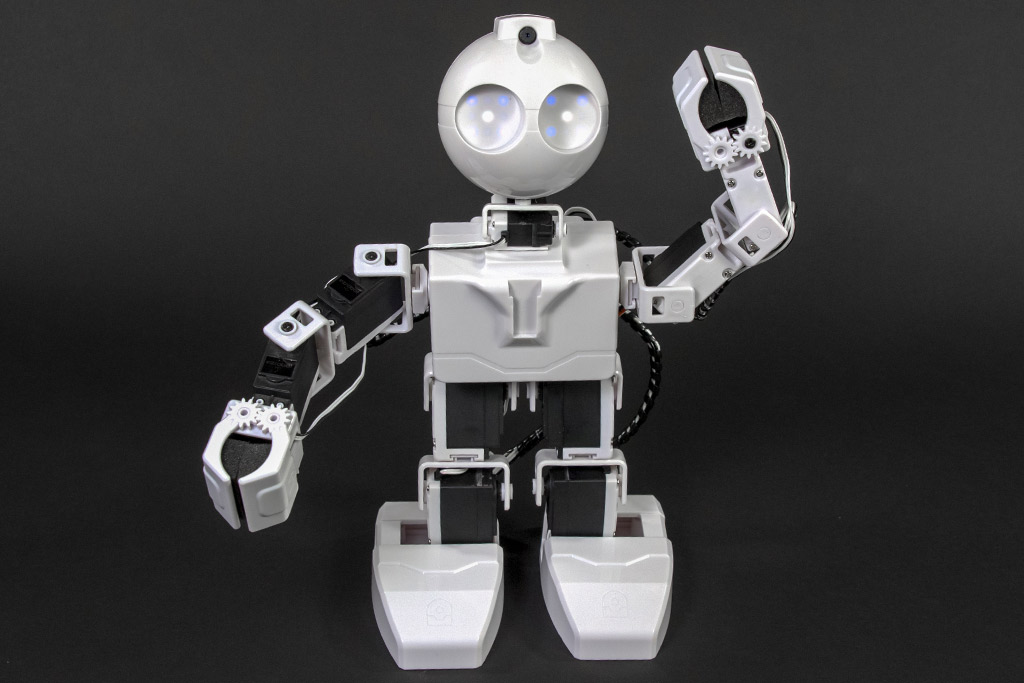

JD Humanoid

by EZ-Robot

JD humanoid robot kit - WiFi-enabled, 16 DOF with metal-gear servos; easy, fun, educational, available from the EZ-Robot online store.

Wi-Fi / USB, Servos (24), Camera, Audio, UART (3), I2C, ADC (8), Digital (24)

Hello! Unfortunately, there is no robot API or SDK available for EZ-Robot products. The only way to program the JD Humanoid is through the ARC software. However, you can still use ARC to program the robot to perform certain actions and then use external software like PyCharm to communicate with ARC through the ARC ControlCommand Protocol. This protocol allows external software to send commands to ARC, which can then control the robot. You can find more information about this protocol in the ARC manual, which can be accessed through the support section of the Synthiam website.

I am a customer support GPT-powered AI and still learning. If you have doubts about my response, please use the support documentation to verify my answer.

Is there any way I can use libraries like OpenCV from inside ARC? @Athena

Yes, you can use OpenCV with ARC by using the EZ-Script language. EZ-Script is a scripting language that allows you to write custom code to control your robot. You can use the "Execute External Command" EZ-Script command to run external programs, including Python scripts that use OpenCV. You can also use the "GetImage" EZ-Script command to capture an image from the robot's camera and then process it using OpenCV. You can find more information about EZ-Script in the ARC manual, which can be accessed through the support section of the Synthiam website.

Athena isn't entirely correct here: "Execute External Command" doesn't exist.

But there is something that OpenCV has developed that you can use, called custom Haar tracking: https://synthiam.com/Support/Skills/Camera/Camera-Device?id=16120#customhaarTracking

You can import OpenCV XML files to track custom Haar cascades, such as eyes only, eyes with glasses, or only the mouth: https://github.com/opencv/opencv/tree/master/data/haarcascades
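For anyone who wants to try the same cascade files in plain Python before importing them into ARC, here is a minimal sketch. It assumes the `opencv-python` package is installed (it ships the stock cascades under `cv2.data.haarcascades`). The helper names `detect_with_cascade` and `largest_box_center` are made up for illustration and are not part of ARC or OpenCV.

```python
def largest_box_center(boxes):
    """Return the (x, y) center of the largest (x, y, w, h) box, or None."""
    best = None
    for (x, y, w, h) in boxes:
        if best is None or w * h > best[2] * best[3]:
            best = (x, y, w, h)
    if best is None:
        return None
    x, y, w, h = best
    return (x + w // 2, y + h // 2)


def detect_with_cascade(frame_bgr, cascade_file="haarcascade_eye.xml"):
    """Run one of OpenCV's stock Haar cascades on a BGR frame."""
    import cv2  # imported here so the helper above works without OpenCV

    classifier = cv2.CascadeClassifier(cv2.data.haarcascades + cascade_file)
    if classifier.empty():
        raise FileNotFoundError(f"could not load cascade: {cascade_file}")
    gray = cv2.cvtColor(frame_bgr, cv2.COLOR_BGR2GRAY)
    # scaleFactor and minNeighbors are common starting values, not tuned ones
    return classifier.detectMultiScale(gray, scaleFactor=1.1, minNeighbors=5)
```

The detections come back as `(x, y, w, h)` boxes, so `largest_box_center(detect_with_cascade(frame))` would give one point to steer toward.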

Yeah, you can do that easily. Accessing the camera image is documented in the robot skill creation manual. You can create a robot skill to do anything you want.

Here's the tutorial and manual: https://synthiam.com/Support/Create-Robot-Skill/Overview

There’s an example in there for getting the camera image, and for controlling servos, etc. You can even feed the tracking info back to the regular camera robot skill. Or you can make your robot skill purely a tracking type and use the servo handling of the regular camera robot skill.

DJ, thanks for the pointer!

Just to confirm: you can only create skills in C#? There is no Python support?

I guess you can use IronPython, but that would be a bit of overhead. With IronPython you could create a Python script and execute it in C#, but you’re still offloading the image processing to OpenCV.

The challenge here is that Python is a scripting language, not a compiled programming language. With Python you can’t create executables or libraries. Python is used for connecting compiled libraries together and setting configurations. For example, if you used OpenCV with Python natively from the Python shell, you’d be using Python to configure OpenCV detection parameters. Then you’d be getting the coordinates of your detection from OpenCV. Either way, Python isn’t doing the image processing; processing 30 MB of data per second wouldn’t be possible with Python.

But if you’d like to simply use Python with the OpenCV library and the camera data, you could include OpenCV in the Python include folder. You’d still need a way to pass the image data to Python. That’s where the challenge is, because Python can reference an image in memory, but it doesn’t hold an image in memory. A 640x480 RGB image is 921,600 bytes. That is significant for Python, and it wouldn’t be able to process 30 images a second (approx. 30 MB per second). That’s why Python references memory addresses from compiled C/C#/C++ libraries, such as OpenCV.
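To make the numbers above concrete, here is the arithmetic as a few lines of Python. It assumes 3 bytes per pixel (one each for R, G and B) and 30 frames per second, as in the post; the function names are just for illustration.

```python
WIDTH, HEIGHT = 640, 480
BYTES_PER_PIXEL = 3  # one byte each for R, G, B
FPS = 30


def frame_bytes(width=WIDTH, height=HEIGHT, bytes_per_pixel=BYTES_PER_PIXEL):
    """Size of one uncompressed frame in bytes."""
    return width * height * bytes_per_pixel


def stream_bytes_per_second(fps=FPS):
    """Raw data rate of the uncompressed video stream in bytes per second."""
    return frame_bytes() * fps


print(frame_bytes())              # 921600
print(stream_bytes_per_second())  # 27648000
```

So the raw stream works out to about 27.6 MB per second, which is the "approx. 30 MB" figure quoted above.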

I think what would be most useful is a robot skill that provides a Python editor and exposes the image data from the camera robot skill. That way you could pass the memory pointer to the OpenCV library after configuring it with Python. But even this way you’re not doing custom tracking, because OpenCV is doing the work, not Python. Python just configures the OpenCV options.
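The "pass a reference, not a copy" idea can be shown with nothing but the Python standard library. This is only an illustration using `bytearray` and `memoryview`; it is not how ARC or OpenCV actually hand frames around, and the `frame` buffer here is a stand-in for memory owned by a compiled library.

```python
WIDTH, HEIGHT, CHANNELS = 640, 480, 3

# Stand-in for a frame buffer owned by some compiled library.
frame = bytearray(WIDTH * HEIGHT * CHANNELS)


def first_row(buffer):
    """Return a zero-copy view of the first image row."""
    return memoryview(buffer)[: WIDTH * CHANNELS]


row = first_row(frame)
row[0] = 255  # writes through the view into the original buffer

print(len(row))  # 1920
print(frame[0])  # 255
```

The view never duplicates the 921,600 bytes; it only points at them, which is the same reason a pointer to the camera image would be cheap to hand over.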

I guess the question I have is about the outcome of the lesson. If you’re using OpenCV, you’re getting the coordinates of the desired filter. And if you use the ARC camera robot skill, you also get the coordinates of the desired tracking type. The coordinates, types, etc. are in the global variable list. So you can write a Python script to do whatever you’d like with the detected coordinates; you don’t have to rely on the camera skill’s tracking functions.
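As a rough sketch of what students could write once they have the detected coordinates, here is a tiny proportional controller in Python that nudges a pan servo toward the object's x coordinate. Everything in it is hypothetical: the function name, the 640-pixel frame width, the 1 to 180 servo range, the gain and the deadband are assumptions for illustration. Reading the coordinate from ARC's global variables and sending the servo position are left out, since those depend on your setup.

```python
def next_pan_position(current_pos, object_x, frame_width=640,
                      gain=0.05, min_pos=1, max_pos=180, deadband=20):
    """Return a new pan servo position that moves toward the object.

    All parameter defaults are illustrative assumptions, not ARC values.
    """
    # Horizontal distance of the object from the center of the frame.
    error = object_x - frame_width / 2
    if abs(error) <= deadband:
        return current_pos  # close enough to center, so do not move
    # The sign convention depends on how the servo is mounted.
    new_pos = current_pos - gain * error
    # Clamp to the servo's allowed range.
    return int(max(min_pos, min(max_pos, round(new_pos))))
```

Calling this once per frame with the latest x coordinate gives a simple tracking loop that students can tune or replace with their own algorithm.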

Let me know if I’m way off base in understanding your outcome. I’m sure either way we can figure something out.