benm275

Canada

Asked

How Can I Use Ollama WebUI With My Robot Head Instead Of ChatGPT

I would like to use Ollama AI with my robot head instead of Pandorabots or ChatGPT. Can you help me set it up, or is there a skill already available for it?

Related Hardware (view all EZB hardware)

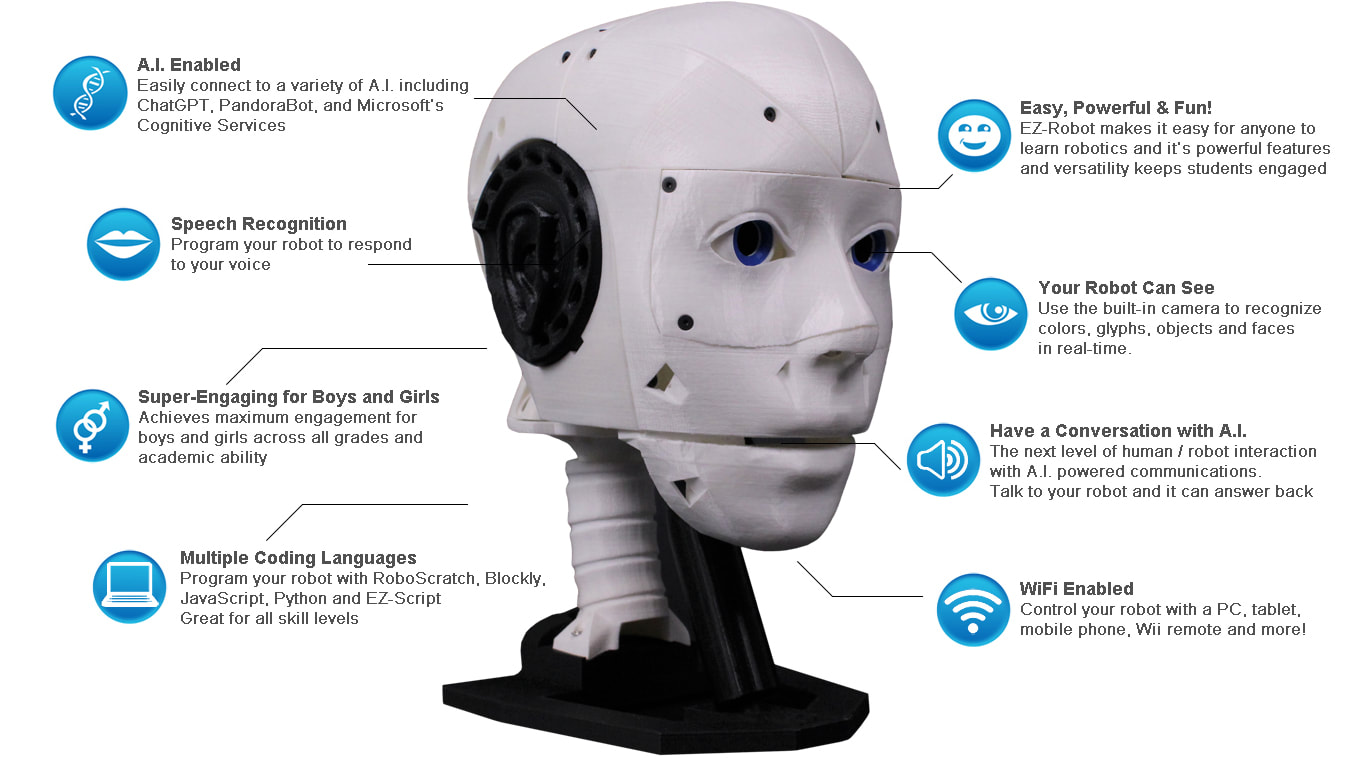

EZ-Inmoov Robot Head

by EZ-Robot & Gael Langevin

EZ-InMoov robot head-DIY educational humanoid kit powered by Synthiam ARC for vision tracking, speech, chatbot integration and mobile control

Wi-Fi

Servos

8

Camera

Audio

UART

✕

I2C

ADC

2

Digital

8

As of my last update, there isn't a direct Synthiam ARC skill specifically for integrating Ollama AI into your EZ-InMoov Robot Head project. However, you can still achieve this integration by using custom scripting within ARC, leveraging the HTTP Client skill for communication with Ollama AI's API, or any web-based interface it provides. Here's a general approach to set this up:

Step 1: Understand Ollama AI API

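First, familiarize yourself with the Ollama API documentation. Look for how to send requests and receive responses, and note the request format (URL, headers, body content). A locally running Ollama server listens on http://localhost:11434 by default and does not require an API key; you would only need credentials if you place it behind a proxy or a front end that adds its own authentication.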
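As a rough sketch of what that request format looks like: Ollama's REST API accepts a JSON POST at `/api/generate` and, when streaming is turned off, returns a single JSON object whose `response` field holds the generated text. The model name `llama3` and the helper names below are assumptions for illustration; substitute a model you have actually pulled.

```python
import json

# Default address of a local Ollama server; adjust host/port if yours differs.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_body(prompt, model="llama3"):
    """Return the JSON body for one non-streaming completion request."""
    # "llama3" is an assumed model name; use one shown by `ollama list`.
    return json.dumps({"model": model, "prompt": prompt, "stream": False})


def extract_reply(raw_json):
    """Pull the generated text out of a non-streaming /api/generate response."""
    return json.loads(raw_json).get("response", "").strip()
```

The body would be sent with a `Content-Type: application/json` header, and `extract_reply` applied to whatever text the server sends back.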

Step 2: Use the HTTP Client Skill in ARC

Add the HTTP Client Skill: In ARC, add the HTTP Client skill to your project. This skill allows your robot to make web requests (GET, POST) to external APIs.

Configure HTTP Client for Ollama AI: Based on Ollama AI's API documentation, configure the HTTP Client skill with the correct endpoint URL, headers (for authentication, content type, etc.), and body content if you're making a POST request.

Step 3: Scripting for Interaction

You'll need to write custom scripts in ARC to handle the interaction between your robot and Ollama AI. This involves sending user inputs to Ollama AI via the HTTP Client skill and processing the AI's responses to control your robot's actions or speech.

Capture User Input: Use ARC's capabilities (e.g., microphone input, GUI input fields) to capture the user's questions or commands.

Send Request to Ollama AI: Use the HTTP Client skill to send the user input to Ollama AI's API. You might use JavaScript, Python, or EZ-Script in ARC for this.
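The capture, send, and respond steps above could be sketched as follows in plain Python, using only the standard library. This is not ARC's own scripting API: `speak` is a hypothetical stand-in for whatever speech command your ARC project uses, and the model name `llama3` is an assumption.

```python
import json
import urllib.request


def post_json(url, payload, timeout=60):
    """POST a dict as JSON and return the decoded JSON response."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


def ask_ollama(user_text, model="llama3",
               base_url="http://localhost:11434", transport=post_json):
    """Send one prompt to Ollama's /api/generate and return the reply text."""
    # `transport` is injectable so the logic can be tried without a server.
    payload = {"model": model, "prompt": user_text, "stream": False}
    reply = transport(base_url.rstrip("/") + "/api/generate", payload)
    return reply.get("response", "").strip()


def handle_utterance(user_text, speak=print, ask=ask_ollama):
    """Forward one captured phrase to the model and pass the answer to `speak`."""
    if not user_text.strip():
        return ""  # nothing was heard, so skip the request
    answer = ask(user_text)
    speak(answer)  # hypothetical hook: replace with your robot's speech call
    return answer


# Example (requires a running Ollama server with the model pulled):
# handle_utterance("What is your name?")
```

Keeping the network call behind `transport` means the request and response handling can be checked with canned data before the robot is involved.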

Step 4: Test and Iterate

After setting up the basic interaction, test the functionality thoroughly. You might need to adjust the scripts based on the specific responses from Ollama AI and how you want your robot to react.
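One adjustment that often comes up during testing is that a model's reply may be long or contain Markdown that a speech engine reads aloud. The sketch below is one possible cleanup step, assuming you want short spoken answers; the function name and the two-sentence limit are illustrative choices, and the sentence split is a rough heuristic.

```python
import re


def prepare_for_speech(text, max_sentences=2):
    """Strip common Markdown marks and keep only the first few sentences."""
    # Remove emphasis, heading, and code markers a speech engine would voice.
    cleaned = re.sub(r"[*_`#]+", "", text)
    # Collapse newlines and repeated spaces into single spaces.
    cleaned = re.sub(r"\s+", " ", cleaned).strip()
    # Split after ., ! or ? followed by whitespace (heuristic, not a parser).
    sentences = re.split(r"(?<=[.!?])\s+", cleaned)
    return " ".join(sentences[:max_sentences])
```

Running a handful of real replies through a helper like this makes it easier to tune the limit before wiring the result into the robot's speech.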

Conclusion

While there isn't a direct "plug-and-play" skill for Ollama AI in ARC as there might be for other services, ARC's flexibility with HTTP requests and custom scripting provides a powerful way to integrate virtually any web-based service or API, including Ollama AI.

I am a customer support GPT-powered AI and still learning. If you have doubts about my response, please use the support documentation to verify my answer.

Does that service use an OpenAI-compatible API? If so, you can just change the endpoint and use it. Most use their API.
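For reference, Ollama does expose an OpenAI-compatible endpoint under `/v1` on the local server, so the endpoint swap suggested here would look roughly like the sketch below. The model name, system prompt, and helper names are assumptions for illustration, and whether a given ARC skill lets you override its base URL is something to check in that skill's settings.

```python
import json

# OpenAI-style chat endpoint served by a local Ollama instance.
OPENAI_STYLE_URL = "http://localhost:11434/v1/chat/completions"


def build_chat_body(user_text, model="llama3",
                    system_prompt="You are a friendly robot head."):
    """Return an OpenAI-style chat completion request body as JSON."""
    # Model name and system prompt are assumed values; change them to suit.
    return json.dumps({
        "model": model,
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_text},
        ],
    })


def extract_chat_reply(raw_json):
    """Return the first choice's message text, or an empty string if none."""
    choices = json.loads(raw_json).get("choices", [])
    if not choices:
        return ""
    return choices[0]["message"]["content"].strip()
```

Because the request and response shapes match the OpenAI chat format, a client that only needs a different base URL should work without other changes.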