Asked

I want to know which algorithms run behind object detection and speech recognition, and in which phases each process takes place. Kindly guide me.

Related Hardware (view all EZB hardware)

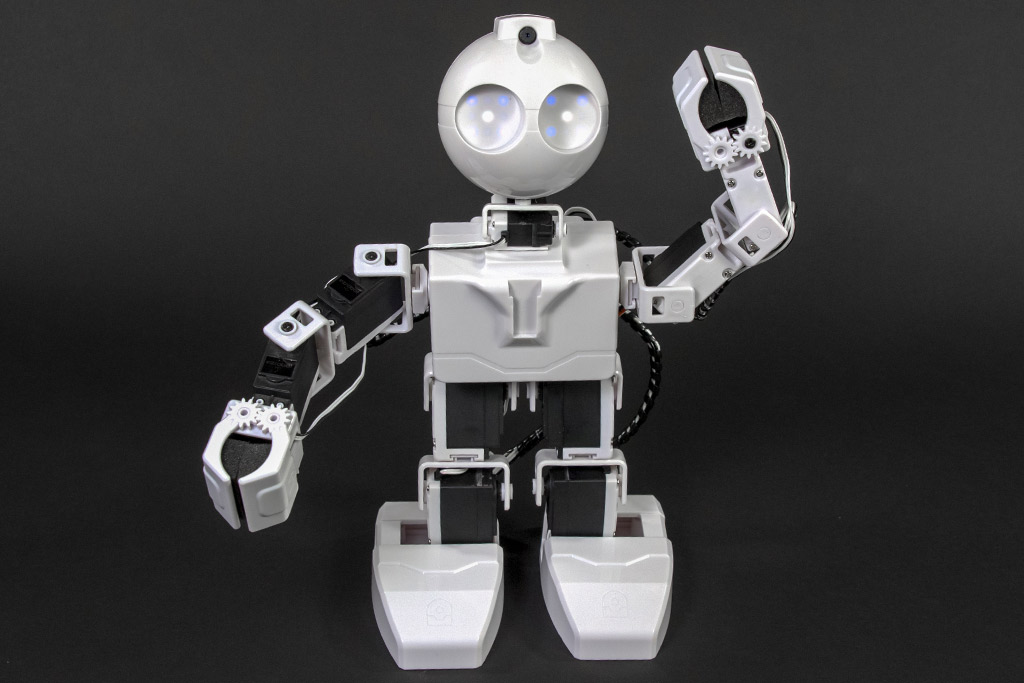

JD Humanoid

by EZ-Robot

JD humanoid robot kit - WiFi-enabled, 16 DOF with metal-gear servos; easy, fun, educational, available from the EZ-Robot online store.

Wi-Fi / USB

Servos

24

Camera

Audio

UART

3

I2C

ADC

8

Digital

24

Online speech recognition controls/plugins: Google, IBM (Watson), Microsoft (Bing/Cognitive Services)

Offline speech recognition controls/plugins: Speech Recognition (Microsoft Windows SAPI), Total Speech Recognition (Microsoft Windows SAPI)

Maybe I'm missing something in your question.

What about 1) object detection, 2) face detection or recognition, and 3) the learning/training process? What is the flow and algorithm behind each of these?

There are manuals that explain how to use the features you're asking about in ARC. Here are links to tutorials to get you started...

Camera device: https://synthiam.com/Products/Controls/Camera/Camera-Device-16120

Cognitive Vision: https://synthiam.com/Products/Controls/Camera/Cognitive-Vision-16211

Generally, every control has a short tutorial you can browse to see how to use it in your ARC project.
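
For a rough idea of the phases asked about above, here is a minimal sketch of one classical approach, color-threshold detection: take a frame, classify each pixel, then summarize the matching pixels as a bounding box and center. This is only a generic illustration and not ARC's actual implementation, which this thread does not describe. The names `is_target`, `detect_object`, `make_test_frame` and the threshold values are all made up for the example.

```python
from typing import List, Optional, Tuple

Pixel = Tuple[int, int, int]   # (R, G, B), each 0-255
Frame = List[List[Pixel]]      # rows of pixels, frame[y][x]


def is_target(pixel: Pixel, min_red: int = 150, max_other: int = 100) -> bool:
    """Phase 2: classify one pixel. Thresholds are assumed values for a red object."""
    r, g, b = pixel
    return r >= min_red and g <= max_other and b <= max_other


def detect_object(frame: Frame, min_pixels: int = 4) -> Optional[dict]:
    """Phase 3: collect matching pixels and summarize them as a box and a center."""
    xs, ys = [], []
    for y, row in enumerate(frame):
        for x, pixel in enumerate(row):
            if is_target(pixel):
                xs.append(x)
                ys.append(y)
    # Too few matching pixels is treated as noise, not as a detection.
    if len(xs) < min_pixels:
        return None
    return {
        "box": (min(xs), min(ys), max(xs), max(ys)),
        "center": (sum(xs) / len(xs), sum(ys) / len(ys)),
        "pixels": len(xs),
    }


def make_test_frame() -> Frame:
    """Phase 1 stand-in: a synthetic 8x6 black frame with a 3x2 red patch."""
    black, red = (0, 0, 0), (200, 30, 30)
    frame = [[black for _ in range(8)] for _ in range(6)]
    for y in range(2, 4):
        for x in range(3, 6):
            frame[y][x] = red
    return frame


if __name__ == "__main__":
    print(detect_object(make_test_frame()))
```

A real tracker would feed the detected center into a later phase, for example moving servos to keep the object centered. Face recognition and trained object recognition usually replace the per-pixel threshold with a learned model, but the capture, classify, and summarize flow stays similar.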