wolfjung

Hi guys and girls,

My daughter somehow got me into this robot obsession. (As a chemical engineer I'm normally biased towards chemistry sets for kids, though a robot looked more house-trained and offered a challenge in mechanical and computer engineering.)

Of course I probably jumped in too early and purchased a Meccanoid, and I have already started to see its limitations. I already have big plans to lobotomize the brain and upgrade to the Ez-robot platform, and further down the track to try to tack on an Alan-AI head (that thing is cool).

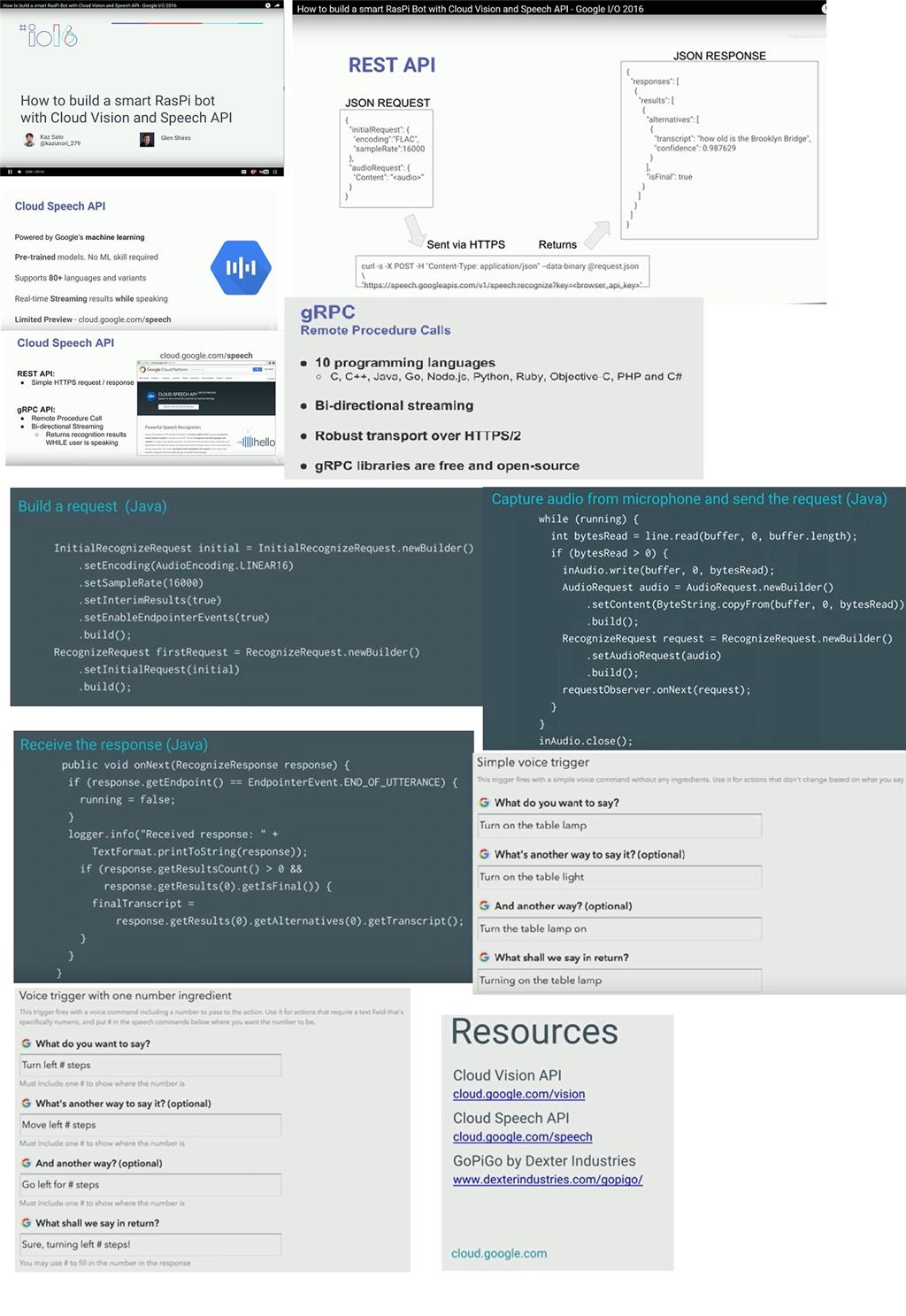

But I digress. I have seen some demos on the Dexter GoPiGo robot where Google ran their Cloud Vision and Speech APIs. You could ask questions in many different languages and get answers to nearly any question (somewhat like Alexa). It also looks like Alan has this capability (it looks like he is running Ez-robot).

I'd like to see who out there has this working on Ez-robot (particularly the Google Cloud Speech API). I'm a bit reluctant to launch into learning Python, Raspberry Pi and whatever else is needed at the moment. The constraints are the time available and the learning curve, and my wife would divorce me if I dedicated the time necessary. I've already been warned about spending more time talking to an AI robot than to her.

It has been an interesting journey so far and this site is fantastic. It's got me thinking about the complexities of the human body and just how nature, through evolution, has refined and perfected our nervous system and the programmed neurons that fire to make it all work. Just seeing how an arm and the hand movements work is a very deep and complex subject, without even taking into account all the other biological feedback happening at the same time. From what I've seen, we are still a long way off from imitating this, though that is what makes it exciting, and many new things are yet to come. So I look forward to hopefully sharing the journey with others on this website.

I have put in about 2 years of research on this type of topic, with a lot of work. The good news is that technology has advanced to the point that it is possible to have an AI in a robot similar to what is available through Google Home, Siri or the Amazon Echo.

I worked with IBM Watson, Google, Amazon, API.AI (bought out by Google), Wolfram|Alpha, Stanford NLP and some others. I had started trying to develop something that was all local, but it became limited because of the processing power and the huge amount of data it required.

Cloud is the way that all of this is going. This is a good thing, simply because of the limitations that you run into when developing something locally. The issue with Cloud is that things change so quickly. Literally every day I would spend hours seeing what was newly available. I would then spend weeks developing something that used the newly available cloud API, and then something else would come out that replaced what I had done. This tech is changing more quickly than I have ever seen anything change before. The other issue is that to make a robust solution you will need to depend on multiple cloud services and handle the passing of data between them. Each request then has to wait on several services in turn, so things become slower than you want them to be.
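To make the latency point concrete, here is a minimal sketch of a request passing through several services in sequence. The stage names and delays are made up for illustration and do not correspond to any real API; each stub just sleeps to imitate a network round trip.

```python
import time
from typing import Callable, List, Tuple

Service = Callable[[str], str]


def make_stub_service(name: str, delay_s: float) -> Service:
    """Build a fake cloud service that waits delay_s, then tags the payload."""
    def service(payload: str) -> str:
        time.sleep(delay_s)  # stands in for a network round trip
        return f"{payload} -> {name}"
    return service


def run_pipeline(stages: List[Tuple[str, Service]], payload: str):
    """Pass the payload through each stage in order and time every stage."""
    timings = []
    for name, service in stages:
        start = time.perf_counter()
        payload = service(payload)
        timings.append((name, time.perf_counter() - start))
    return payload, timings


# Hypothetical stages; a real chain would call the actual cloud services here.
stages = [
    ("speech_to_text", make_stub_service("speech_to_text", 0.01)),
    ("intent", make_stub_service("intent", 0.01)),
    ("knowledge", make_stub_service("knowledge", 0.01)),
]

result, timings = run_pipeline(stages, "hello")
total = sum(elapsed for _, elapsed in timings)
for name, elapsed in timings:
    print(f"{name}: {elapsed * 1000:.1f} ms")
print(f"total: {total * 1000:.1f} ms")
```

Because the stages run one after another, the total wait is the sum of every service's response time, which is why adding a service to the chain always makes the robot feel slower.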

Here is an example... The vision that I had is that someone would be able to say something like "Please refill my Actos prescription". From this statement you could assume that the person had diabetes. The robot would then remind the person to check their glucose levels and take their medication. This seems easy enough, except that things like Google and Amazon don't understand medications or diseases. This requires Wolfram|Alpha or Watson, and both of these become expensive. I had looked at making my AI a product that could be used by many people. Unfortunately the cost of getting very accurate data became too high to make it something that people would buy, especially when Google Home and Amazon Echo were free to use once purchased. The same goes for Siri and Cortana, and that is the model things are moving to. I couldn't bankroll a solution like this.

So, the conclusion that I came to is that the technology will get there in time. For example, the Echo has had 6000 new skills added to it in under a year. There is a huge group of people developing for it. It allows you to order things, ask generic questions and get reminders or build lists. It can tie into many home automation products. It does a large majority of what most people would want to do. The interesting thing is that 90% of the skills that are downloaded to it are used for a very short period of time and then not used again. This made me concerned about the longevity of this type of product, but for robots it makes sense as long as it can do what you want it to do. Ultimately I decided to buy a $50.00 Dot for my robot builds and let the many other people who are developing skills do the work of adding new features. I haven't done it yet, but I suspect that the Dot can be hacked pretty easily so that it can be triggered by tapping into the button on top of it.

The way that my system worked was that a Raspberry Pi 3 running Java code would handle all of the communication between all of the different services that were used. A plugin in ARC communicated to the Raspberry Pi 3 to send the request and receive the response in a variable in ARC. ARC would monitor the variable and when it changed would speak the variable.
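The "monitor the variable and speak when it changes" part can be sketched roughly like this. This is not the actual plugin or the Java code on the Pi; `relay_request` is a hypothetical stub for the relay, and appending to a list stands in for the robot speaking.

```python
from typing import Callable, Optional


class VariableWatcher:
    """Fires a callback only when the watched value is non-empty and has changed."""

    def __init__(self, on_change: Callable[[str], None]) -> None:
        self._last: Optional[str] = None
        self._on_change = on_change

    def poll(self, current: Optional[str]) -> bool:
        """Check the current value once; return True if the callback fired."""
        if current and current != self._last:
            self._last = current
            self._on_change(current)
            return True
        return False


def relay_request(question: str) -> str:
    """Hypothetical stand-in for the relay that talks to the cloud services."""
    return f"Stub answer to: {question}"


spoken = []  # each entry represents one thing the robot said
watcher = VariableWatcher(on_change=spoken.append)

response_variable = None
watcher.poll(response_variable)  # nothing received yet, so nothing is spoken

response_variable = relay_request("What is the boiling point of water?")
watcher.poll(response_variable)  # value changed, spoken once
watcher.poll(response_variable)  # unchanged, not spoken again

print(spoken)
```

One thing to watch with this approach: if two requests in a row return exactly the same text, the second one never counts as a change, so the variable would need to be cleared (or a counter added) between requests.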

The thing to remember is that when asking a question there could be a lot of information returned. The service that I was using would return a sentence or two that were designed to be read aloud. The other data would also be returned so that I could do other things with it (like display a math formula or something like that). The reason that this is important is that a lot of the services (such as TTS services) charge based on the length of the text that is sent to them. This wasn't a huge deal for ARC as I just used the SAY function in ARC to say the text, but if you wanted to tie it into other things like phones, TVs or anything else that doesn't have TTS built in, it could get pricey.
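Here is a rough sketch of why keeping the short "spoken" text separate from the rest of the data matters when a TTS service bills by length. The response layout and the price are assumptions for illustration only, not the format or rate of any real service.

```python
import json
from dataclasses import dataclass, field


@dataclass
class ServiceResponse:
    """Assumed response shape: a short sentence to speak plus extra data."""
    spoken_text: str
    extra: dict = field(default_factory=dict)


def tts_cost(text: str, price_per_million_chars: float) -> float:
    """Estimate the TTS charge for a text billed per character."""
    return len(text) * price_per_million_chars / 1_000_000


# Made-up rate, purely for illustration.
PRICE_PER_MILLION_CHARS = 16.0

response = ServiceResponse(
    spoken_text="Water boils at 100 degrees Celsius at sea level.",
    extra={
        "formula": "T_b = 100 C at 101.325 kPa",
        "related": ["melting point", "vapor pressure", "altitude effects"],
    },
)

# Sending only the spoken sentence versus sending everything that came back.
full_payload = response.spoken_text + " " + json.dumps(response.extra)
cost_spoken = tts_cost(response.spoken_text, PRICE_PER_MILLION_CHARS)
cost_full = tts_cost(full_payload, PRICE_PER_MILLION_CHARS)

print(f"spoken only: {len(response.spoken_text)} chars, cost {cost_spoken:.6f}")
print(f"full payload: {len(full_payload)} chars, cost {cost_full:.6f}")
```

The per-request difference is tiny, but it scales with every question asked, so only the sentence meant to be read should go to a paid TTS service and the rest should be kept for display or other logic.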

Okay, going back into my hole now. I hope this helps some.

Dave is definitely the guy to speak to about these AI/speech cloud services! Thanks for the info, Dave.

One thing I'll add: the reason ez-robot hasn't released an official plugin is the licensing of the services. Every one of these services costs money. So any plugin that ez-robot creates will have to be paid for somehow, by the user or by us. The software is freely distributed, so there's no revenue model for ez-robot to pay for a 3rd party cloud service for 50,000 (and growing) people - at least, not without us implementing a paid service for the software, but that won't make many people happy.

The short story is we're waiting for an AI/speech cloud service that...

On that note, it sure shouldn't stop anyone from creating a plugin using the fantastic walk-through tutorial!