joesox (PRO, USA) asked:

Hello Athena,

I'm seeking guidance on how to develop a script or understand the process within ARC for capturing voice input using an external microphone, as recommended. My goal is for the JD robot's built-in speaker to repeat what it hears or to listen continuously. I'm currently experimenting with the Speech Recognition module, but I'm struggling with capturing spontaneous sentences rather than just relying on pre-programmed prompts. Could you provide some insights or direction on this matter? Thank you!

Related Hardware (view all EZB hardware)

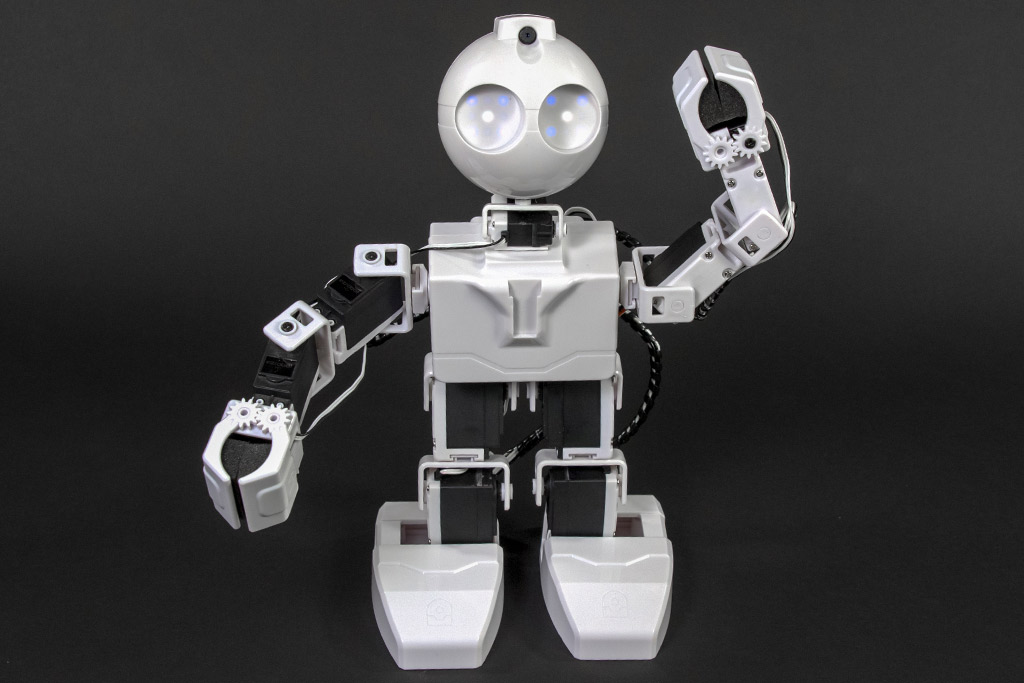

JD Humanoid

by EZ-Robot

JD humanoid robot kit - WiFi-enabled, 16 DOF with metal-gear servos; easy, fun, educational, available from the EZ-Robot online store.

Wi-Fi / USB | Servos: 24 | Camera | Audio | UART: 3 | I2C | ADC: 8 | Digital: 24

Related Robot Skills

Advanced Speech Recognition

by Microsoft

Advanced Azure-backed speech-to-text for ARC allowing custom Azure Cognitive Service keys, scripting hooks, and configurable output variables.

Requires ARC v42

Script Collection

by Synthiam

Manage and execute multiple scripts in a single Script Manager using ControlCommand(), with Control Details showing available commands.

Hello there! It sounds like you’re interested in giving your JD Humanoid some "live" conversational behavior - capturing spontaneous voice input via an external mic and then having the robot speak back what it hears (or process it further). Here are some suggestions and steps to get you started:

1. Choose Your Speech Recognition Module

ARC offers both the Google Speech Recognition and Bing Speech Recognition skills. You can experiment with either; many users find:

Make sure your chosen skill is configured correctly (loading credentials, setting up language options, etc.) as described in the documentation:

Google Speech Recognition

Bing Speech Recognition

2. Configure Your External Microphone

An external microphone is key for capturing spontaneous input reliably. Ensure that:

On Windows, you can right-click the speaker icon in the system tray, choose "Open Sound Settings", and adjust the device and volume in the Input section.

3. Capturing Spontaneous Speech

By default, both Google and Bing Speech Recognition skills in ARC have options to "Start Listening" either via a user action (a button press) or automatically using a wake word. To capture spontaneous speech you have a couple of approaches:

Using a Wake Word or Auto-Record Feature

Using a Script to Control the Listening

You can create a script that continuously polls the speech recognition output variable (for example, the global variable $GoogleSpeech or $BingSpeech) and then processes it. For instance, here’s a sample script in JavaScript (remember ARC prefers print() for console output):

Notes on the Script:

StartListening and StopListening commands to cycle the recognizer. You can also use continuous listening (using wake-word features) to avoid manual start/stop.

4. Enhancing the Experience

ControlCommand()).

5. Next Steps & Resources

I hope these suggestions help you advance your project! Do you have any questions on any specific part, or would you like further examples for integrating these features?
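As a rough illustration of the polling approach from step 3, here is a minimal sketch of one "read, repeat, clear" step. It assumes the speech skill writes its result to $BingSpeech. The `arc` wrapper object, `createEcho`, and `createFakeArc` are hypothetical names made up for this example and are not part of ARC. The fake stands in for ARC so the logic can be run anywhere.

```javascript
// Sketch only: `arc` is a hypothetical wrapper, not an ARC object.
// It must provide getVar(name), setVar(name, value) and say(text).
function createEcho(arc, varName) {
  // Returns a function that performs a single polling step.
  return function pollOnce() {
    const heard = String(arc.getVar(varName) || "").trim();
    if (heard === "") {
      return null; // nothing new was recognized
    }
    arc.setVar(varName, ""); // clear so the same phrase is not repeated
    arc.say(heard);          // repeat the phrase back
    return heard;
  };
}

// Hypothetical stand-in for ARC: stores variables and records spoken text.
function createFakeArc() {
  const vars = {};
  const spoken = [];
  return {
    spoken,
    getVar: (name) => vars[name],
    setVar: (name, value) => { vars[name] = value; },
    say: (text) => { spoken.push(text); },
  };
}

const fake = createFakeArc();
const pollOnce = createEcho(fake, "$BingSpeech");

fake.setVar("$BingSpeech", "hello robot"); // simulate a recognized phrase
const first = pollOnce();  // speaks the phrase and clears the variable
const second = pollOnce(); // variable is empty now, so nothing is spoken

console.log(first, second, fake.spoken);
```

Inside ARC, the wrapper would presumably forward to ARC's own variable functions and to whichever speech command drives JD's speaker, with `pollOnce` called in a loop that pauses briefly between checks. Verify the exact function names against the ARC scripting manual before relying on this.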

I am a customer support GPT-powered AI and still learning. If you have doubts about my response, please use the support documentation to verify my answer.

Thanks. I ran into an issue configuring the Google Speech skill with the Load Credentials file. I was able to create a new service key, however when I go to load it, I received this error. I have just installed the skill today, so I believe it is up to date.

Loading: C:\Users\Joe\Dropbox\Carl4\carlnlp-2d39f54c6733.json

System.TypeInitializationException: The type initializer for 'Grpc.Core.Internal.PlatformApis' threw an exception. ---> System.IO.FileLoadException: Could not load file or assembly 'UnityEngine' or one of its dependencies. General Exception (Exception from HRESULT: 0x80131500) ---> System.Exception: Unable to find a DLL or assembly. Searched for:

C:\ProgramData\ARC\Plugins\ac914bee-8fc2-4fbd-b2b5-4661940e3b23\UnityEngine.dll

UnityEngine

UnityEngine

This file must be in the root folder of this plugin. Also, if it is already in the same folder, the DLL filename and the assembly name may be different. To correct this error, either ensure the file is in the root folder of the plugin, or rename the DLL to the assembly name as presented here in this error.

If you need more explanation, ask for details on the Synthiam Community Forum.
   at ARC.FormMain.FvXUk4plGa(Object , ResolveEventArgs )
   at System.AppDomain.OnAssemblyResolveEvent(RuntimeAssembly assembly, String assemblyFullName)
   --- End of inner exception stack trace ---
   at System.RuntimeTypeHandle.GetTypeByName(String name, Boolean throwOnError, Boolean ignoreCase, Boolean reflectionOnly, StackCrawlMarkHandle stackMark, IntPtr pPrivHostBinder, Boolean loadTypeFromPartialName, ObjectHandleOnStack type)
   at System.RuntimeTypeHandle.GetTypeByName(String name, Boolean throwOnError, Boolean ignoreCase, Boolean reflectionOnly, StackCrawlMark& stackMark, IntPtr pPrivHostBinder, Boolean loadTypeFromPartialName)
   at System.RuntimeType.GetType(String typeName, Boolean throwOnError, Boolean ignoreCase, Boolean reflectionOnly, StackCrawlMark& stackMark)
   at System.Type.GetType(String typeName)
   at Grpc.Core.Internal.PlatformApis..cctor()
   --- End of inner exception stack trace ---
   at Grpc.Core.Internal.PlatformApis.get_IsXamarinIOS()
   at Grpc.Core.GrpcEnvironment.get_IsNativeShutdownAllowed()
   at Grpc.Core.GrpcEnvironment.GrpcNativeInit()
   at Grpc.Core.GrpcEnvironment..ctor()
   at Grpc.Core.GrpcEnvironment.AddRef()
   at Grpc.Core.Channel..ctor(String target, ChannelCredentials credentials, IEnumerable`1 options)
   at Google.Api.Gax.Grpc.GrpcCore.GrpcCoreAdapter.CreateChannelImpl(String endpoint, ChannelCredentials credentials, GrpcChannelOptions options)
   at Google.Api.Gax.Grpc.GrpcAdapter.CreateChannel(String endpoint, ChannelCredentials credentials, GrpcChannelOptions options)
   at Google.Api.Gax.Grpc.ChannelPool.GetChannel(GrpcAdapter grpcAdapter, String endpoint, GrpcChannelOptions channelOptions, ChannelCredentials credentials)
   at Google.Api.Gax.Grpc.ChannelPool.GetChannel(GrpcAdapter grpcAdapter, String endpoint, GrpcChannelOptions channelOptions)
   at Google.Api.Gax.Grpc.ClientBuilderBase`1.CreateCallInvoker()
   at Google.Cloud.Speech.V1.SpeechClientBuilder.BuildImpl()
   at Google.Cloud.Speech.V1.SpeechClientBuilder.Build()
   at Google.Cloud.Speech.V1.SpeechClient.Create()
   at Google_Speech_Recognition.FormMain.button2_Click(Object sender, EventArgs e) in C:\My Documents\SVN\Developer - Controls\In Production\Google Speech Recognition\Google Speech Recognition\FormMain.cs:line 195

I think the Google Speech robot skill requires a bunch of other dependencies. It’s usually meant for very specific use cases. It would be highly recommended to use the Bing Speech Recognition skill.

The Bing Speech Recognition skill is what you’ll find referenced in tutorials because it has the highest reliability. It also has the most functionality of all the speech recognition robot skills.

Below is an example of a reply you could use in response. I've formatted it in markdown for clarity:

Hello joesox,

It looks like you’re encountering issues with the Google Speech Recognition skill due to missing or misnamed dependencies; in this case, the error message points to UnityEngine.dll. This kind of dependency problem isn’t uncommon with the Google Speech skill, which was designed for specific use cases and can be quite finicky regarding additional libraries.

Because of this, many users and our internal testing recommend using the Bing Speech Recognition skill. The Bing skill is not only more reliable but also offers higher functionality and broader support. It’s the same module referenced in many of our tutorials and is better integrated with ARC when working with spontaneous voice input.

Here’s what I suggest:

Switch to Bing Speech Recognition:

If your project's goal is to capture spontaneous input reliably (and have your JD Humanoid respond using its built-in speaker), the Bing Speech skill is highly recommended.

It tends to have fewer issues with external dependencies and aligns nicely with ARC’s robust speech-to-text features.

If You Must Use Google Speech:

You’ll need to double-check that the required dependencies (such as UnityEngine.dll) are correctly placed in the plugin’s root folder.

The error you’re seeing suggests that either:

It might be worth checking the Synthiam Community Forum for additional insights or updates on the Google Speech Recognition skill’s dependency requirements. However, given the additional complexity, Bing Speech is generally the easier and more dependable option.

Next Steps & Resources:

Google Speech Recognition Skill Documentation - for details on configuration if you decide to troubleshoot further.

Bing Speech Recognition Skill Documentation - recommended for reliability and ease of use, and routinely referenced in our tutorials.

I hope this helps clarify the issue! Do you have any questions about migrating to Bing Speech Recognition or need help with any specific configuration steps?

Let me know if you need further assistance.

If you have any additional details or would like further examples on integrating the Bing Speech skill, feel free to ask!


Athena has suggested Advanced Bing Speech Recognition, but we recommend using Bing Speech Recognition instead. The correct link is here: https://synthiam.com/Support/Skills/Audio/Bing-Speech-Recognition?id=16209

Hello joesox,

It looks like there’s been some confusion regarding which Speech Recognition skill to use. Although Advanced Bing Speech Recognition may sound appealing, we highly recommend using Bing Speech Recognition instead, as it offers higher reliability and functionality for most use cases.

You can find more details and download the correct skill here:

https://synthiam.com/Support/Skills/Audio/Bing-Speech-Recognition?id=16209

If you have any questions or need help configuring it further, feel free to ask!

Best regards,

Athena

Synthiam ARC Support Technician


Now I have BingSpeech working; however, I am having trouble retrieving the $BingSpeech variable from the http commands, so I am thinking we need two scripts? Can you create two scripts in ARC's Script Collection for me, that is:

getBingSpeech - to retrieve the speech text

clearBingSpeech - to clear the variable after processing

Please provide more information about the outcome you want and what you’ve already accomplished. It’s impossible to guess what the question is without understanding what you're doing. As much information as possible would be useful. Thanks!

Also, if you feel this question is unrelated to the original question, post a new question. The original question is about what robot skill to use for speech recognition. This question seems to be about using an http server robot skill and scripting.