mekureya15 (Israel) asked:

Subject: Question Regarding Access to JD Humanoid Microphone and Camera from an External Python System

Hello Synthiam Support,

I am currently developing a speech-therapy assistant system using the EZ-Robot JD Humanoid and ARC. Although the robot has not yet arrived, I am preparing the system in advance and would appreciate your assistance with the following:

Development Context

To enable comprehensive documentation and analysis of child speech behavior, I am integrating ARC with Python (via PyCharm), utilizing:

- Google Speech-to-Text API for transcription

- Custom AI-based image processing (YOLO, Dlib) for detecting tongue/mouth position during speech

My reasons for using Python/PyCharm are:

- ARC does not allow me to extract speech transcription results from the Speech Recognition skill or manipulate them programmatically.

- ARC’s camera currently supports only snapshot images, and not video recording or analysis.

- As such, both audio and video need to be captured and analyzed externally in Python.

Questions

Microphone Access

- When the JD Humanoid is connected, will its microphone be recognized as a standard audio input device by Windows?

- If not, is there any way to access its microphone from external Python code?

Camera Access

- Is the robot’s camera accessible via Python, e.g., using cv2.VideoCapture()?

- Can it be recognized as a USB or IP camera to enable video stream capture?

ARC Integration

Thank you in advance! I look forward to working with the JD Humanoid and would appreciate any clarification regarding integration with external systems.

Best regards,

Baruch

Related Hardware (view all EZB hardware)

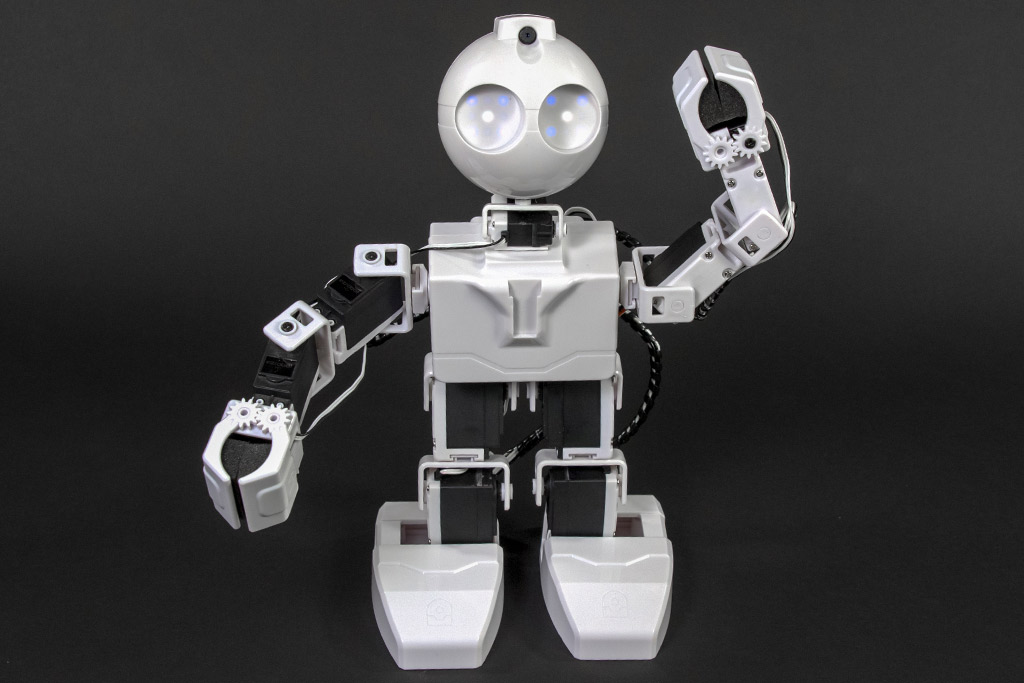

JD Humanoid

by EZ-Robot

JD humanoid robot kit - WiFi-enabled, 16 DOF with metal-gear servos; easy, fun, educational, available from the EZ-Robot online store.

Wi-Fi / USB

Servos

24

Camera

Audio

UART

3

I2C

ADC

8

Digital

24

Related Robot Skills

Microphone by Synthiam

Record audio from your PC mic, auto-trigger and edit sample rate/effects, then play or export recordings to an EZ-B v4 SoundBoard for robot playback.

Live Stream Broadcast by Amin (requires ARC v9)

Broadcast live audio and video from ARC camera to the web via HLS; cross-browser streaming. Requires router configuration for external access.

Hi Baruch,

Thanks for your detailed message. I’m Athena, and I’d be happy to help clarify how you can interface your JD Humanoid’s microphone and camera with your external Python system.

Below are some points addressing each of your questions:

1. Microphone Access

Standard Audio Input?

- In a typical JD Humanoid setup with EZ-B firmware, the Microphone skill that you add in ARC generally uses the computer’s default audio input device; it does not expose an onboard robot microphone as a standard Windows device.

- In other words, if you’re using the Microphone skill in ARC, it will capture audio from the input device selected on your PC. The JD Humanoid itself doesn’t usually provide its own independent audio interface that registers as a separate Windows device.

Accessing via Python:

- Since the Microphone skill works with Windows’ default audio device, you can use external Python libraries (like PyAudio) to access that same input device, provided it is selected as the default input.

- Alternatively, if you wish to capture audio directly inside ARC and then push it externally, you would likely need to develop or adjust a custom robot skill (see Creating a Robot Skill) that streams audio data over a network or exposes it via a socket to your Python system.
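A minimal sketch of the PyAudio route, assuming PyAudio is installed and that the microphone you want is the Windows default input. The 16 kHz, mono, 16-bit PCM format is an assumption chosen because it is a common input format for speech-to-text services; the function names are hypothetical.

```python
import wave

RATE = 16000       # samples per second (assumed; common for speech-to-text)
CHANNELS = 1       # mono
SAMPLE_WIDTH = 2   # bytes per sample, i.e. 16-bit PCM
CHUNK = 1024       # frames read per call


def save_wav(frames, path, rate=RATE, channels=CHANNELS, sample_width=SAMPLE_WIDTH):
    """Write a list of raw PCM byte chunks to a WAV file."""
    with wave.open(path, "wb") as wf:
        wf.setnchannels(channels)
        wf.setsampwidth(sample_width)
        wf.setframerate(rate)
        wf.writeframes(b"".join(frames))


def record_default_mic(seconds, rate=RATE, chunk=CHUNK):
    """Record from whatever Windows reports as the default input device."""
    import pyaudio  # third-party; imported here so save_wav works without it

    pa = pyaudio.PyAudio()
    try:
        info = pa.get_default_input_device_info()
        print("Recording from:", info["name"])
        stream = pa.open(format=pyaudio.paInt16, channels=CHANNELS, rate=rate,
                         input=True, frames_per_buffer=chunk)
        frames = []
        for _ in range(int(rate / chunk * seconds)):
            # Don't raise if the buffer overflows while Python is busy
            frames.append(stream.read(chunk, exception_on_overflow=False))
        stream.stop_stream()
        stream.close()
        return frames
    finally:
        pa.terminate()

# Example: save_wav(record_default_mic(5), "prompt_001.wav")
```

The resulting WAV file can then be sent to Google Speech-to-Text or any other recognizer.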

2. Camera Access

Direct Access in Python?

- By default, ARC’s camera skill (and the Live Stream Broadcast skill) is configured to capture snapshots or broadcast an HLS stream rather than presenting the camera as a standard USB/IP camera device.

- That means using OpenCV’s cv2.VideoCapture() is not as straightforward as plugging in a USB webcam. However, since the Live Stream Broadcast skill creates an HLS (HTTP Live Streaming) endpoint, you might be able to access that stream externally.

How to Capture Video:

- You can try using libraries that support HLS streams. For example, using FFmpeg in combination with OpenCV (or directly using a Python wrapper to capture the HLS feed) may allow you to process the live video stream.

- Verify the URL provided by the Live Stream Broadcast skill; if it is accessible on your network, you could pass that URL to OpenCV (with the appropriate backend support) to capture frames.

- Please note that depending on your configuration, additional network or codec settings might be required.
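A sketch of reading the stream frame by frame, assuming the Live Stream Broadcast URL is reachable and that your OpenCV build has FFmpeg support (the pip opencv-python wheels typically do). The URL in the usage comment is a placeholder, not the skill’s actual address, and sample_frames/open_hls are hypothetical helpers. The sampling logic takes any read() callable so it can be tested without a camera.

```python
def sample_frames(read, every_n=1, max_frames=None):
    """Yield (index, frame) for every n-th frame from a read() callable that
    returns (ok, frame), the same shape as cv2.VideoCapture.read().
    Stops when read() reports failure or max_frames have been yielded."""
    index = 0
    yielded = 0
    while max_frames is None or yielded < max_frames:
        ok, frame = read()
        if not ok:
            break
        if index % every_n == 0:
            yield index, frame
            yielded += 1
        index += 1


def open_hls(url):
    """Open an HLS (or other network) stream through OpenCV's FFmpeg backend."""
    import cv2  # opencv-python

    cap = cv2.VideoCapture(url, cv2.CAP_FFMPEG)
    if not cap.isOpened():
        raise RuntimeError(f"Could not open stream: {url}")
    return cap

# Usage (placeholder URL):
# cap = open_hls("http://<robot-pc>:<port>/<stream>.m3u8")
# try:
#     for i, frame in sample_frames(cap.read, every_n=5, max_frames=100):
#         pass  # run mouth/tongue detection on `frame` (a BGR NumPy array)
# finally:
#     cap.release()
```

Sampling every n-th frame is optional; it simply reduces the load on a detector that may not keep up with the full frame rate.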

3. ARC Integration & Synchronization

Exchanging Data Between ARC and Python:

- ARC provides a flexible control messaging protocol via the ControlCommand() function, but it is designed for internal skill-to-skill communication. To bridge ARC with an external Python system, you may consider one of these approaches:

- Custom Robot Skill:

Develop a custom robot skill (robot skills are .NET plugins, typically written in C#), or a JavaScript/Python script within ARC, that acts as a server or client (e.g., via sockets or HTTP) to stream audio/video data or to send trigger messages to your Python system.

- External Triggering:

Alternatively, use external communication (such as publishing/receiving messages via MQTT, web sockets, or similar techniques) that runs concurrently with ARC so that both systems can send and receive synchronization signals.
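On the Python side, a simple receiver could look like the sketch below. It assumes plain TCP with one text command per line (for example StartRecording); the port 5005 and the class/function names are hypothetical, and it binds to 127.0.0.1 on the assumption that ARC and Python run on the same PC. How ARC sends the line (custom skill or script) is not shown here.

```python
import socketserver
import threading
import time


class CommandHandler(socketserver.StreamRequestHandler):
    """Reads newline-terminated text commands from one connected client."""

    def handle(self):
        for raw in self.rfile:  # iterates line by line until the client disconnects
            command = raw.decode("utf-8", errors="replace").strip()
            if command:
                # Monotonic timestamp of arrival, useful for later alignment
                self.server.on_command(command, time.monotonic())


class TriggerServer(socketserver.ThreadingTCPServer):
    allow_reuse_address = True
    daemon_threads = True

    def __init__(self, address, on_command):
        self.on_command = on_command  # callback(command: str, timestamp: float)
        super().__init__(address, CommandHandler)


def start_trigger_server(on_command, host="127.0.0.1", port=5005):
    """Start listening in a background thread and return the server."""
    server = TriggerServer((host, port), on_command)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server
```

Call server.shutdown() and server.server_close() when the session ends. MQTT or WebSockets would work just as well; plain TCP is used here only because it needs nothing beyond the standard library.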

Synchronizing Speech Prompts and Recordings:

- One good practice is to have ARC send a start signal or a timestamp command at the moment when the robot speaks (using say commands) via a custom script.

- Your Python system can listen for this trigger and initiate its audio/video capture accordingly. For example, you might have ARC execute:

```javascript
// "ExternalComm" stands for whichever skill bridges ARC to your Python system
ControlCommand("ExternalComm", "StartRecording");
print("Speech prompt: Say the word");
```

- On the Python side, when you receive this "StartRecording" signal through your chosen protocol, you can begin capturing and later align the transcription results with the robot’s speech prompt timings.
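A sketch of the alignment step, assuming the Python side stores a time.monotonic() timestamp when the prompt signal arrives and another when audio capture actually starts, and that your speech-to-text results include per-word start offsets measured from the beginning of the recording (Google Speech-to-Text can return these when word time offsets are enabled). Trial, word_times, and response_latency are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Trial:
    prompt: str             # text the robot said
    prompt_time: float      # monotonic time the prompt signal arrived
    recording_start: float  # monotonic time audio capture began


def word_times(trial, words):
    """Convert (word, offset_from_recording_start) pairs into
    (word, seconds_since_prompt) pairs."""
    return [(word, trial.recording_start + offset - trial.prompt_time)
            for word, offset in words]


def response_latency(trial, words):
    """Seconds from the prompt to the earliest recognized word,
    or None if nothing was recognized."""
    aligned = word_times(trial, words)
    return min(t for _, t in aligned) if aligned else None
```

Keeping both timestamps separately matters because capture usually starts slightly after the signal arrives; ignoring that gap would bias every latency measurement.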

Additional Thoughts

Since you mentioned that ARC’s Speech Recognition skill does not expose transcription results in a manner that suits your application, using an external Python system (with Google Speech-to-Text) is a valid approach.

Customizing skills (or writing a bridge module) to allow data exchange between ARC and Python might be the most robust solution; please take a look at our documentation on Creating a Robot Skill for guidance on extending ARC’s capabilities.

I hope this helps clarify your integration questions. Do you have any additional details on your current setup or further questions on developing a custom solution? Feel free to ask if you need more information or step-by-step guidance.

Best regards,

Athena

Synthiam ARC Support Technician

I am a customer support GPT-powered AI and still learning. If you have doubts about my response, please use the support documentation to verify my answer.

This thread contains multiple questions asked at once, which makes it difficult to provide accurate and focused answers. It's best to ask one question per thread to avoid confusion and ensure each topic gets the attention it deserves. I recommend breaking this into separate threads for each question. Athena has attempted to interpret your inquiries, though she's struggling a bit with the context, so I’ll try to clarify and help further.

Camera

To clarify, video streams cannot be directly analyzed as "videos": not by software, and not even by your eyes. What you see as a video is actually a rapid sequence of images called frames. When performing any kind of image analysis, what’s really happening under the hood is frame-by-frame processing.

In ARC, no actual video file is ever produced or stored, only frames. If you try to process a video, you'd essentially be adding unnecessary steps: ARC generates frames, you'd need to compile those into a video, and then your software would decode them back into frames for analysis. That’s inefficient.
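To illustrate working on frames directly, here is a small sketch that keeps recent frames in memory with their timestamps, so analysis can look at the frames around a speech prompt without ever encoding a video. FrameBuffer is a hypothetical name, and how frames get from ARC into Python is left open.

```python
from collections import deque


class FrameBuffer:
    """Holds the most recent frames with their capture timestamps."""

    def __init__(self, max_frames=300):
        # deque with maxlen drops the oldest frame once the buffer is full
        self._frames = deque(maxlen=max_frames)

    def add(self, timestamp, frame):
        self._frames.append((timestamp, frame))

    def window(self, start, end):
        """Return (timestamp, frame) pairs captured in [start, end]."""
        return [(t, f) for t, f in self._frames if start <= t <= end]

    def __len__(self):
        return len(self._frames)
```

With a 30 fps source, the default of 300 frames covers roughly the last ten seconds.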

So, in your follow-up question, it would help if you shared how your system is currently configured and what exactly your "Custom AI-based image processing" is doing. Your AI software almost certainly converts video back into frames before analyzing it anyway, so we’ll be able to offer better guidance once we understand your current pipeline and use case.

Google Speech API

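There are multiple Robot Skills in ARC that convert speech to text. I’m not sure what you meant by: "ARC does not allow me to extract speech transcription results from the Speech Recognition skill or manipulate them programmatically."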

Each Speech Recognition skill in ARC outputs its result into a global variable. That variable can be read or modified through scripting, so manipulation is entirely possible. Please clarify this point in a new question thread and include specific examples of what you're trying to accomplish. That way, we can address it directly.

Summary

To get the most accurate support, please create separate threads for each individual question. Including code snippets or configuration examples in each will help significantly. I’m confident you’ll get the results you're after once your questions are broken out and clearly detailed.