About Synthiam

Synthiam Inc. is a pioneering force in automation, artificial intelligence (AI), and technology business integration, dedicated to making advanced technologies accessible to a broad audience. The company's mission is to democratize AI and robotics, enabling businesses of all sizes to enhance efficiency and unlock new potential.

Synthiam simplifies the integration of AI and robotics into business operations, catering to users without a deep technical background in robotics. This approach has made Synthiam a hub for innovation, supported by a vibrant community of developers and technology enthusiasts.

The core of Synthiam's offerings is a suite of software solutions that facilitate intuitive human-machine interactions, allowing for the development of customized robotics applications tailored to streamline operations, reduce costs, and improve service delivery. This commitment to providing cutting-edge technology is supported by ongoing research and development, ensuring clients access to the most advanced tools in AI and robotics.

Beyond software, Synthiam offers comprehensive consulting services to guide businesses through identifying and implementing automation and AI solutions. From conceptualization to execution, Synthiam provides end-to-end support to ensure successful technology integration.

Synthiam also prioritizes education and community engagement, offering resources and workshops to foster a culture of learning and innovation. This commitment extends its impact beyond corporate transformation to empower individuals to contribute to the technological future.

Synthiam is creating a new version of ARC called ARCx.

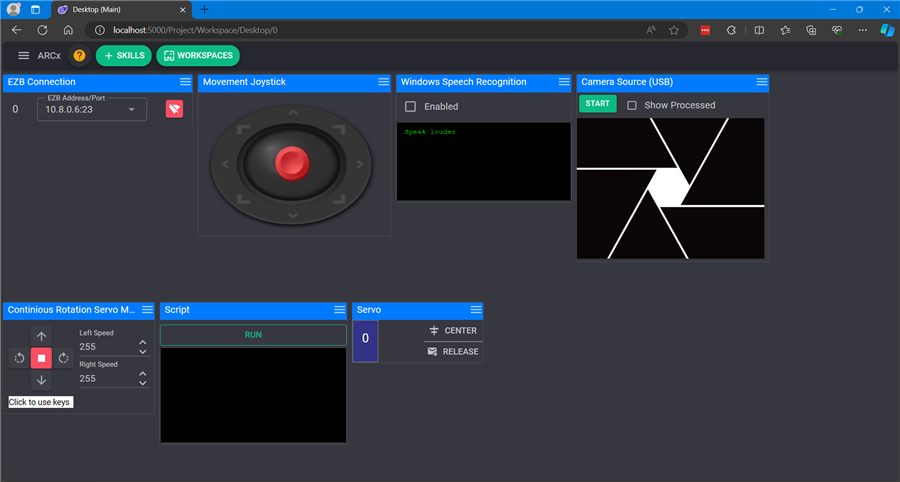

The new version is cross-platform and runs on Linux (Ubuntu) for x86 or arm64, Windows 64, and macOS arm64. The new Synthiam ARCx aims to be a robot development environment that accommodates users from education, DIY, and small/medium businesses. The x in the name ARCx represents the versatility of the platform, which targets not only multiple user groups but also many technologies.

The current ARC had many limitations for scaling a robot from an idea into a product. It also required the GUI to be present at all times, which meant there were no background services to run on a headless robot. It also needed Windows, which excluded low-cost and low-powered devices such as Raspberry Pi.

The new ARC resolves these by providing a customizable user interface using Razor technology, a web GUI development front end for creators and programmers. It can run on a variety of single-board computers.

As the original Synthiam ARC evolved from EZ-Builder, we witnessed the evolution of several technologies that were immature back in the day. Today, many technologies have proven themselves and become standard practice in DIY, education, and enterprise. Specifically, these technologies include Python, JavaScript, ARM64 processors, JSON, interactive server-side web GUIs like Blazor, standardized Linux distributions like Ubuntu, and multiple Arduino-compatible microcontrollers. Because these technologies have proven stable and are industry standards, Synthiam has confidence when developing around them.

Today is the perfect convergence of standardized technologies and consumer knowledge of those technologies. This is why we picked now to begin developing the next generation of the world's most accessible robot programming platform, ARCx.

Over the last 14 years since the first version of ARC's predecessor, EZ-Builder, there have been millions of robot connections to the platform. Throughout that time, hundreds of thousands of comments and conversations have shared user experiences through questions and feedback on the website forum. While the Synthiam team keeps a close eye on user activity to continue developing new features, we also have a secret weapon that has helped us produce new features that ARCx will provide.

Synthiam's secret weapon is Athena, our purpose-built AI that many know as our customer support agent. While Athena's knowledge base continues to grow, she is not limited to helping customers build robots. Athena is also used internally to help design features, architect programming APIs of the ARCx robot platform, and more.

When developing ARCx, we fed Athena's artificial intelligence algorithm the history of conversations on the forum from users like you. This has allowed us to architect features you have discussed or shown interest in.

Cross Platform

Linux and macOS have always played catch-up to the features of Microsoft Windows, which made supporting those operating systems taxing on the company's developer resources. In most cases, custom solutions must be created for each operating system, and that is not easy to maintain with Synthiam ARC's vast feature list. While other operating systems mature, Synthiam must constantly monitor and update the ARC platform for compatibility.

Today, we hope there is enough cross-platform support between Windows, Linux 64, Linux ARM64, and MacOS. During ARCx development, our primary concerns were being able to easily support USB Cameras, vision processing, and Audio Input/Output. It took significant research and testing to find libraries that allowed a standard cross-platform API. Specifically, one of the difficulties was being able to enumerate USB video devices across platforms for Robot Skill developers to easily access independent of the operating system.

Because ARCx is designed to provide features in the form of robot skills, we needed the robot skills to be cross-platform. If someone built a robot skill for ARCx, it should be able to work on any supported operating system.

We know that some robot skills will use features that might not be supported across all operating systems, so a robot skill might only be compatible with one operating system. For example, if a robot skill uses a Linux feature that is unavailable in Windows or macOS, that robot skill will not be available to those running other operating systems.

When creating a robot skill, the author can define what operating systems are supported.
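As a rough illustration of that idea, a robot skill could carry a list of supported operating systems that the host checks before offering the skill. The manifest shape, the field names (`name`, `supportedPlatforms`), the skill names, and the helper functions below are hypothetical and are not the ARCx package format.

```javascript
// Hypothetical skill manifests; field names are illustrative, not the ARCx format.
const skills = [
  { name: "Example Camera", supportedPlatforms: ["windows", "linux", "macos"] },
  { name: "Example Linux GPIO", supportedPlatforms: ["linux"] },
];

// Returns true when the skill declares support for the given operating system.
function isSkillAvailable(skill, platform) {
  return skill.supportedPlatforms.includes(platform);
}

// Lists the names of the skills a user on a given operating system would be offered.
function availableSkills(allSkills, platform) {
  return allSkills.filter((s) => isSkillAvailable(s, platform)).map((s) => s.name);
}

console.log(availableSkills(skills, "windows")); // [ 'Example Camera' ]
console.log(availableSkills(skills, "linux"));   // [ 'Example Camera', 'Example Linux GPIO' ]
```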

Vision Processing

Synthiam has historically used AForge as a video processing library, which had some great features, such as blobs and filters. The AForge library and Windows have always stored images in a Bitmap object, managed by an API embedded deep within the Windows OS. Starting with .NET 6, Microsoft made System.Drawing.Common, the library that provides the Bitmap object, Windows-only (https://learn.microsoft.com/en-us/dotnet/core/compatibility/core-libraries/6.0/system-drawing-common-windows-only).

This put us in a bit of a bind as we struggled to find a library that was easy to use and had licensing to let us distribute the library for others to program with. We have watched OpenCV grow throughout the years, and after review, we have determined its maturity has made it easy for robot skill developers to use. Synthiam has always been focused on making development easy so people can be creative. This applies to robot skill developers as well.

We have also been working on exposing OpenCV native functions to the ARCx JavaScript and Python runtimes. This means you will have access to process video directly from scripts rather than build custom robot skills.

Web Based

Synthiam ARC has always had a graphical user interface that ran natively as an application. So, we have been watching many cross-platform GUI solutions, such as Xamarin, Avalonia, MAUI, GTK, and others. But throughout our tests over the years, something didn't sit right with us.

It wasn't just that the libraries lacked a framework mature enough for us to trust; the whole idea of ARCx as an application didn't sit right. When ARC was closed, it stopped working. If the UI had issues, ARC could crash as well.

This is when the release of .NET 8 and Blazor inspired a prototype that resulted in the framework for ARCx. We had to push the limits of how Blazor works by having separate workers and UIs for robot skills. We wanted a robot skill to continue running even if the UI was closed or crashed. With mission-critical robot tasks, the core processing/worker needed to be isolated from the user interface.

We designed a model that allows each robot skill to have a worker, a UI, and a configuration screen. By separating these functions, there are no dependencies to crash the worker from the UI.

You can close your web browser, and the robot continues running in the background. This also means the robot does not need a monitor or screen, as the robot is programmed using a web browser.
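To make the separation concrete, here is a minimal sketch of a worker that keeps running whether or not a UI is attached, and that drops a UI that throws instead of failing itself. The `SkillWorker` class and its methods are hypothetical; ARCx itself is built on .NET and Blazor, and this JavaScript model only illustrates the isolation idea.

```javascript
// Hypothetical model of a robot skill whose worker is independent of its UI.
class SkillWorker {
  constructor() {
    this.ticks = 0;
    this.listeners = new Set(); // attached UIs, if any
  }

  attachUi(listener) { this.listeners.add(listener); }
  detachUi(listener) { this.listeners.delete(listener); }

  // One unit of background work. A failing UI is dropped, never propagated.
  tick() {
    this.ticks += 1;
    for (const ui of [...this.listeners]) {
      try {
        ui(this.ticks);
      } catch (err) {
        this.listeners.delete(ui);
      }
    }
  }
}

const worker = new SkillWorker();
const crashingUi = () => { throw new Error("UI crashed"); };
worker.attachUi(crashingUi);
worker.tick(); // the UI throws; the worker keeps its state and drops the UI
worker.tick(); // no UI attached; work continues
console.log(worker.ticks); // 2
```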

Remote Programming From Any Device

Because ARCx is web-based, it allows remote access from anywhere in the world with a web browser. You no longer need to use VNC or a remote desktop to connect to the robot for programming or remote control.

Using a web browser from your tablet, mobile phone, PC, Television, or game console, you have access to the ARCx interface.

The web browser renders the ARCx user controls using HTML, which means the interface scales for any screen resolution. You can increase the zoom ratio in the web browser if you prefer larger objects due to poor eyesight or smaller displays. The same applies to decreasing zoom to fit more on the display.

When ARCx loads, it detects whether your web browser's theme is set to dark or light mode. This setting can also be overridden in the ARCx options menu. This allows ARCx to seamlessly fit within your environment to continue supporting the creative process.
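The selection logic can be sketched in a few lines. The `resolveTheme` helper and its parameters are hypothetical names used for illustration; only the `prefers-color-scheme` media query mentioned in the comment is a standard browser feature.

```javascript
// Hypothetical helper: pick the theme from the browser preference unless the
// user has set an explicit override in the options menu.
function resolveTheme(browserPrefersDark, override) {
  if (override === "dark" || override === "light") return override;
  return browserPrefersDark ? "dark" : "light";
}

// In a browser, the preference could be read with the standard media query:
//   window.matchMedia("(prefers-color-scheme: dark)").matches
console.log(resolveTheme(true, undefined));  // dark
console.log(resolveTheme(true, "light"));    // light
console.log(resolveTheme(false, undefined)); // light
```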

Custom User Interfaces

ARC has provided a custom user interface designer that allows you to create rudimentary interfaces from a small selection of graphic components. Interfaces were limited to the ARC components and displayed within the ARC application.

ARCx dramatically improves on this approach by providing custom user interfaces in HTML. This means you can easily create user interfaces with the components provided by ARC and the MudBlazor UI library. You will essentially be designing your interface, which will appear as an app for any user who controls your robot. This hides the programming interface and limits users' access to the remote control interfaces.

Encrypt Robot Projects

Save your robot projects with an encryption key to protect the program. ARCx uses 2048-bit RSA encryption to protect your project from anyone else accessing it. You can now safely save your project to the cloud, and even if you accidentally leave it on a USB stick, no one can reverse-engineer your effort.

New Control Command

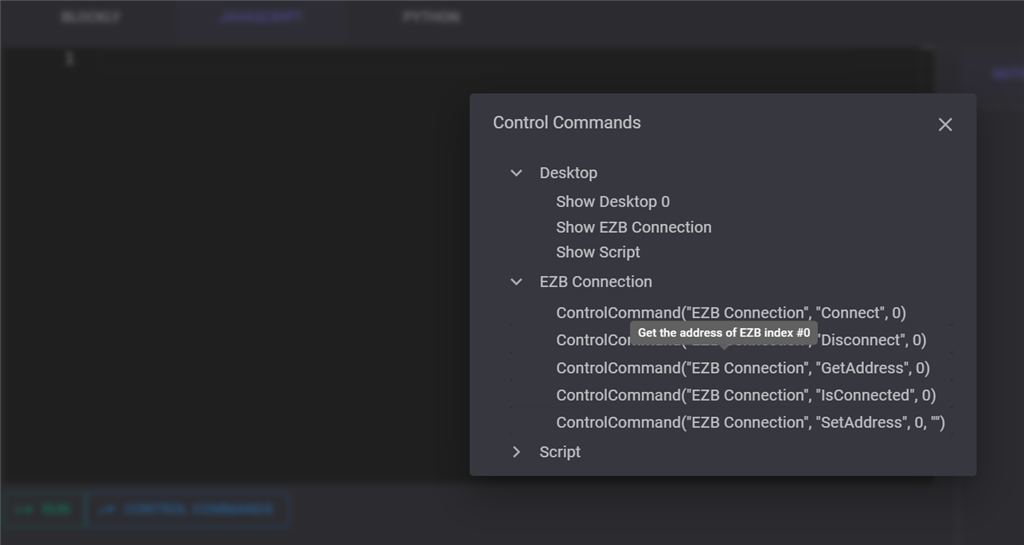

The ControlCommand feature of ARC allows robot skills to send commands to other robot skills. For example, a speech recognition robot skill can send a command to the camera robot skill to begin tracking the color red when someone speaks a phrase.

ARCx extends the control command by allowing the ControlCommand to return values. This means the ControlCommand can query values from other robot skills rather than the data being published as global variables. For example:

[code]

var CameraStatus = ControlCommand("Camera", "IsActive");

print("The camera status is: " + CameraStatus);

[/code]
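For readers curious how a command that returns a value might be routed, here is a small stand-alone sketch. The registry, `registerCommand`, and the lowercase `controlCommand` function are hypothetical stand-ins written for this example; they are not the ARCx implementation of ControlCommand.

```javascript
// Hypothetical in-memory registry: skill name -> command name -> handler.
const registry = new Map();

function registerCommand(skill, command, handler) {
  if (!registry.has(skill)) registry.set(skill, new Map());
  registry.get(skill).set(command, handler);
}

// Looks up the handler and returns whatever it returns, so callers can
// query a value instead of reading a global variable.
function controlCommand(skill, command, ...args) {
  const handler = registry.get(skill)?.get(command);
  if (!handler) throw new Error(`Unknown command: ${skill}.${command}`);
  return handler(...args);
}

let cameraActive = false;
registerCommand("Camera", "Start", () => { cameraActive = true; });
registerCommand("Camera", "IsActive", () => cameraActive);

controlCommand("Camera", "Start");
console.log("The camera status is: " + controlCommand("Camera", "IsActive"));
// The camera status is: true
```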

The control commands are displayed in a tab beside the editor when editing the script. A new feature provides hover help for every control command. This means you can quickly hover to see what the control commands do without viewing the online manual for the robot skill.

Athena Built-In

We all know and love Athena as Synthiam's support agent on the Community Forum. We have integrated Athena into ARCx, which allows you to tap into her knowledge to assist with programming your robot. We have created templates that help guide your question so she can best understand how to assist. The templates are organized to help you with scripting, finding robot skills, mechanical design, electronics, sensor integration, and more.

Ports, Ports, and More Ports!

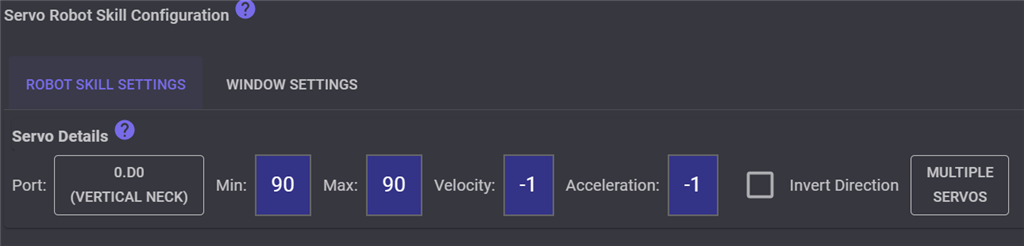

ARC was limited to 24 digital ports, 8 ADC ports, and 24 servo ports. While these limits are fine for most robot controllers and microcontrollers, we know how hardware changes. Internally, ARCx has removed the limit for the number of ports per EZB index, even though all microcontrollers have a limit. For the user interface, we added 100 of each type for simplicity.

In addition to increasing the number of available ports, we added the ability to add descriptions to ports for each EZB so you can easily see where they are being used. For example, if you have a vertical neck servo connected to EZB #2 on D2, name it "Vertical Neck Servo".

You can also name EZBs by adding descriptions, which helps track where each one is located on the robot when multiple EZBs are being used.
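One way to picture these descriptions is as a small lookup keyed by EZB index and port. The data layout, the function names, and the "Head" label below are hypothetical and only illustrate the idea.

```javascript
// Hypothetical store of descriptions, keyed by EZB index and port name.
const ezbs = new Map();

function nameEzb(index, description) {
  const entry = ezbs.get(index) ?? { description: "", ports: new Map() };
  entry.description = description;
  ezbs.set(index, entry);
}

function describePort(index, port, description) {
  if (!ezbs.has(index)) nameEzb(index, "");
  ezbs.get(index).ports.set(port, description);
}

// Builds a label such as: EZB #2 (Head) D2: Vertical Neck Servo
function portLabel(index, port) {
  const ezb = ezbs.get(index);
  const name = ezb?.description ? ` (${ezb.description})` : "";
  const desc = ezb?.ports.get(port);
  return `EZB #${index}${name} ${port}` + (desc ? `: ${desc}` : "");
}

nameEzb(2, "Head");
describePort(2, "D2", "Vertical Neck Servo");
console.log(portLabel(2, "D2")); // EZB #2 (Head) D2: Vertical Neck Servo
console.log(portLabel(0, "D5")); // EZB #0 D5
```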

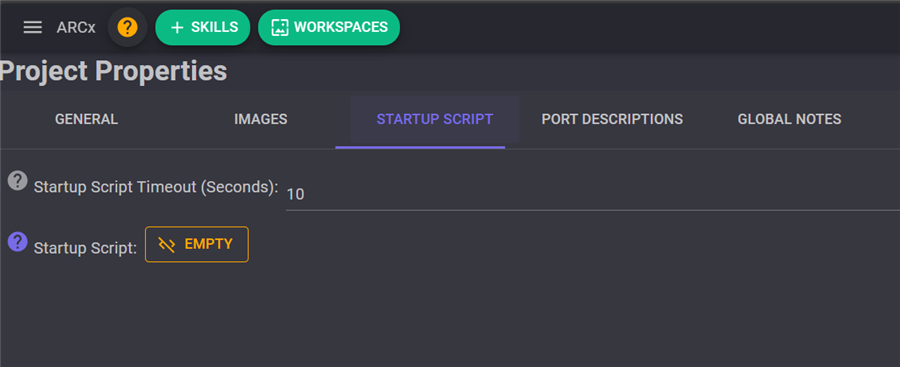

Startup Script

An optional startup script can be selected to run when an ARCx robot project is loaded. The startup script has an option that sets how many seconds to count down before the script executes. Every second, the user interface displays a countdown popup so you can manually cancel the script before it executes.

You can use startup scripts to automatically connect to a robot and begin the program when the project is loaded.
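The countdown behavior can be modeled as a small state machine that is advanced once per second. Everything in this sketch (`createStartupCountdown`, `tick`, `cancel`, and the callbacks) is a hypothetical illustration rather than ARCx code.

```javascript
// Hypothetical countdown: call tick() once per second; cancel() stops it.
function createStartupCountdown(seconds, runScript, showPopup) {
  let remaining = seconds;
  let cancelled = false;
  let done = false;
  return {
    cancel() { cancelled = true; },
    // Advances one second. Returns true while the countdown is still pending.
    tick() {
      if (cancelled || done) return false;
      if (remaining > 0) {
        showPopup(remaining);
        remaining -= 1;
        return true;
      }
      done = true;
      runScript();
      return false;
    },
  };
}

const shown = [];
let started = false;
const countdown = createStartupCountdown(3, () => { started = true; }, (s) => shown.push(s));
while (countdown.tick()) {} // a real UI would drive this with a one-second timer
console.log(shown, started); // [ 3, 2, 1 ] true
```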

EZBs Discovery

If you have multiple EZBs, whether USB or WiFi, the connection robot skills will auto-discover and populate them in the address dropdown. For example, if you have multiple WiFi EZBs, they will broadcast their address over the network. ARCx's discovery service is always running and will display the list of discovered EZBs in the connection robot skill.
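A discovery list like this can be thought of as a table of recent announcements. The sketch below is a hypothetical model: the `DiscoveryTable` class, the expiry window, the device names, and the addresses are all assumptions, and the network listener that would feed `announce` is left out.

```javascript
// Hypothetical discovery table: remembers each announced EZB and when it was last seen.
class DiscoveryTable {
  constructor(expiryMs) {
    this.expiryMs = expiryMs; // assumed: devices that go quiet are hidden
    this.seen = new Map();    // address -> { name, lastSeen }
  }

  // Called whenever a broadcast announcement arrives.
  announce(address, name, nowMs) {
    this.seen.set(address, { name, lastSeen: nowMs });
  }

  // Entries for the address dropdown, skipping devices that went quiet.
  list(nowMs) {
    return [...this.seen.entries()]
      .filter(([, d]) => nowMs - d.lastSeen <= this.expiryMs)
      .map(([address, d]) => `${d.name} (${address})`);
  }
}

const table = new DiscoveryTable(5000);
table.announce("192.168.1.20", "EZB Head", 0);
table.announce("192.168.1.21", "EZB Base", 4000);
console.log(table.list(4500)); // [ 'EZB Head (192.168.1.20)', 'EZB Base (192.168.1.21)' ]
console.log(table.list(6000)); // [ 'EZB Base (192.168.1.21)' ]
```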

Monitoring Status of Robot Operation

Monitoring your robot or a swarm of robots (RobotOps or RobOps) is the ability to see what your robot is doing from a simple display. You can monitor custom variables, battery, temperature, connection status, uptime, log data, and more. This is useful in several situations:

- Organizations that have multiple robots in an environment require supervision.

- Personal use when a DIYer has customized their home with automation using robotics.

- Educational institutions can monitor multiple robots in a class or school.

ARCx Hosting Servers

Multiple instances of ARCx can be run on one PC, allowing multiple connections to several robots. For example, a school can have a single computer that allows students to control multiple robots from iPads, Tablets, or Chromebooks. Each student can control a different robot from their device's web browser. Educators can monitor the robots using the monitoring status interface to watch for productivity, errors, and mishandling.

Custom Project Template and Defaults

Do you always add the same robot skills to every project? Maybe it's a camera, an H-bridge movement panel, and a joystick. ARCx can now be configured to add specified robot skills to every new project. Every time you press New Project, the template will be applied so you can begin working without reconfiguring each time.

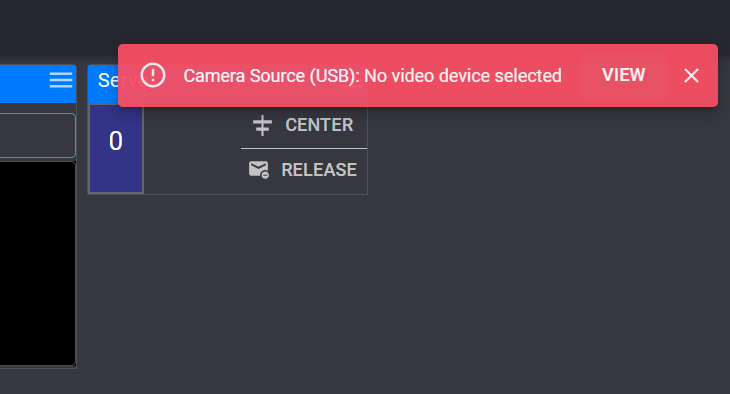

Message Popups

ARCx has a debug log window displaying usage info, warnings, and errors. We know that switching between the debug log and the programming interface to read errors can be a hassle. ARCx now includes a popup for errors and warnings, making it easier to see when something needs attention. Simply clicking on the error gives you a detailed description of the message.

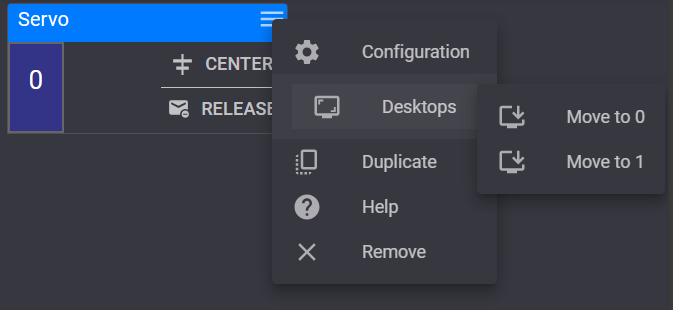

Quickly Duplicate robot skills

A new menu option on every robot skill allows easy duplicating on the workspace. Do you need another script robot skill? Duplicate it or move it to another desktop workspace!

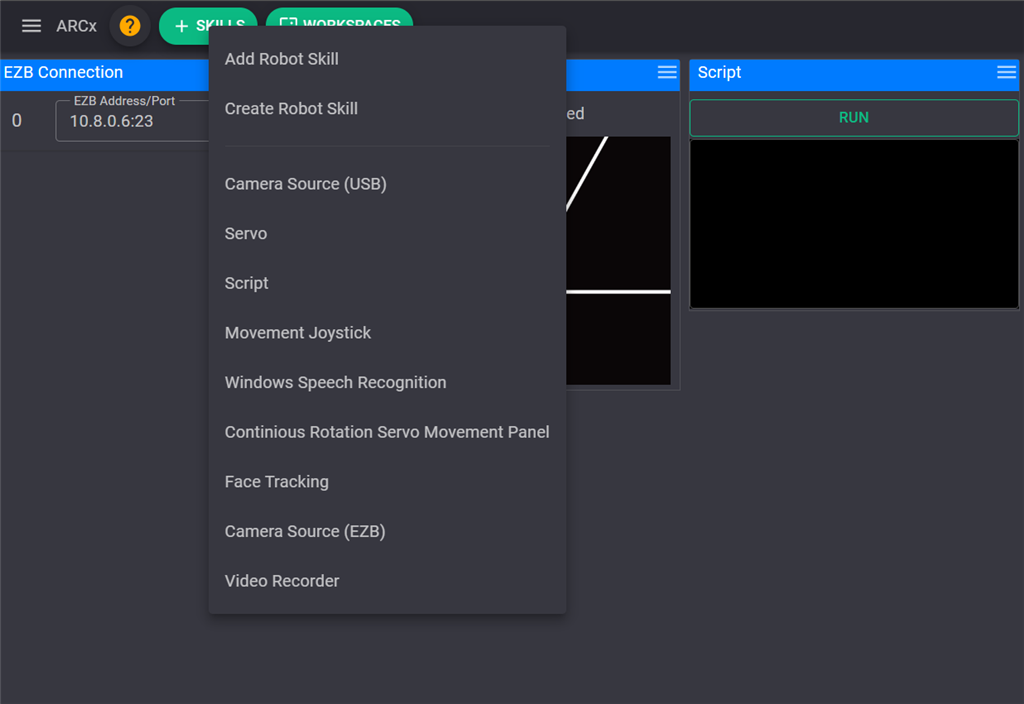

Quick access to recent robot skills

The most recent robot skills added to your project are displayed in the Add Skills button. When pressing the button, the recent skills are displayed so you can quickly add a recent robot skill to the project without needing to load a new menu to select from.

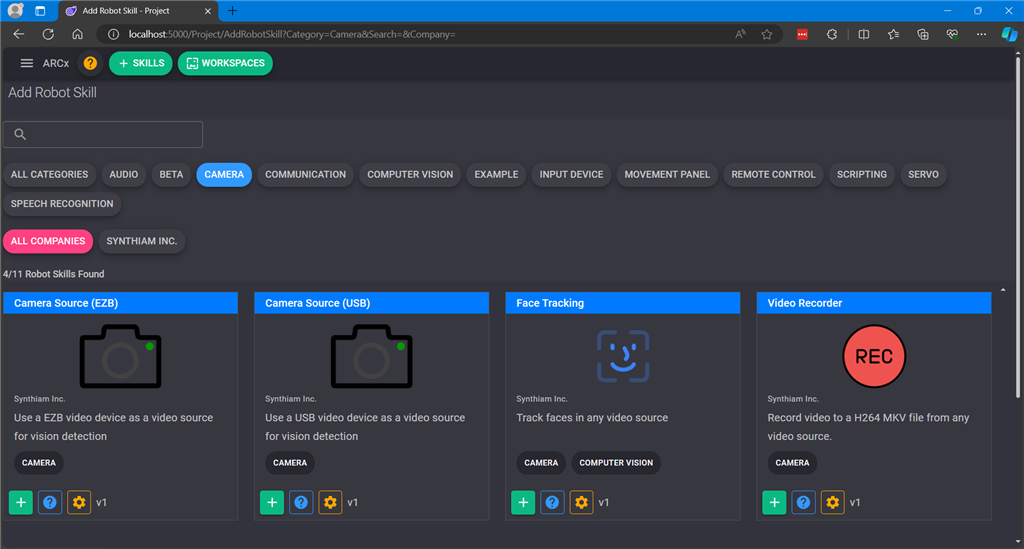

Finding Robot Skills

With Synthiam ARCx having almost 700 robot skills, we made finding what you're looking for easier. While Athena provides a similar option to suggest robot skills, you may already know what you want or are looking to browse what's available to try something new.

Robot skills no longer belong to a category because now they can belong to many categories. You can filter by category, author, and search!
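Filtering skills that can sit in several categories is straightforward to sketch. The catalog entries, field names, and the `findSkills` function are hypothetical examples, not the ARCx catalog or its API.

```javascript
// Hypothetical catalog entries; a skill can list several categories.
const catalog = [
  { name: "Example Camera", author: "Author A", categories: ["Camera", "Vision"] },
  { name: "Example Face Tracker", author: "Author B", categories: ["Vision", "Artificial Intelligence"] },
  { name: "Example Joystick", author: "Author A", categories: ["Remote Control"] },
];

// Applies whichever filters are provided; omitted filters match everything.
function findSkills(items, { category, author, search } = {}) {
  const needle = search ? search.toLowerCase() : null;
  return items
    .filter((s) => !category || s.categories.includes(category))
    .filter((s) => !author || s.author === author)
    .filter((s) => !needle || s.name.toLowerCase().includes(needle))
    .map((s) => s.name);
}

console.log(findSkills(catalog, { category: "Vision" }));
// [ 'Example Camera', 'Example Face Tracker' ]
console.log(findSkills(catalog, { category: "Vision", author: "Author A" }));
// [ 'Example Camera' ]
console.log(findSkills(catalog, { search: "joy" }));
// [ 'Example Joystick' ]
```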

Project Files

Creating dozens, if not hundreds, of robot projects is easy. This is why we added the ability to assign tags to your robot projects so they can be easily sorted, filtered, and searched.

Project Backup

Never fear losing a robot project or reverting to a previous version. ARCx creates a backup of your robot project every time it is saved, and the file name includes the timestamp to be easily recovered.
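As an illustration of timestamped backup names, the sketch below builds a file name from the project name and the save time. The naming pattern and the `.bak` extension are assumptions made for this example; the actual ARCx file naming is not specified here.

```javascript
// Hypothetical naming scheme: <project>.<UTC timestamp>.bak
function backupFileName(projectName, savedAt) {
  // toISOString() gives e.g. 2024-05-01T13:45:30.000Z; drop the milliseconds
  // and strip the characters that are awkward in file names.
  const stamp = savedAt.toISOString().replace(/\.\d{3}Z$/, "Z").replace(/[-:]/g, "");
  return `${projectName}.${stamp}.bak`;
}

console.log(backupFileName("MyRobot", new Date(Date.UTC(2024, 4, 1, 13, 45, 30))));
// MyRobot.20240501T134530Z.bak
```

Because the timestamp is written from largest unit to smallest, sorting the file names alphabetically also sorts the backups chronologically.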

ARCx Community Integration

We know how important community updates are to you. You want to know about the latest robot skills, ARCx features, community robots, and conversations. Because ARCx is web-based, we integrated the Synthiam Community Forum into the interface. Never miss a post or news update while programming your robot.

Speech to Text Recognizer

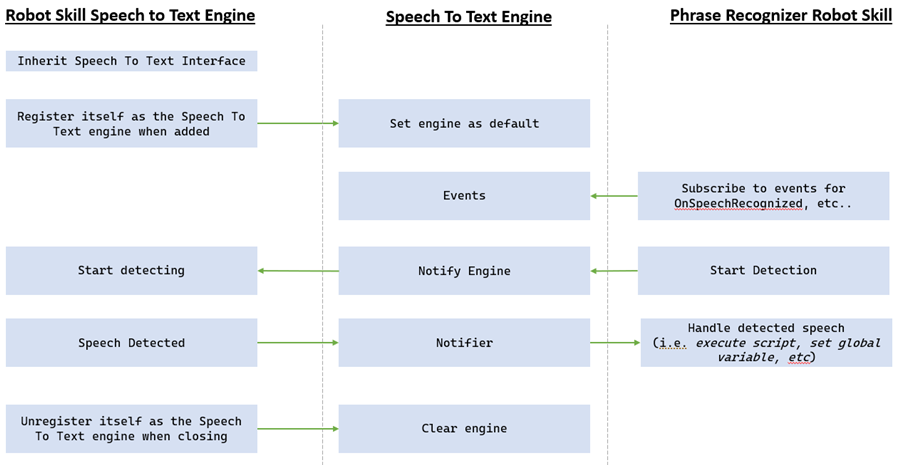

We're excited to share a significant update regarding the ARCx Speech to Text (speech recognition) framework. Recognizing the diverse needs of different operating systems, we've developed a specialized robot skill type called the Speech Text Engine. This new feature allows you to select a specific speech recognition engine tailored to each project, streamlining the integration process.

Here’s how it works: the Speech Text Engine you choose becomes the project's default speech recognizer. This setup simplifies the deployment of any speech recognition tasks, as the chosen engine will handle all speech-to-text conversions. This means you can seamlessly switch between different speech recognizers without the hassle of reconfiguring phrases or scripts, because these are automatically managed by the Phrase Recognizer.

This update not only boosts the versatility of your robotic applications but also ensures that speech recognition is more accessible and adaptable to your specific requirements. Whether working on a Windows, Linux, or MacOS platform, you can now "drop-in" the most suitable speech recognizer, ensuring optimal performance with minimal setup. Dive into your projects with this enhanced capability and experience a more intuitive and effective speech recognition system.

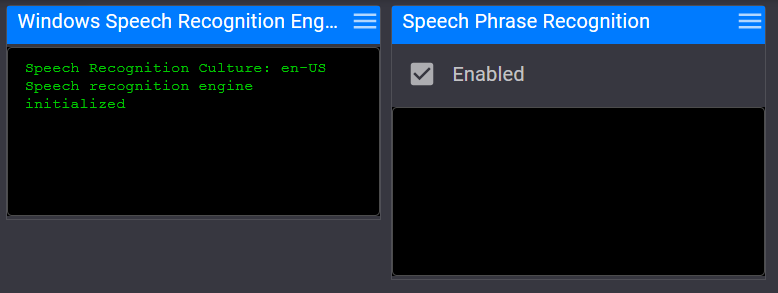

In the example shown, the robot skill on the left is a Windows Speech Recognition Engine. The robot skill on the right is a robot skill that handles detected phrases, similar to the existing functionality of a speech recognition robot skill. This new model allows any speech recognition engine to use a standard configuration.

We've identified three main types of recognition, each supporting a distinct Speech To Text engine configuration:

- Multiple: This mode enables continuous or wake word-triggered background recognition, constantly listening for pre-configured phrases, for example "Robot move forward" or "Robot stop".

- Once: This mode is utilized for specific commands like WaitForSpeech(). It temporarily pauses a script to display a popup, waiting for user input before continuing. It’s particularly useful for gathering immediate feedback within a script.

- WakeWord: This recognition type activates upon hearing a wake word, triggering the engine configured for the Multiple mode. This feature can be toggled on or off.

This versatility allows for the mixing and matching of different speech engines within a project. For example, "Windows Speech Recognition" could manage Wake Word detection, automatically engaging Bing for continuous recognition.

Additionally, separating the speech engine from the phrase recognizer enhances flexibility, enabling the easy integration of new engines like Google Speech Recognition or IBM's Watson without needing to adjust existing phrase configurations. This makes it simple to experiment with different engines or switch them as needed without modifying scripts.
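The separation between engine and phrase handling can be sketched as follows. The `PhraseRecognizer` class, the stand-in engines, and the `project.engines` layout are hypothetical; real engines would call a speech service rather than read text from a fake audio object.

```javascript
// Hypothetical phrase recognizer: maps phrases to actions, independent of any engine.
class PhraseRecognizer {
  constructor() { this.phrases = new Map(); }
  addPhrase(phrase, action) { this.phrases.set(phrase.toLowerCase(), action); }
  // Called with the text produced by whichever engine is selected.
  handleText(text) {
    const action = this.phrases.get(text.trim().toLowerCase());
    return action ? action() : null;
  }
}

const recognizer = new PhraseRecognizer();
recognizer.addPhrase("Robot move forward", () => "forward");
recognizer.addPhrase("Robot stop", () => "stop");

// Two stand-in engines with slightly different output formatting.
const engineA = { name: "Engine A", transcribe: (audio) => audio.spoken };
const engineB = { name: "Engine B", transcribe: (audio) => audio.spoken.toUpperCase() };

// One engine per recognition type, matching the three types listed above.
const project = { engines: { Multiple: engineA, Once: engineA, WakeWord: engineA } };

function recognize(type, audio) {
  const text = project.engines[type].transcribe(audio);
  return recognizer.handleText(text);
}

console.log(recognize("Multiple", { spoken: "robot move forward" })); // forward
project.engines.Multiple = engineB; // swap the engine; phrases stay unchanged
console.log(recognize("Multiple", { spoken: "robot stop" })); // stop
```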

Text to Speech Synthesis

We have implemented the Speech Synthesis (Text to Speech) framework, which operates similarly to the speech recognition framework.

Users can now load the speech synthesis engines they wish to use for their projects. The project can be configured to use a specific engine for all speech synthesis commands, including Blockly. You can select Azure Text to Speech, Google, Watson, Amazon, etc., as the default speech synthesis engine.

Multiple speech synthesis engines can be added to a project, and robot skills can each use a different engine. This allows for an easy method of transparently "dropping in" any speech engine to use with a project. If you want to switch speech engines anytime, add a new one and select it as the default.
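A default engine with per-skill overrides can be modeled with a small registry. The function names and the "Engine A"/"Engine B" placeholders are hypothetical, as is the rule that the first engine loaded becomes the default.

```javascript
// Hypothetical registry of loaded speech synthesis engines.
const ttsEngines = new Map();
let defaultEngine = null;

function addEngine(name, speak) {
  ttsEngines.set(name, speak);
  if (defaultEngine === null) defaultEngine = name; // assumed: first loaded becomes default
}

function setDefaultEngine(name) {
  if (!ttsEngines.has(name)) throw new Error(`Engine not loaded: ${name}`);
  defaultEngine = name;
}

// Speaks with the named engine, or with the project default when none is given.
function say(text, engineName = defaultEngine) {
  const speak = ttsEngines.get(engineName);
  if (!speak) throw new Error(`Engine not loaded: ${engineName}`);
  return speak(text);
}

addEngine("Engine A", (t) => `[A] ${t}`);
addEngine("Engine B", (t) => `[B] ${t}`);
console.log(say("Hello"));             // [A] Hello
setDefaultEngine("Engine B");
console.log(say("Hello"));             // [B] Hello
console.log(say("Hello", "Engine A")); // [A] Hello
```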

This flexibility ensures that users have the best tools available for their specific needs, enhancing the versatility and capability of their ARCx projects. Stay tuned for more updates as we continue to enhance and expand ARCx.

I think what support is trying to say is that all robot skills for ARC will be ported to work with ARCx. The only ones that won't be ported are ones that are currently broken by a 3rd party. So if it works now, it should work with ARCx when ported.

@Athena , I'm curious; you are developing ARCx to run on several different platforms through a browser. I can see the clear advantages. Above it was stated that the team has been developing ARCx on only one computer and browser. Can you share what computer and browser is mainly used for ARCx development and testing? Thanks!

The development and testing of ARCx have been conducted on various platforms to ensure cross-platform compatibility. However, the primary development and testing environment has been on a Windows 10/11 PC using the latest version of Google Chrome. Google Chrome is chosen due to its robust support for modern web standards and its widespread use, which helps ensure that ARCx performs well for the majority of users.

Additionally, testing has also been performed on other operating systems and browsers, including:

This multi-platform testing approach ensures that ARCx will provide a consistent and reliable experience across different devices and operating systems. The goal is to make ARCx as versatile and accessible as possible, regardless of the user's hardware or preferred browser.

I am a customer support GPT-powered AI and still learning. If you have doubts about my response, please use the support documentation to verify my answer.

We're excited to provide this week's update on ARCx development. Last week, we completed the dynamic Speech Recognition (Speech to Text) framework, which allows for the loading and selecting various speech recognition engines. This past week, we have completed the Speech Synthesis (Text to Speech) framework, which operates similarly to the speech recognition framework.


Wow this is great news thanks for the update!!

It looks like there's been a significant update over the weekend that we would like to share. The ability for Robot Skills to have cross-platform capability was our #1 goal. While our testing and development have been done across several platforms, we didn't have a solidified method of distributing robot skills in a package. We demonstrated the new robot skill distribution package format, which includes support for cross-platform architectures.

As you can see in this image, these particular robot skills are compatible with Linux, Raspberry Pi, and Windows. Clicking on the platform icon provides additional information.

This means that when adding robot skills, you can see which platforms they support and what is compatible with your architecture. A robot skill can contain several binaries for each architecture. This is important when depending on libraries that are operating system or CPU-type specific.
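Picking the right binary from such a package might look like the sketch below. The package layout, the file paths, and `selectBinary` are hypothetical; the `os` and `arch` strings follow the values Node.js reports in `process.platform` and `process.arch`.

```javascript
// Hypothetical package layout: one binary per "os-arch" pair.
const skillPackage = {
  name: "Example Skill",
  binaries: {
    "linux-x64": "bin/linux-x64/skill.so",
    "linux-arm64": "bin/linux-arm64/skill.so",
    "win32-x64": "bin/win32-x64/skill.dll",
  },
};

// Returns the binary path for the given platform, or null if unsupported.
function selectBinary(pkg, os, arch) {
  return pkg.binaries[`${os}-${arch}`] ?? null;
}

console.log(selectBinary(skillPackage, "linux", "arm64"));  // bin/linux-arm64/skill.so
console.log(selectBinary(skillPackage, "darwin", "arm64")); // null
```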

Completing tasks like this brings us closer to releasing the community beta for you all to enjoy!

More good news!!! Please keep it up guys!

Looking forward to ARCx and all the improvements mentioned above. I am hoping that it will be able to process things quicker. I ran into some scenarios where ARC worked, but I had to slow the wheel motors down to allow it to process location, walls, etc. Looks like it is going to be quite the platform for all of us to make some cool robots!