Mac

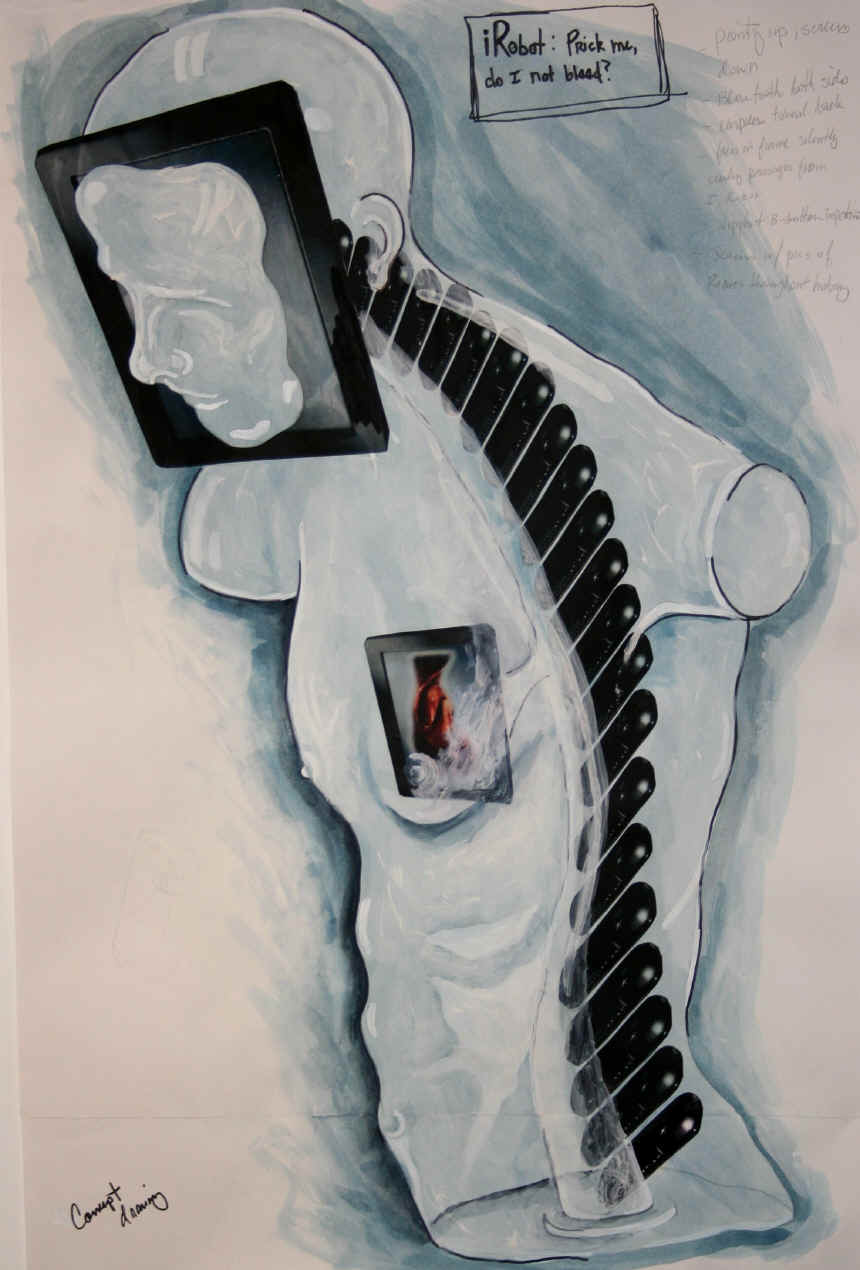

Hello everyone, I'm working on a robotic sculpture called "iRobot: Prick me, do I not bleed?" It's part of my series "The Classics" http://studio407.net/recent_work.htm

The robotics are pretty simple: the head/Nook/face uses face tracking with the camera and a servo. Eventually I will have the torso swivel to face the viewer, and I will implement speech.

But my issue is this: since it may end up in an art exhibition or two, I would like the sculpture to be autonomous. The "plan" was to have an Android tablet installed in the support pedestal running a custom mobile app. But am I understanding correctly that the mobile app would be an adjunct to the PC running ARC and not the main processing unit? In other words, will I still need a laptop running Windows and ARC communicating with the EZ-B v4 along with the app?

If this is the case, would, say, a Microsoft Surface Pro 4 (i5 processor) have the ability to run ARC, be installed in the pedestal, and make the sculpture autonomous?

I have an "in" with Barnes and Noble (my wife is the store manager, lol), so I could get an extremely good price on a Galaxy Tab S2 Nook, which has an octa-core processor. But ARC for the PC won't run on Android, true?

This is my first post. I love that DJ had the foresight and vision to take all the scattered C++, Arduino, and Processing code and package it in an easy-to-use graphical interface.

No... You only need a PC and ARC in order to develop your mobile app... After that you can just use your mobile Android tablet or phone. No need for a separate laptop running ARC...

Very cool and very, very creative! I love your concepts and work. I hope you continue to share this with us.

What Richard R said. He nailed it.

Excellent, excellent news, guys. Thanks so much. And thanks for the kind words, Dave. I've been watching your progress on the B9 and I have to say, what you're doing is.....intimidating. But in a really good way. I have a '71 Olds Cutlass that I've been restoring in my garage/studio and I've been through all three seasons maybe 12-15 times; watching as I work. So now I lovingly refer to the Olds as the Jupiter III :D

Again, thanks for the insight and thanks for the info, Richard. I'll update this thread as I make progress. I have to say this has been the most ambitious artwork I've taken on.

-Mac

I've been through the tutorials and watched the vids but I don't understand how to control the camera in the mobile app. I don't see how to add the control tabs (tracking, color, device, motion, etc) in the mobile interface. Is there a thorough tutorial that I'm not finding?

@Mac,

I would recommend a mini PC or a Microsoft Surface (Intel) with Windows 10

Android version: Camera control i.e. face tracking is not implemented although you can access the EZB camera feed.

Android version: Text to Speech output is only supported on the tablet speaker (no output on EZB speaker i.e. SayEZB)

Speech Recognition not implemented.

There are other limitations, only relevant if you will use them.

See https://synthiam.com/Tutorials/Help.aspx?id=196 for the supported mobile controls. I agree with ptp, a Windows computer is better suited for an autonomous robot.

You can get small, DC-powered Windows computers or inexpensive tablets that are more than capable of running ARC.

See https://synthiam.com/Community/Questions/8714 for a good discussion of what is available.

Alan

Face tracking on android or iOS is actually better than PC because it uses hardware processing.

Mobile control capabilities can be viewed on the mobile interface builder manual page, which lists the supported tracking types.

The tracking methods are enabled or disabled using control commands. You can add checkboxes to enable or disable each tracking type. View the Six or JD project for an example.

Lastly, the activities section in the learn tutorials has examples of how to use ControlCommand(). So does every other tutorial involving EZ-Script.

I'm on my phone and not able to write more than this

Dj

I mentioned not supported because the camera control says not implemented.

@ptp correct, there is no mobile control for the camera. However, there is a camera object that can be added to the mobile interface.

You can find out what supported features exist here: https://synthiam.com/Tutorials/Help.aspx?id=196

Thanks @Mac for the very kind words. Coming from an Associate Professor of Art with the mechanical skill to build a car, this is a huge compliment! It's also nice to find another fan of LIS. You must be a big fan to have watched all three seasons that many times. I do wish the scripts were better though. Do you know Netflix is going to do a reboot of the show in 2018? They already have most of the actors signed on. Can't wait.

More to this topic's point: I wish I had more to offer. I've been so busy getting the B9 built and basically working that I haven't really branched off into some of the other excellent features of the EZ-Robot platform. I'm extremely interested in this topic, as I'm really looking forward to setting up a mobile interface to control some body movements along with the arms when I want. Again, I'm not sure what all I can accomplish with the mobile app, but it's going to be fun to learn. I also plan to find the best tracking method so my B9 can turn and follow as people walk by and respond when it notices movement or a face. This sounds a lot like what you're trying to do, so I'll be watching and learning from the "Professor" if you are willing to share your process.

Good luck on your build and please keep us informed as you go.

More on the tracking with mobile topic...

Here's an excerpt from the JD project's Mobile Interface. This is the code which sits in the Checkbox for "Color Tracking".

Find out more about EZ-Script and ControlCommand() in the activity tutorial here: https://synthiam.com/Tutorials/Lesson/23?courseId=6

Of course, the camera control on the PC would need to have the servo/movement/scripts configured. Using the ControlCommand() you may enable/disable tracking types.

If your camera is to enable tracking right from the get-go, then simply add the ControlCommand() to enable tracking in the Connection Control, or have the checkbox set on the PC Camera control when the project is saved to the cloud.

@ptp I was just looking at the Surface. A nice little unit with a nice big price tag, lol. I'm wondering if I could simply use the Surface to run the PC version of Builder. I kind of like the complex graphical look of Builder more than the look of the apps. And it might make me appear smarter than I really am blush

@guru Thanks for the links. I did see the controls list, which is why I started going down this road in the first place, but I was confused as to why I wasn't getting the control tabs. But it sounds like, from what DJ was saying, I can create my own. I did read about the checkboxes, I just wasn't sure what their purpose was.

@DJ Thanks for the input, but I didn't mean to make you work on a Saturday eek I think I've been avoiding the EZ-Script tutes because I've spent the last couple of months trying to teach myself Arduino and got a little burnt out. Then I found your site and I thought "Why the heck didn't I find this two months ago?!" Not time wasted though. I know a little syntax and command structure now. I can read an Arduino sketch better than I can write one. I'll keep playing with the mobile interface builder and see what I come up with.

All great info. Thanks everyone :)

So if you're avoiding EZ-Script, that's okay. Simply save the Camera's Tracking State (i.e. the checkboxes on the tracking tab) when you save your project. Is your tracking going to move two servos (horizontal/vertical)?

If so, then that's quite simple and you will never need to touch EZ-Script.

1. Load the Camera control
2. Select and START the camera source device (i.e. EZ-B v4 camera)
3. Press the GEAR icon to open the configure menu
4. Select the servo TRACKING checkbox
5. Specify the horizontal and vertical servos and their ranges
6. Save the config menu
7. Switch to the TRACKING tab on the Camera control
8. Select your desired tracking type

There, your robot is tracking.

1. Add a Mobile Interface control to the project
2. Edit the mobile interface and add a camera to the view
3. Also add a CONNECTION to the view
4. Save the mobile interface
5. Now all you have to do is save it to the cloud to load onto your device

Voila. You have a vision tracking mobile app
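For anyone curious what the servo range setting in the steps above is doing conceptually, here is a rough Python sketch. It is not ARC's actual tracking code. The function name, the step size, the dead zone, and the assumption that a higher servo position turns the camera toward the right side of the frame are all made up for illustration.

```python
def next_servo_position(current, face_center_x, frame_width,
                        min_pos, max_pos, step=1, dead_zone=0.1):
    """Nudge a horizontal servo toward a detected face (illustrative only).

    current        -- current servo position
    face_center_x  -- x pixel of the face center in the camera frame
    frame_width    -- width of the camera frame in pixels
    min_pos/max_pos -- the servo range configured for tracking
    step           -- hypothetical amount to move per frame
    dead_zone      -- hypothetical fraction of the half-width treated as centered
    """
    half_width = frame_width / 2
    # Offset of the face from the frame center, scaled to -1.0 .. 1.0
    offset = (face_center_x - half_width) / half_width
    if abs(offset) <= dead_zone:
        # Face is close enough to center, so leave the servo alone
        return current
    # Assumption: increasing the position turns toward the right of the frame
    direction = 1 if offset > 0 else -1
    # Never leave the configured range
    return max(min_pos, min(max_pos, current + direction * step))
```

The clamp on the last line is the point of specifying a range: however far off-center the face is, the head never gets driven past its limits.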

@Mac One bit of advice is don't overthink things.... EZ Robot is as its name suggests... easy.... Doing basic things like face tracking can literally be, as @DJ mentions, a few clicks of the mouse....

Later on as you gain more experience... you can really rock your projects by learning EZ-Script..... :)

Sorry fellas, I'm working on the spine and my hands are covered in clay. The white things are plastic "blanks" for the cell phones which will run videos of robots through the ages as well as videos of the myriad of ways we are desecrating the Earth (which is why the robots take over, to protect us from ourselves - in the Asimov story).

Okay DJ, I've done all that you outlined but in builder only. I mounted the camera to the servo (just a single servo for now, to move the head left and right) and it tracks colors and faces quite nicely.

So what you're saying is that the mobile Interface Builder reads from the ARC project that's currently open and adds the necessary controls? That's pretty darn cool.

I'll upload a vid of the project using the Arduino and a couple of ultrasonic sensors to track movement. It had problems that I couldn't iron out through code or hardware and I wanted to use a camera anyway, so here I am.

Thanks Richard, I like that approach. I'm usually the one lecturing my students to slow down, take their time. But I have to present this thing to the board of trustees in about two months and I still have about...mmm...four months of work to do eek

DJ, I'll figure this out, dude. It's Saturday and you're still young! Go party or something. I know I would if I could. ;)

Mac, that's a real neat project :D

To answer your question about the ARC project on mobile: Yes. All mobile projects are created in ARC PC first. Then saved to the EZ-Cloud, and finally loaded on the mobile device.

Once you have it working on the PC, simply add a Mobile Interface to the project, add the camera, follow the steps above to save the project and voila! :D

Young at heart, maybe! I've been waist deep in regular expression parsing for EZ-Script over the last 48 hours. No rest until science fiction becomes our reality with robots mingling with society!

@Dave "Do you know Netflix is going to do a reboot of the show in 2018? They already have most of the actors signed on. Can't wait."

OMG OMG OMG! That's awesome! Is Billy Mumy involved? I know he'd been wanting to do this for a long time but Irwin Allen wouldn't hear of it. I thought the movie was pretty good for a cheesy sci-fi with very little horror. I was disappointed when they didn't make another.

But just for the record, Star Trek comes first. It has since I was something like 7 years old. After watching all 3 LIS seasons a couple of times, it was easy to work and not have to pay close attention to the screen. It also made me feel like I wasn't all alone while putting in the bazillion hours working on the car.

Thanks DJ. Last question of the night: I found the minimum system requirements for the PC (i5) but how about for a tablet? I know my quad-core PC (laptop) starts dropping frames after a few minutes of running the camera.

Update: I got the mobile app working. Unbeknownst to me, I had it all there the first time I tried. I just didn't upload it because I thought I didn't have the controls. So yes, verified, write the program in Builder, add the Mobile Interface with the same gizmos, upload to the cloud. And as DJ says; voila!

My current Nook tablet is a bit too slow but I think the octa-core should do the trick.

I'll update sculpture progress as it happens. My big challenge right now is getting this spine cast so it sits flat on the table/pedestal, houses the servo without it moving off-center, and holds the cell phones nice and tight.

I'm also trying to decide if I want to mount the EZ-b v4 on the back of the head/face Nook with or without the white housing. I'm leaning towards without.

Thanks, everyone. Have a good weekend. -Mac

Oh, almost forgot, here's a link to the first motion tracking attempt.

Thank goodness you found ez-robot :D

Very interesting stuff. When I was in college, I had a physics lab teacher who was an art student. All her art was based on science, and she always tried to show the beauty, or at least the aesthetic principles, in the physics experiments we were performing. I think she would have loved this.

Alan

@ DJ Agreed!

@guru When I was a kid, I wanted to be a scientist (whatever that meant) but I couldn't do the math, lol. My early artwork used a lot of Van de Graaff generators and Wimshurst machines. It was....honestly, kind of bad. But it was fun to make :D

Hi Mac, great looking project! I'm wondering how the face tracking will respond when there are (as there surely will be) multiple faces peering at your work simultaneously?

aceboss, face tracking in ARC prioritizes the largest (closest) face to the camera. Unless Mac writes the code to use multiple faces, it defaults to 1 which is the closest (largest) face.

In ARC Camera Control, you can specify how many objects to detect, and there will be variables created for each object.
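To make the "largest face wins" idea concrete, here is a small Python sketch of that selection rule. It is only an illustration of the behavior described above, not ARC's code; the Face tuple and the largest_face function are hypothetical names, and it assumes each detected face comes back as a bounding box in pixels.

```python
from typing import NamedTuple, Optional, Sequence


class Face(NamedTuple):
    """Hypothetical bounding box for one detected face, in pixels."""
    x: int
    y: int
    width: int
    height: int


def largest_face(faces: Sequence[Face]) -> Optional[Face]:
    """Return the face with the biggest bounding-box area, or None if empty.

    A bigger box usually means the person is closer to the camera,
    so this picks the viewer the sculpture should look at.
    """
    if not faces:
        return None
    return max(faces, key=lambda f: f.width * f.height)


# Example: two viewers, the second one is standing closer
crowd = [Face(10, 20, 40, 40), Face(150, 30, 90, 100)]
target = largest_face(crowd)
```

With several people in front of the piece, the head would follow whoever fills the most of the frame and switch when someone else steps closer.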

Awesome, thanks DJ!

Although I didn't know this was the answer, specifically, I did test the camera with several faces and that appeared to be the case. And that works well for this piece. I may also add in several colors to track and see how it behaves. Maybe even motion tracking. Anything to help it respond to the audience, even if they're not responding to IT.

I've been meaning to submit an update and will in the next few days. I was working and making progress and then Thanksgiving hit. I have a "chicken or the egg" obstacle right now. I can't move forward without building the pedestal, which will house most of the connections. But I can't build it until I know what all will be connected (etc.) and I won't know that until I have the pedestal built. Lol. That's happened a lot with this sculpture. I'll build one this week. If it works, great. If not, I'll build another and use the first for a house display of some kind.

Mac, Welcome to the world of Robotics. I'm always trying to resolve the chicken or egg question with my build. When I get the answer wrong (which happens all the time) I just rebuild and call it version 2. :P

Lol, V2, I like it!

Just some process pics. One is just a test fit of the cell phones and spine. Still some tweaking to do but the phones fit better than I could have hoped.

The mold is silicone with a plaster mother mold to keep it rigid. I cast the spine with a two part resin with black dye. Usually silicone gives multiple chances to get the cast right but I tore it up pretty good getting it out of the motor housing area at the top.

This makes me miss art college. Best days of my life! This is bloody brilliant mate!

Haha, thanks Quantumsheep. I don't know about brilliant but it certainly has been bloody. I've been using a drill for 40 years and yesterday, drilling through the spine for the phone cords, the drill bit caught, grabbed, and shot through the spine and into my finger. Pretty sure I hit bone. Luckily the spine wasn't damaged. That particular hole was exactly what I needed.

This is going to be awesome. I can't wait to see it staring people down as they pass. Love your computer screen in the background in picture 3!

Oh, lol, I didn't notice that. It's the DVD menu for season 1 :D